Generating High-Quality Stylized Images

In this article, we explore stylized image customization with PhotoMaker. In a previous article, we generated realistic photos; now we shift our focus to creating high-quality artistic images from only a few reference images.

We guide you step by step, from installing the necessary libraries to generating and visualizing your customized images. The process integrates two models into the inference pipeline: the SDXL Unstable Diffusers model for base image generation and the xl_more_art-full model for applying detailed artistic styles.

Theory Applied to Stylized Images

The process of stylized image customization with PhotoMaker builds upon the foundation of Stacked ID Embedding and diffusion models.

Stylized Image Generation

To generate stylized images, PhotoMaker combines identity fidelity with artistic elements defined by the text prompt. This involves several key steps:

1. Embedding Extraction and Fusion

- The process begins by passing a few reference images of the subject through an image encoder. The resulting embeddings 𝑒𝑖 capture the subject's unique identity features.

- Each image embedding 𝑒𝑖 is then fused with the feature vector 𝑐 of the class word in the prompt (e.g., “man” or “woman”), which is followed by the trigger word img. The desired style (e.g., “art by Van Gogh”, “fantasy”) comes from the rest of the text prompt, not from 𝑐.

- This fusion anchors the identity features to the subject's class, while the rest of the prompt remains free to describe the style.

2. Stacked ID Embedding

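The fused embeddings are concatenated along the length dimension to form the stacked ID embedding 𝑠∗. This embedding then replaces the class word's feature vector in the text embedding 𝑡, producing the updated text embedding 𝑡∗.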

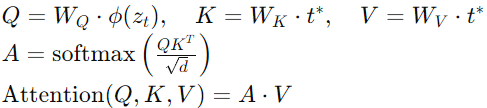

3. Cross-Attention Mechanism

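- PhotoMaker merges the stacked ID embedding into generation through the diffusion model's existing cross-attention layers: the image latents provide the queries, and the text embedding containing the stacked ID embedding provides the keys and values. This allows the model to attend to both the identity features and the specified style.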

4. Generative Process

- Finally, the diffusion model conditions on the updated text embedding 𝑡∗, which contains the stacked ID embedding 𝑠∗, to generate the final stylized image.

- The generative process involves refining a random latent code through denoising steps, guided by the text and ID embeddings.
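To make steps 1 to 3 concrete, here is a minimal NumPy sketch with toy dimensions and random weights. The two-layer fusion MLP, the sizes, and the class-word position are assumptions for illustration only; in PhotoMaker the image embeddings come from a trained image encoder, and the fusion and attention weights are learned.

```python
import numpy as np

rng = np.random.default_rng(0)

D = 8          # embedding width (toy value; real models are much wider)
N = 3          # number of reference images of the subject
L = 6          # number of tokens in the text prompt
class_pos = 2  # hypothetical position of the class word (e.g., "man") in the prompt

# Step 1: one embedding e_i per reference image (random stand-ins for image-encoder outputs)
image_embeds = rng.normal(size=(N, D))
# Text embedding t of the prompt; c is the feature vector at the class-word position
text_embeds = rng.normal(size=(L, D))
c = text_embeds[class_pos]

# Hypothetical two-layer MLP that fuses [e_i ; c] back to width D (random placeholder weights)
W1 = rng.normal(size=(2 * D, D)) / np.sqrt(2 * D)
W2 = rng.normal(size=(D, D)) / np.sqrt(D)

def fuse(e, c):
    h = np.concatenate([e, c]) @ W1
    h = np.maximum(h, 0.0)  # ReLU
    return h @ W2

# Step 2: stack the fused embeddings along the length dimension -> s* with shape (N, D)
s_star = np.stack([fuse(e, c) for e in image_embeds])

# Replace the class-word vector in t with s* -> t* with shape (L + N - 1, D)
t_star = np.concatenate([text_embeds[:class_pos], s_star, text_embeds[class_pos + 1:]])

# Step 3: single-head cross-attention; latents give Q, t* gives K and V
num_latents = 4
latents = rng.normal(size=(num_latents, D))
W_q, W_k, W_v = (rng.normal(size=(D, D)) / np.sqrt(D) for _ in range(3))

def cross_attention(z, cond):
    q, k, v = z @ W_q, cond @ W_k, cond @ W_v
    scores = q @ k.T / np.sqrt(D)
    scores -= scores.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # softmax over the L + N - 1 tokens
    return weights @ v, weights

out, attn = cross_attention(latents, t_star)
print(s_star.shape, t_star.shape, out.shape)  # (3, 8) (8, 8) (4, 8)
```

Because 𝑠∗ simply takes the class word's place in 𝑡∗, every latent position can attend to each reference image's identity tokens and to the style words of the prompt within the same softmax.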

Figure 1: Overview of the data construction pipeline used to train PhotoMaker's stacked ID embedding. The process starts with image downloading and face detection, followed by ID verification, cropping and segmentation, and finally captioning and marking. This pipeline ensures that the training data is high quality and relevant to identity embedding and image generation.

By following these steps, PhotoMaker can create high-quality stylized images that preserve the subject's identity while incorporating the artistic elements described in the text prompt. For a more in-depth look at the underlying mechanisms, refer to our previous article, “Customizing Realistic Human Photos Using AI With PhotoMaker”.

Integration of Additional Models

In this process, two specific models play a crucial role: the SDXL Unstable Diffusers model and the xl_more_art-full model. These models enhance the stylized image generation by providing a robust base and detailed artistic styles.

1. SDXL Unstable Diffusers Model

This model serves as the base for image generation. It is loaded into the pipeline and provides the foundational structure for the images.

2. xl_more_art-full Model

This model adds detailed artistic styles to the generated images. It is applied as a LoRA (Low-Rank Adaptation) weight to the base model.

The combined model improves the stylistic elements while preserving the identity features captured in the embeddings.
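Applying a LoRA means adding a low-rank update to selected weight matrices of the base model: W' = W + s·(α/r)·BA, where B and A are small trained matrices, r is the rank, α is a scaling constant, and s is a user-chosen strength. The NumPy sketch below uses toy sizes and random matrices (all values are assumptions, not taken from xl_more_art-full) to show that the update has rank at most r and that merging it into W gives the same output as applying the adapter on the fly.

```python
import numpy as np

rng = np.random.default_rng(0)

d_out, d_in, rank = 16, 12, 4  # toy sizes; rank is much smaller than d_out and d_in
alpha = 4.0                    # hypothetical LoRA scaling constant
lora_scale = 0.8               # hypothetical user-chosen strength for the style LoRA

W = rng.normal(size=(d_out, d_in))         # frozen base-model weight (e.g., an attention projection)
A = rng.normal(size=(rank, d_in)) * 0.01   # LoRA down-projection (stand-in for trained values)
B = rng.normal(size=(d_out, rank)) * 0.01  # LoRA up-projection (stand-in for trained values)

delta_W = (alpha / rank) * (B @ A)         # low-rank update: rank is at most `rank`
W_merged = W + lora_scale * delta_W        # "fused" weight used at inference

x = rng.normal(size=(d_in,))
# Applying the adapter on the fly: base path plus scaled low-rank path
y_unfused = W @ x + lora_scale * (alpha / rank) * (B @ (A @ x))
# Same result with the update merged into the weight
y_fused = W_merged @ x

print(np.allclose(y_unfused, y_fused), np.linalg.matrix_rank(delta_W))
```

Storing only A and B (here 4·12 + 16·4 values instead of 16·12) is what keeps style LoRAs small compared with full checkpoints such as SDXL Unstable Diffusers.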

Implementation in Python

Install and Load Libraries

First, install and import the libraries needed to run PhotoMaker for stylized image customization. Installing accelerate lets diffusers load large checkpoints such as SDXL faster and with less CPU memory.

```python
%%capture
# Capture the output of the commands to avoid cluttering the notebook
# (%%capture must be the very first line of the cell)

# Install the PhotoMaker package directly from the GitHub repository
!pip install git+https://github.com/TencentARC/PhotoMaker.git
# Clone the PhotoMaker GitHub repository to the local environment
!git clone https://github.com/TencentARC/PhotoMaker.git
# Install the diffusers library for diffusion models, used for image generation
!pip install diffusers
# Install the accelerate library for faster, lower-memory model loading
!pip install accelerate
# Install the additional packages listed in the cloned repository's requirements.txt
!pip install -r PhotoMaker/requirements.txt
# Install the peft library for parameter-efficient fine-tuning (LoRA) support
!pip install peft
# Change to the PhotoMaker directory
%cd PhotoMaker/
```

```python
import os  # Interact with the operating system (paths, directories)
import random  # Generate random numbers

import numpy as np  # Numerical operations
import peft  # HuggingFace library for parameter-efficient fine-tuning
import requests  # Make HTTP requests
import torch  # Tensor operations and model training/inference
from PIL import Image  # Python Imaging Library (PIL) for image processing

from diffusers import DDIMScheduler, EulerDiscreteScheduler  # Schedulers for diffusion models
from diffusers.utils import load_image  # Utility function to load images
from huggingface_hub import hf_hub_download  # Download models and other assets from the Hugging Face Hub
from photomaker import PhotoMakerStableDiffusionXLPipeline  # PhotoMaker's SDXL pipeline
```

Download Civitai Models

Civitai is a platform for sharing and downloading AI models, especially those for image generation and editing. Here, we download two models: xl_more_art-full and SDXL Unstable Diffusers. Both are saved in the safetensors format, which stores raw tensor data behind a small JSON header instead of pickled Python objects. This makes the files safe to load (no arbitrary code execution) and allows faster, memory-mapped loading than pickle-based .ckpt or .bin checkpoints.
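Because the safetensors layout is simple (an 8-byte little-endian header length, then a UTF-8 JSON header mapping tensor names to dtype, shape, and byte offsets, plus an optional "__metadata__" entry), you can inspect a downloaded file without loading any weights. The helper names below are hypothetical, and the "lora" substring check is a heuristic assumption based on common LoRA tensor naming; the safetensors package's `safe_open` offers the same information through `keys()` and `metadata()`.

```python
import json
import os
import struct

def read_safetensors_header(path):
    """Return the parsed JSON header of a .safetensors file without reading tensor data."""
    file_size = os.path.getsize(path)
    with open(path, "rb") as f:
        prefix = f.read(8)
        if len(prefix) != 8:
            raise ValueError(f"{path} is too short to be a safetensors file")
        (header_len,) = struct.unpack("<Q", prefix)  # unsigned 64-bit little-endian length
        # An HTML error page saved under a .safetensors name yields an absurd length here
        if 8 + header_len > file_size:
            raise ValueError(f"{path} does not look like a safetensors file")
        header_bytes = f.read(header_len)
    return json.loads(header_bytes)

def summarize_safetensors(path):
    """Hypothetical helper: count tensors and guess whether the file is a LoRA."""
    header = read_safetensors_header(path)
    metadata = header.pop("__metadata__", {})
    names = list(header)
    # Heuristic assumption: LoRA files usually contain "lora" in their tensor names
    looks_like_lora = any("lora" in name.lower() for name in names)
    return {"num_tensors": len(names), "looks_like_lora": looks_like_lora, "metadata": metadata}
```

Once the downloads below finish, calling `summarize_safetensors("civitai_models/xl_more_art-full.safetensors")` is a quick way to confirm that the file is a valid safetensors file rather than an error page.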

xl_more_art-full

The xl_more_art-full model is a LoRA (Low-Rank Adaptation) designed to enhance images with artistic elements. Rather than processing input images, it adds small low-rank updates to the base model's weights, so the images generated by the combined model gain artistic styles and detail.

```python
api_token = ""  # Your API token from https://civitai.com/ (create a new one in account settings)
model_version_id = "152309"  # Civitai model version ID of xl_more_art-full

# API URL to download the model
url = f"https://civitai.com/api/download/models/{model_version_id}?type=Model&format=SafeTensor&size=pruned&fp=fp16"

# Authorization header carrying the API token
headers = {"Authorization": f"Bearer {api_token}"}

os.makedirs("civitai_models", exist_ok=True)  # Create the directory if it doesn't exist

# Stream the download so the whole file is never held in memory at once
response = requests.get(url, headers=headers, stream=True, timeout=60)

if response.status_code == 200:
    model_path = os.path.join("civitai_models", "xl_more_art-full.safetensors")  # Define the model path
    with open(model_path, "wb") as file:  # Save the file chunk by chunk
        for chunk in response.iter_content(chunk_size=1 << 20):  # 1 MiB chunks
            file.write(chunk)
    print(f"Model version {model_version_id} downloaded successfully to {model_path}.")
else:
    print(f"Failed to download the model. Status code: {response.status_code}, Response: {response.text}")
```

SDXL Unstable Diffusers

SDXL Unstable Diffusers is a community fine-tune of Stable Diffusion XL published on Civitai. Unlike xl_more_art-full, it is a full model checkpoint rather than a LoRA, so it replaces the default SDXL weights in the pipeline and serves as the base for the stylized transformations applied by the xl_more_art-full model.

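```python
# Download the model from the specified URL and save it to the "civitai_models" folder
# with the filename "sdxlUnstableDiffusers_v11.safetensors".
# -L follows Civitai's redirect to the file host; -f makes curl fail on HTTP errors
# instead of saving an error page under the .safetensors filename.
!curl -fL -o civitai_models/sdxlUnstableDiffusers_v11.safetensors https://civitai.com/api/download/models/276923
```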
