Image Analysis with AI by Automating Captions, Detection and Similarity Search in Python

Image analysis with AI has advanced significantly in recent years, driven by the rapid evolution of AI models and computing technologies.

As a result, these innovations enable a more accurate and efficient interpretation of visual data, thus transforming the way we interact with digital images.

Furthermore, leading AI models such as BLIP for caption generation, YOLOv9 for object detection, and CLIP and ViT for similarity searches are at the forefront of this transformation.

This article provides an end-to-end solution that combines the strengths of BLIP, YOLOv9, CLIP, and ViT for AI-based image content analysis.

By integrating these models, we can create a powerful toolset for various applications, including automated caption generation, object detection, and similarity search, thereby enhancing the capabilities of current systems.

We also rely on open-source technologies to keep the solution cost-effective.

While proprietary technologies such as GPT-4 with Vision (GPT-4V) may offer more advanced capabilities, they often come at a higher cost.

Moreover, the use of open-source models democratizes access to cutting-edge AI tools, enabling a wider range of users to benefit from these innovations.

This article is structured as follows:

- Caption Generation with BLIP

- Object Detection with YOLOv9

- Semantic & Visual Similarity with CLIP and ViT

- Integrated end-to-end Python Code

- Applications, Limitations and Improvements

1. Caption Generation with BLIP

Caption generation is a key task in image content analysis, aiming to provide a concise textual description that captures the salient features and context of an image.

Salesforce Research developed BLIP, a model that leverages synergies between visual and linguistic modalities to produce accurate and contextually relevant captions, thereby setting a new standard in image captioning.

Moreover, the model actively distinguishes between correct and incorrect image-caption pairs, which enhances its ability to learn the intricate relationships between visual features and their corresponding textual descriptions.

1.1 Detailed Pre-training Process

1. Masked Language Modeling (MLM): In this task, the model randomly masks words in the caption and predicts them from the context of the remaining words and the visual content of the image. Mathematically, this can be represented as:

Equation 1. This formula represents the Masked Language Model (MLM) loss, which is used during pretraining to enable the model to predict the probability of a masked word given the context of surrounding words and visual features.

where w_i is the masked word, w_{-i} represents the context words, v denotes the visual features, and P(w_i | w_{-i}, v) is the probability of predicting the masked word given the context and visual features.
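With the symbols defined above, a loss of this kind is typically written as a negative log-likelihood summed over the masked positions. The index set M (the masked positions) is notation assumed here for illustration:

```latex
\[
\mathcal{L}_{\mathrm{MLM}} = -\sum_{i \in M} \log P\left(w_i \mid w_{-i}, v\right)
\]
```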

2. Image-Text Matching (ITM): This task aims to align the image and caption by predicting whether they match or not. The model actively distinguishes between correct and incorrect image-caption pairs. The loss function for this task is typically a binary cross-entropy loss:

Equation 2. This formula defines the Image-Text Matching loss, determining the model's ability to correctly align images with their captions.

Where y is the binary label indicating whether the image and caption match, s is the similarity score computed by the model, and σ is the sigmoid function.
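Using the definitions of y, s, and σ given here, the standard binary cross-entropy form of this loss for a single image-caption pair can be written as:

```latex
\[
\mathcal{L}_{\mathrm{ITM}} = -\left[\, y \log \sigma(s) + (1 - y) \log\left(1 - \sigma(s)\right) \right]
\]
```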

The overall pre-training objective combines the MLM and ITM losses, strategically enhancing the model’s learning efficiency:

Equation 3. This formula shows the total pretraining loss as a sum of the Masked Language Model loss and the Image-Text Matching loss, combining textual and visual understanding.
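To make the combined objective concrete, here is a minimal numeric sketch of L = L_MLM + L_ITM in plain Python. The function names and the toy probabilities are assumptions chosen for illustration; real training computes these quantities over batches of model outputs.

```python
import math

def mlm_loss(masked_token_probs):
    """Negative log-likelihood of the correct masked words.

    masked_token_probs holds P(w_i | w_-i, v) for each masked word
    (hypothetical toy values, not real model outputs).
    """
    return -sum(math.log(p) for p in masked_token_probs)

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def itm_loss(y, s):
    """Binary cross-entropy for one image-caption pair.

    y is 1 if the pair matches and 0 otherwise; s is the raw similarity score.
    """
    p = sigmoid(s)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def total_loss(masked_token_probs, y, s):
    # Total pre-training loss: L = L_MLM + L_ITM
    return mlm_loss(masked_token_probs) + itm_loss(y, s)

# Toy example: two masked words predicted with probabilities 0.5 and 0.25,
# and a matching pair (y = 1) with similarity score s = 0.
loss = total_loss([0.5, 0.25], y=1, s=0.0)
print(round(loss, 4))  # 2.7726, i.e. 4 * ln(2)
```

The loss shrinks as the model assigns higher probability to the correct masked words and scores matching pairs more confidently.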

After pre-training, the model can be fine-tuned on a smaller dataset of image-caption pairs for the specific task of caption generation.

See the original paper for further details:

- Li, J., Li, D., Xiong, C., & Hoi, S. (2022). BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation. arXiv preprint arXiv:2201.12086.

1.2 Implementing BLIP for Caption Generation

In the code snippet below, the BlipProcessor and BlipForConditionalGeneration classes from the transformers library are used to load the pre-trained BLIP model and its associated processor.

The get_image_caption function takes an image URL as input, preprocesses the image using the BLIP processor, generates a caption using the BLIP model, and decodes the caption from the model’s output.

This function can be used to generate captions for any given image, demonstrating the practical application of the BLIP model in image captioning tasks.

from PIL import Image
import requests
from transformers import BlipProcessor, BlipForConditionalGeneration

# Load the pre-trained BLIP captioning model and its processor
processor = BlipProcessor.from_pretrained("Salesforce/blip-image-captioning-base")
model = BlipForConditionalGeneration.from_pretrained("Salesforce/blip-image-captioning-base")

def get_image_caption(image_url):
    """Download an image and return the caption generated by BLIP."""
    # Load the image from the URL and make sure it is in RGB mode
    response = requests.get(image_url, stream=True)
    response.raise_for_status()
    image = Image.open(response.raw).convert("RGB")

    # Preprocess the image, generate token IDs, and decode them into text
    inputs = processor(image, return_tensors="pt")
    outputs = model.generate(**inputs)
    caption = processor.decode(outputs[0], skip_special_tokens=True)
    return caption

# Example usage
image_url = 'http://images.cocodataset.org/val2017/000000039769.jpg'  # Replace with your image URL
caption = get_image_caption(image_url)
print("Generated Caption:", caption)

GENERATED CAPTION: a small dog wearing a sombrel hat

Figure. 1: Target image utilized as input for caption generation via the BLIP model.

2. Object Detection with YOLOv9

Object detection has significantly advanced with YOLOv9, building upon its predecessors’ strengths and introducing innovative features to enhance performance.

A key feature of YOLOv9 is Programmable Gradient Information (PGI). It allows the model to retain complete input information throughout the network, preserving the integrity of the gradient information used for weight updates, which is crucial for the learning process.

The recently released YOLOv9 can be found in the GitHub repository of WongKinYiu. This repo provides access to the code, pretrained models, and instructions on how to use YOLOv9 for custom object detection tasks.

2.1 Advanced Features of YOLOv9

Programmable Gradient Information (PGI):

PGI addresses the information bottleneck issue in deep networks. It ensures that complete input information is retained throughout the network. The concept of PGI can be mathematically represented as follows:

Equation 4. Maintaining Information: The amount of information stays the same or decreases as it passes through layers of the network.

Where:

- I denotes mutual information.

- X is the input data.

- f_θ and g_ϕ are transformation functions with parameters θ and ϕ, respectively.
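With these symbols, the information bottleneck relation described in the YOLOv9 paper can be written as follows, where each additional transformation can only preserve or reduce the mutual information with the input:

```latex
\[
I(X, X) \geq I\left(X, f_\theta(X)\right) \geq I\left(X, g_\phi\left(f_\theta(X)\right)\right)
\]
```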

PGI utilizes an auxiliary reversible branch to generate reliable gradients. This branch is built on the concept of reversible functions, where a function r has an inverse transformation v:

Equation 5. No Loss Transformation: The transformation ensures no information loss from the input.

Where r_ψ and v_ζ are reversible functions with parameters ψ and ζ.
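Written out with these symbols, the reversibility condition and its consequence for mutual information take the following form (a reconstruction from the definitions given here, following the YOLOv9 paper):

```latex
\[
X = v_\zeta\left(r_\psi(X)\right), \qquad
I(X, X) = I\left(X, r_\psi(X)\right) = I\left(X, v_\zeta\left(r_\psi(X)\right)\right)
\]
```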

Generalized Efficient Layer Aggregation Network (GELAN):

GELAN is designed based on gradient path planning, optimizing the balance between model complexity, detection precision, and computational efficiency.

The architecture of GELAN can be described as a combination of CSPNet and ELAN, where the key component is the Efficient Layer Aggregation Network (ELAN) block. The ELAN block can be mathematically represented as:

Equation 6. Layer Output: Each layer adds its transformation to the input for the next layer.

Where:

- X_l and X_{l+1} are the input and output of the l-th ELAN block, respectively.

- F_{θ_{l+1}} is the transformation function of the l-th layer with parameters θ_{l+1}.
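Consistent with the caption and the symbols listed here, the additive layer update can be written as below. This is a reconstruction from the definitions, so treat the exact form as an assumption:

```latex
\[
X_{l+1} = X_l + F_{\theta_{l+1}}\left(X_l\right)
\]
```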

Performance and Efficiency

YOLOv9 demonstrates improved performance across various model sizes (small, medium, large), with a significant reduction in parameters and computational demands. For instance, the YOLOv9-C model operates with 42% fewer parameters and 21% less computational demand compared to YOLOv7 AF, while achieving comparable accuracy.

For detailed technical information, please refer to the following paper:

- Wang, C.-Y., Yeh, I.-H., & Liao, H.-Y. M. (2024). YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv preprint arXiv:2402.13616

In the Python script below, we showcase YOLOv9’s object detection implementation. The script automates the setup process, downloads pre-trained weights, and processes a sample image to detect objects. Results are then displayed, illustrating YOLOv9’s practical application.

Please note that while we are using it on a sample image, the model can be used on video files and live video streams. Refer to this notebook for further details on using YOLOv9 with video.

Getting Started With The Recently Announced YOLOv9

import os
import subprocess
import sys

import requests
from IPython.display import Image as IPythonImage, display

def download_file(url, destination):
    """Download a file with requests and save it to destination."""
    response = requests.get(url)
    response.raise_for_status()
    with open(destination, 'wb') as file:
        file.write(response.content)

# Clone the YOLOv9 repository and install its dependencies (first run only)
if not os.path.exists('yolov9'):
    subprocess.run(['git', 'clone', 'https://github.com/SkalskiP/yolov9.git'], check=True)
    subprocess.run([sys.executable, '-m', 'pip', 'install', '-r', 'yolov9/requirements.txt'], check=True)

# Create directories for weights and data
os.makedirs('yolov9/weights', exist_ok=True)
os.makedirs('yolov9/data', exist_ok=True)

# Download YOLOv9 model weights
download_file('https://github.com/WongKinYiu/yolov9/releases/download/v0.1/yolov9-c.pt', 'yolov9/weights/yolov9-c.pt')

# Download a sample image
sample_image_url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
sample_image_path = 'yolov9/data/sample.jpg'
download_file(sample_image_url, sample_image_path)

def detect_and_display(image_path, weights_path='yolov9/weights/yolov9-c.pt', conf_threshold=0.1):
    """Run YOLOv9 detection on an image and display the annotated result."""
    # Run the YOLOv9 detection script in a subprocess
    result = subprocess.run([sys.executable, 'yolov9/detect.py', '--weights', weights_path, '--conf', str(conf_threshold), '--source', image_path, '--device', 'cpu'], capture_output=True, text=True)
    print(result.stdout)
    print(result.stderr)

    # Find the most recently modified run directory
    runs_dir = os.path.join('yolov9', 'runs', 'detect')
    latest_run_dir = max(os.listdir(runs_dir), key=lambda d: os.path.getmtime(os.path.join(runs_dir, d)))

    # Construct the path to the annotated result image
    result_image_path = os.path.join(runs_dir, latest_run_dir, os.path.basename(image_path))
    print(result_image_path)

    # Display the resulting image (requires a notebook environment)
    display(IPythonImage(filename=result_image_path, width=600))

# Example usage
detect_and_display(sample_image_path)

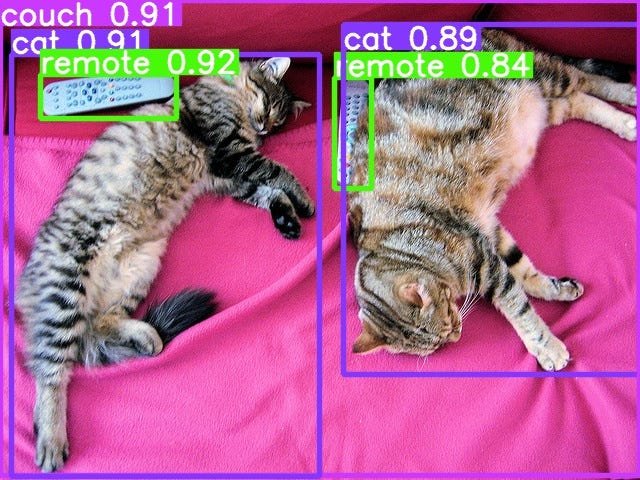

Figure. 2: Example of Object Detection: A cat and a remote detected on a couch, demonstrating the precision and practical application of YOLOv9's object detection capabilities.
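The conf_threshold argument of detect_and_display controls which detections are kept: boxes scored below the threshold are discarded. The sketch below illustrates only that filtering idea. The Detection type, the filter_detections helper, and the sample values are hypothetical, and the sketch does not reproduce YOLOv9's actual post-processing (which also includes non-maximum suppression).

```python
from typing import NamedTuple

class Detection(NamedTuple):
    label: str
    confidence: float
    box: tuple  # (x_min, y_min, x_max, y_max) in pixels

def filter_detections(detections, conf_threshold=0.1):
    """Keep detections with confidence >= conf_threshold, highest first."""
    kept = [d for d in detections if d.confidence >= conf_threshold]
    return sorted(kept, key=lambda d: d.confidence, reverse=True)

# Hypothetical detections for illustration, not real YOLOv9 output.
detections = [
    Detection("remote", 0.71, (40, 70, 180, 120)),
    Detection("cat", 0.92, (10, 50, 300, 400)),
    Detection("couch", 0.08, (0, 0, 640, 480)),
]

for d in filter_detections(detections, conf_threshold=0.1):
    print(f"{d.label}: {d.confidence:.2f}")
```

A low threshold such as 0.1 keeps more candidate boxes at the cost of more false positives. Raising it trades recall for precision.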

3. Semantic & Visual Similarity with CLIP and ViT

Today, semantic and visual similarity tasks are crucial for understanding and organizing the vast amounts of visual data, thereby playing a pivotal role in data management.

These tasks leverage deep learning models to find similar images either based on the content’s semantic meaning or visual appearance.

Two prominent models are CLIP, developed by OpenAI, and Vision Transformer (ViT), which applies the transformer architecture to image classification tasks.

3.1 Semantic Search with CLIP

CLIP is designed to understand images in the context of natural language descriptions. It is trained on a large collection of image-text pairs, learning to associate images with their textual descriptions.

The model consists of two main components, a text encoder and an image encoder, which map text and images into a shared high-dimensional space where semantic similarity corresponds to proximity.
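To illustrate what "proximity in a shared space" means in practice, the following sketch compares a text embedding with two image embeddings using cosine similarity. The three-dimensional vectors and the image names are made up for illustration; real CLIP embeddings have hundreds of dimensions and come from the trained encoders.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings in a shared text-image space.
text_embedding = [1.0, 0.0, 1.0]
image_embeddings = {
    "dog_with_hat": [0.9, 0.1, 0.8],
    "city_skyline": [0.0, 1.0, 0.1],
}

# Score every image against the text and pick the closest one.
scores = {name: cosine_similarity(text_embedding, emb)
          for name, emb in image_embeddings.items()}
best_match = max(scores, key=scores.get)
print(best_match)  # dog_with_hat
```

Because cosine similarity depends only on direction, embeddings are compared independently of their magnitude.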

The training objective is to maximize the cosine similarity between the correct pairs of images and text while minimizing it for incorrect pairs. Mathematically, this can be expressed as:

Equation 7. This formula is a type of loss function that is central to models learning from image-text pairings. It calculates how well the model matches an image with its text description compared to other possible matches in the data set.

where L is the loss, sim(I,T) is the cosine similarity between the image I and text T embeddings, and τ is a temperature parameter that scales the similarity scores.
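Using these symbols, the image-to-text direction of such a contrastive loss is commonly written as below. The index j over the N candidate texts T_j in a batch is notation assumed here; CLIP also applies the symmetric text-to-image term.

```latex
\[
L = -\log \frac{\exp\left(\mathrm{sim}(I, T) / \tau\right)}{\sum_{j=1}^{N} \exp\left(\mathrm{sim}(I, T_j) / \tau\right)}
\]
```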

3.2 Visual Similarity with ViT

ViT applies the transformer architecture to image classification tasks. It treats an image as a sequence of fixed-size patches, linearly embeds each of them, and then processes the sequence with a standard transformer encoder.
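The patch-splitting step can be sketched in a few lines of plain Python. The split_into_patches helper and the toy 4x4 image are illustrative assumptions; an actual ViT works on multi-channel images (for example, 16x16-pixel patches) and follows this step with a learned linear projection.

```python
def split_into_patches(image, patch_size):
    """Split a 2D image (list of rows) into flattened patches, in row-major order."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Flatten one patch_size x patch_size block into a single vector.
            patch = [image[top + r][left + c]
                     for r in range(patch_size)
                     for c in range(patch_size)]
            patches.append(patch)
    return patches

# A toy 4x4 single-channel "image" with pixel values 0..15.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
patches = split_into_patches(image, patch_size=2)
print(len(patches))  # 4
print(patches[0])    # [0, 1, 4, 5]
```

Each flattened patch plays the role of a token in the sequence that the transformer encoder processes.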

Positional embeddings are added to retain positional information. The self-attention mechanism in transformers allows ViT to weigh the importance of different patches when classifying an image, which is inherently useful for identifying similar images. The transformer’s output for a patch can be formulated as:

Equation 8. This formula depicts an attention mechanism used in neural networks to weigh the importance of different pieces of information. It helps the model to focus on the most relevant parts of the data to make better predictions or generate more accurate outputs.

Where Q, K, and V represent the query, key, and value matrices derived from the input embeddings, and d_k is the dimensionality of the key.
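With Q, K, V, and the key dimensionality as defined here, the standard scaled dot-product attention is:

```latex
\[
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
\]
```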

3.3 Semantic & Visual Similarity Search

Combining CLIP and ViT allows for a comprehensive approach to searching for semantically and visually similar images.

Furthermore, CLIP provides the capability to find images that are semantically related to a given text query or another image, while ViT enhances the ability to recognize patterns and details within the images themselves.

For a comprehensive understanding of CLIP and ViT, please refer to the following papers:

- Radford, A., et al. (2021). Learning Transferable Visual Models From Natural Language Supervision. arXiv preprint arXiv:2103.00020.

- Dosovitskiy, A., et al. (2020). An Image is Worth 16×16 Words: Transformers for Image Recognition at Scale. arXiv preprint arXiv:2010.11929.

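The code snippet below uses a CLIP checkpoint whose image encoder is a ViT (openai/clip-vit-base-patch32) to identify semantically and visually similar images. The initialize_clip_model function loads the model and its processor, while fetch_images retrieves candidate images from Pixabay’s API. The perform_semantic_search function ranks the images by their relevance to a text phrase, and perform_visual_search ranks them by the cosine similarity between their image embeddings and that of a target image.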

import os

os.environ['LC_ALL'] = 'C.UTF-8'
os.environ['LANG'] = 'C.UTF-8'

!pip install sentence-transformers
!pip install -q transformers ipyplot==1.1.0 ftfy

from io import BytesIO

import ipyplot
import requests
import torch
from PIL import Image
from sentence_transformers import util
from transformers import CLIPProcessor, CLIPModel

def initialize_clip_model():
    """Load the pre-trained CLIP model (ViT-B/32 image encoder) and its processor."""
    model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")
    processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")
    return model, processor

def fetch_images(api_key, search_keyword, number_to_retrieve):
    """Query the Pixabay API and return the matching photos as RGB PIL images."""
    # Passing params lets requests URL-encode the search keyword
    params = {"key": api_key, "q": search_keyword.lower(), "image_type": "photo", "safesearch": "true", "per_page": number_to_retrieve}
    response = requests.get("https://pixabay.com/api/", params=params)
    response.raise_for_status()
    output = response.json()
    images = [Image.open(BytesIO(requests.get(item['webformatURL']).content)).convert("RGB") for item in output["hits"]]
    return images

def perform_semantic_search(model, processor, phrase, images, top_k=3):
    """Return the top_k images that best match the text phrase, with their scores."""
    with torch.no_grad():
        inputs = processor(text=[phrase], images=images, return_tensors="pt", padding=True)
        outputs = model(**inputs)
    # logits_per_image has shape (num_images, 1): one image-text score per image
    logits_per_image = outputs.logits_per_image
    values, indices = logits_per_image.squeeze(1).topk(min(top_k, len(images)))
    top_images, top_scores = [], []
    for score, index in zip(values, indices):
        top_images.append(images[index.item()])  # Convert tensor index to integer
        top_scores.append(round(score.item(), 3))
    return top_images, top_scores

def display_images(images, scores):
    print(f"Scores: {scores}")
    ipyplot.plot_images(images, img_width=300)

def perform_visual_search(model, processor, target_image_url, candidate_images, top_k=3):
    """Return the top_k candidates most similar to the target image, with their scores."""
    # Load the target image
    target_image = Image.open(requests.get(target_image_url, stream=True).raw).convert("RGB")

    # Preprocess the target image and candidate images
    target_inputs = processor(images=target_image, return_tensors="pt")
    candidate_inputs = [processor(images=img, return_tensors="pt") for img in candidate_images]

    # Compute embeddings for the target image and candidate images
    with torch.no_grad():
        target_outputs = model.get_image_features(**target_inputs)
        candidate_outputs = [model.get_image_features(**inputs) for inputs in candidate_inputs]

    # Compute cosine similarities between the target and each candidate
    similarities = [util.cos_sim(target_outputs, output).item() for output in candidate_outputs]

    # Sort the candidate images by similarity score, highest first
    sorted_indices = sorted(range(len(similarities)), key=lambda i: similarities[i], reverse=True)
    top_images = [candidate_images[idx] for idx in sorted_indices[:top_k]]
    top_scores = [similarities[idx] for idx in sorted_indices[:top_k]]
    return top_images, top_scores

# Main execution
model, processor = initialize_clip_model()

# Setup search query
pixabay_api_key = "YOUR_PIXABAY_API_KEY"  # Replace with your own Pixabay API key
semantic_search_phrase = "small dog with hat"
pixabay_search_keyword = "dog with hat"
no_to_retrieve = 100

all_images = fetch_images(pixabay_api_key, pixabay_search_keyword, no_to_retrieve)
print("Total number of images retrieved from Pixabay: ", len(all_images))
print("Images retrieved from Pixabay:")
ipyplot.plot_images(all_images, max_images=no_to_retrieve, img_width=150)

print("Images with the highest Semantic Similarity:")
top_images, top_scores = perform_semantic_search(model, processor, semantic_search_phrase, all_images)
display_images(top_images, top_scores)

# URL of the target image for visual similarity search
target_image_url = "https://i.ibb.co/zfmc38w/istockphoto-152514029-612x612.jpg"

# Perform visual similarity search based on the target image
print("Images with the highest Visual Similarity:")
top_visual_images, top_visual_scores = perform_visual_search(model, processor, target_image_url, all_images, top_k=3)
display_images(top_visual_images, top_visual_scores)

Figure. 3: Top matched images for a small dog with a hat, showcasing results from a semantic and visual similarity search using the CLIP and ViT models.

4. Integrated end-to-end Code

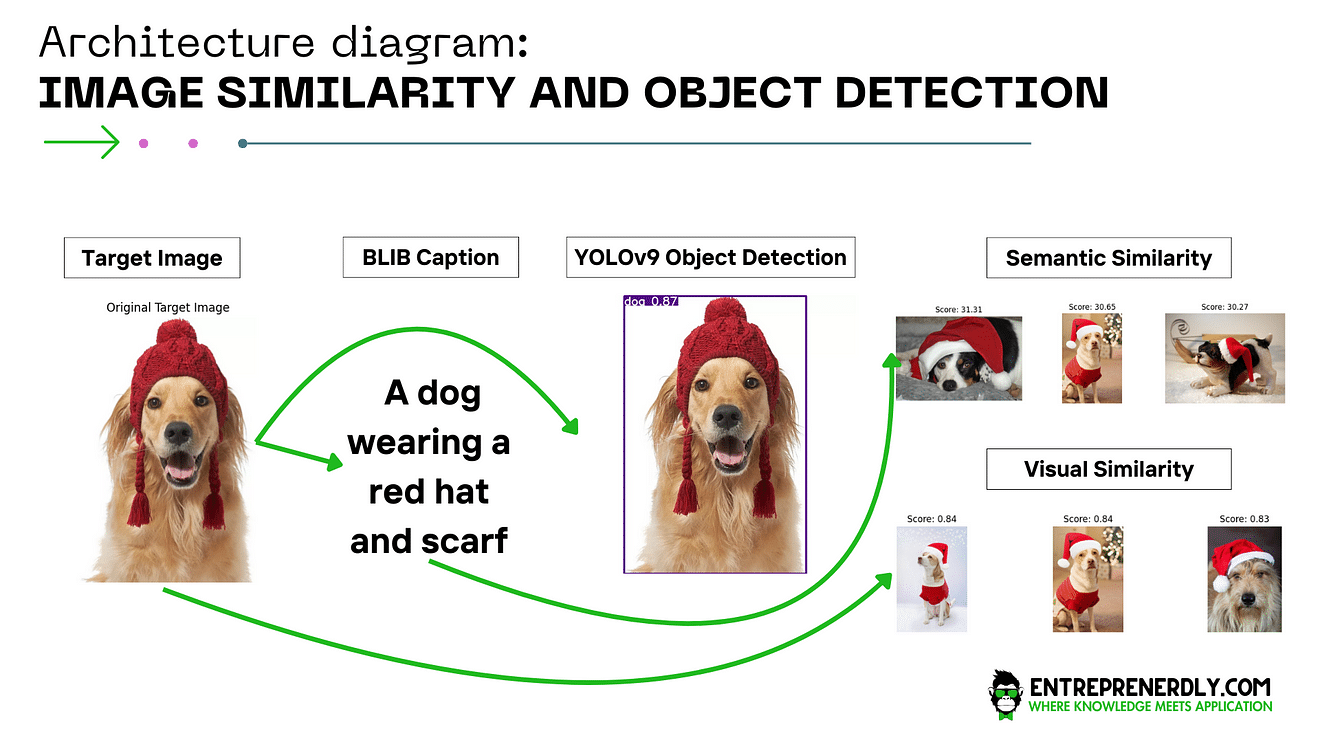

Here, we combine BLIP for image captioning, YOLOv9 for object detection, and CLIP with Vision Transformer (ViT) for semantic and visual similarity searches into one integrated solution.

First, the fetch_images function retrieves a collection of images from Pixabay, providing a dataset for analysis. The search query can be derived from the generated caption.

Please note that while we are using Pixabay here, you could use a much more relevant database of images according to your needs. Let’s proceed.

The initialize_clip_model function prepares the CLIP model for processing these images. Meanwhile, the get_image_caption function uses BLIP to generate a contextual caption for a given image, and the detect_and_display function utilizes YOLOv9 to identify and visualize objects within the image.

Once the images are preprocessed, the perform_searches function kicks in, conducting both semantic and visual searches. It compares the generated captions and visual content of images against the target image, ranking them based on relevance and similarity.

Finally, the display_images function displays the top semantic and visual matches, bringing the NLP and image-processing results together in a single view.
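The data flow described above can be sketched as a single orchestration function. This is a hypothetical outline, not the article's integrated code: analyze_image and its parameters are names invented here, and each stage is passed in as a callable so the sketch runs without any model. In practice you would wrap get_image_caption, detect_and_display, fetch_images, and the search functions (for example with lambdas) to match these simplified signatures.

```python
def analyze_image(image_url, captioner, detector, fetcher,
                  semantic_search, visual_search, top_k=3):
    """Run captioning, detection, and both similarity searches for one image.

    Every stage is an injected callable, so this sketch has no model dependency.
    """
    caption = captioner(image_url)
    detections = detector(image_url)
    candidates = fetcher(caption)  # use the generated caption as the search query
    return {
        "caption": caption,
        "detections": detections,
        "semantic_matches": semantic_search(caption, candidates, top_k),
        "visual_matches": visual_search(image_url, candidates, top_k),
    }

# Stand-in callables with hypothetical outputs, used only to show the data flow.
result = analyze_image(
    "https://example.com/dog.jpg",
    captioner=lambda url: "a small dog wearing a hat",
    detector=lambda url: ["dog", "hat"],
    fetcher=lambda query: ["img_a", "img_b", "img_c", "img_d"],
    semantic_search=lambda text, images, k: images[:k],
    visual_search=lambda url, images, k: images[-k:],
)
print(result["caption"])
```

Injecting the stages keeps the orchestration easy to test and makes it straightforward to swap Pixabay for another image source.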

The architecture can be represented as follows:

Figure. 4: Architecture Diagram for End-to-End Image Caption Generation, Object Detection and Similarity Search.
