Restoring, Colorizing and Inpainting

AI Face Restoration technology enhances and recovers facial images from various states of degradation. This technology restores clear visuals from once-flawed images.

This article discusses how to practically use AI Face Restoration with CodeFormer, a Python tool specialized in complex tasks such as resolution enhancement, colorization, and inpainting.

CodeFormer uses deep learning to analyze and mend defects like blurs and scratches on faces in images, thereby enhancing photo quality.

In this process, AI Face Restoration technology analyzes images, identifies imperfections, and fills in missing or corrupted data while respecting the original photo’s aesthetics.

This article is structured as follows:

- Theoretical Foundations of AI Face Restoration

- Python Implementation with CodeFormer

- Extending to Colorization and Inpainting

1. Theoretical Foundations of Face Restoration

1.1 Overview of Blind AI Face Restoration Challenges

Blind face restoration aims to recover high-quality facial images from degraded inputs when the type and severity of the degradation are unknown. The authors of CodeFormer note that the problem is ill-posed: a single low-quality (LQ) input can correspond to infinitely many plausible high-quality (HQ) outputs, which significantly complicates the restoration task.

1.2 Deep Learning and Codebook Priors

Modern face restoration techniques employ deep learning at their core, specifically convolutional neural networks (CNNs) and, more recently, Transformers, both of which are adept at handling complex image data. In the paper’s approach, the researchers use a learned discrete codebook to reduce the uncertainty of mapping LQ inputs to HQ outputs. This method reconstructs faces with high fidelity from a finite set of ‘visual atoms’ or codes, unlike approaches that rely on continuous priors.

Equation 1. This formula shows how to decode high-quality (HQ) images from low-quality (LQ) inputs using a learned codebook.
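
Written out schematically (a reading of the description here, not necessarily the paper's exact notation), the formula has the form:

```latex
\mathrm{HQ} = \mathrm{Decoder}\big(\mathrm{Codebook}(\mathrm{LQ})\big)
```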

Here, LQ denotes the low-quality input face, 𝐶𝑜𝑑𝑒𝑏𝑜𝑜𝑘 represents the learned discrete codes, and 𝐷𝑒𝑐𝑜𝑑𝑒𝑟 is the function reconstructing the HQ output from these codes.

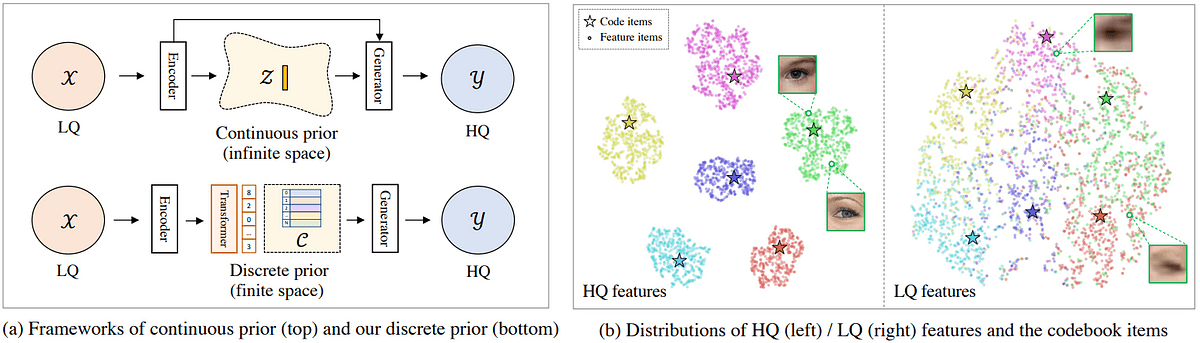

Figure 1 compares the effectiveness of discrete priors with traditional continuous methods. The purpose is to highlight the enhanced fidelity and fewer artifacts of discrete priors.

Figure 1: Face Restoration with Discrete and Continuous Priors. (a) Compares traditional continuous prior frameworks (top) with the discrete codebook prior approach (bottom). (b) Provides a t-SNE visualization of high-quality (HQ) and low-quality (LQ) features alongside codebook items. This shows how the CodeFormer model efficiently bridges the gap between degraded inputs and restored high-quality outputs. Source: https://arxiv.org/abs/2206.11253

1.3 Transformer-Based Code Prediction: CodeFormer

First, CodeFormer trains a vector-quantized autoencoder on HQ faces to learn a discrete codebook. The goal is to capture essential facial features.

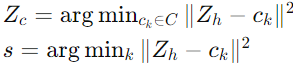

Equation 2. This pair of equations works together within the CodeFormer framework: the first identifies the closest codebook vector (ck) that minimizes the squared Euclidean distance to the high-quality embedded features (Zh). This provides the best matching vector (Zc). The second determines the specific index (s) of this vector within the codebook, which corresponds to the minimum distance. This effectively selects the optimal code representation for the image restoration process.
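
Following that description, the pair of equations can be written as below. The superscript (i, j), marking a spatial position in the feature map, is notation added here as an assumption, since the lookup is performed for each feature vector separately:

```latex
Z_c^{(i,j)} = \arg\min_{c_k \in C} \left\| Z_h^{(i,j)} - c_k \right\|_2^2,
\qquad
s^{(i,j)} = \arg\min_{k} \left\| Z_h^{(i,j)} - c_k \right\|_2^2
```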

Where 𝑍ℎ represents embedded HQ features, 𝐶 is the codebook, 𝑐𝑘 are codebook vectors, and 𝑠 is the sequence of selected codes. The trained autoencoder thus reduces the high-dimensional image space to a manageable set of codes that describe facial features effectively.
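
To make the nearest-neighbor lookup concrete, here is a minimal NumPy sketch. It is not CodeFormer's implementation: the function name `quantize`, the toy codebook, and the feature values are all hypothetical and chosen only to illustrate the matching step.

```python
import numpy as np


def quantize(z_h: np.ndarray, codebook: np.ndarray):
    """Map each feature vector to its nearest codebook entry.

    z_h:      (n, d) array of embedded HQ features, one row per spatial location.
    codebook: (K, d) array of code vectors c_k.
    Returns (z_c, s): the quantized features (n, d) and the code indices (n,).
    """
    # Squared Euclidean distance between every feature and every code: shape (n, K)
    diff = z_h[:, None, :] - codebook[None, :, :]
    dist = np.sum(diff ** 2, axis=-1)
    s = np.argmin(dist, axis=1)  # index of the closest code for each feature
    z_c = codebook[s]            # the matching code vectors
    return z_c, s


# Toy example (illustrative values): 3 codes of dimension 2, 3 feature vectors
codebook = np.array([[0.0, 0.0], [1.0, 1.0], [4.0, 4.0]])
z_h = np.array([[0.1, -0.2], [3.5, 4.2], [0.9, 1.3]])
z_c, s = quantize(z_h, codebook)
print(s)  # [0 2 1]
```

Each feature is replaced by a code from a fixed, finite set, so the decoder only ever sees combinations of codes it was trained on.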

1.4 Restoration with Transformer-Based Prediction

Subsequently, a Transformer module processes the encoded features and predicts the sequence of codes that best restores the original HQ face. Rather than simply reconstructing from the LQ input, the prediction takes into account both the global image context and the local feature degradation:

Equation 3. This equation describes one step of the Transformer's attention mechanism. The next state (Xs+1) is calculated as a weighted sum of value vectors (Vs), adjusted by the softmax of the product of query (Qs) and key (Ks) matrices, plus the previous state (Xs).
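
Written out from that description, with the transpose of the key matrix made explicit (an assumption about notation), the step reads:

```latex
X_{s+1} = \mathrm{Softmax}\!\left(Q_s K_s^{\top}\right) V_s + X_s
```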

Where 𝑋𝑠 is the state of the Transformer at step 𝑠, and 𝑄𝑠, 𝐾𝑠, and 𝑉𝑠 are the query, key, and value matrices derived from 𝑋𝑠, respectively.
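
The update can be sketched in a few lines of NumPy. This is a simplified, single-head illustration under stated assumptions: the projection matrices `w_q`, `w_k`, and `w_v` are hypothetical random stand-ins for learned weights, and the scaling factor that standard Transformers apply inside the softmax is omitted to match the description above.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    """Row-wise softmax, shifted for numerical stability."""
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def attention_step(x_s, w_q, w_k, w_v):
    """One self-attention update with a residual connection.

    x_s: (n, d) state, one row per token (spatial location).
    Returns the next state (n, d) and the attention weights (n, n).
    """
    q, k, v = x_s @ w_q, x_s @ w_k, x_s @ w_v  # queries, keys, values from x_s
    weights = softmax(q @ k.T)                 # how much each token attends to the others
    return weights @ v + x_s, weights          # weighted sum of values plus previous state


rng = np.random.default_rng(0)
n, d = 4, 8  # illustrative sizes
x_s = rng.normal(size=(n, d))
w_q, w_k, w_v = (rng.normal(size=(d, d)) * 0.1 for _ in range(3))
x_next, weights = attention_step(x_s, w_q, w_k, w_v)
print(x_next.shape)  # (4, 8)
```

Because every row of `weights` spans all tokens, each location can draw on the whole face, which is what lets the prediction use global context.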

Figure 2: AI Face Restoration Process Using CodeFormer. (a) Illustrates the high-quality (HQ) encoding process, which uses a vector-quantized autoencoder to create a discrete codebook that simplifies image data into essential facial features. Nearest-neighbor matching is used to select the best match from the codebook. (b) Depicts the low-quality (LQ) restoration workflow, where a Transformer predicts the best sequence of codes from the codebook to reconstruct the HQ image. Additionally, a Controllable Feature Transformation (CFT) module adjusts the information flow, enhancing the quality and fidelity of the restored image based on the degradation level of the input. Source: https://arxiv.org/abs/2206.11253

1.5 AI Face Restoration Through Feature Transformation

Furthermore, a controllable feature transformation module boosts the adaptability of AI Face Restoration by adjusting the influence of LQ features on the output. This mechanism tailors the restoration process to different degrees of input degradation. The transformation can be mathematically expressed as:

Equation 4. This formula describes the controllable feature transformation: the decoder features (Fd) are scaled and shifted by the parameters α and β, which are predicted from the encoder features (Fe) together with Fd, and the result is multiplied by the weight factor (w) and added back to Fd to give the output features (F'). The weight factor thus controls the influence of the encoded features on the transformation.
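
In the form given in the CodeFormer paper, as we read it, the transformation is written as follows. Here c denotes concatenation and P_θ a small stack of convolutional layers; both symbols come from the paper and are not defined elsewhere in this article:

```latex
F' = F_d + \left(\alpha \odot F_d + \beta\right) \times w,
\qquad
\alpha, \beta = \mathcal{P}_\theta\big(c(F_d, F_e)\big)
```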

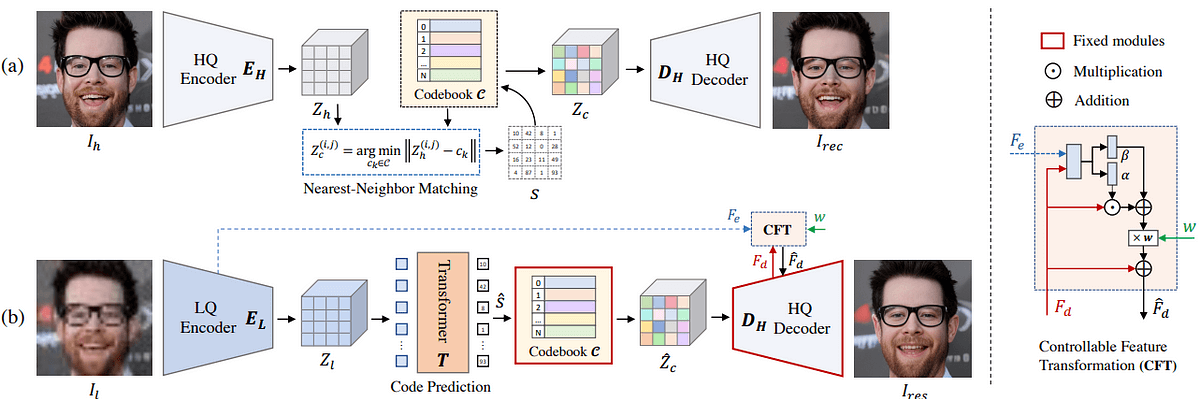

Where 𝐹𝑑 and 𝐹𝑒 represent the decoder and encoder features, respectively. α and β are affine transformation parameters. Finally, w is a weight factor that modulates the contribution of encoded features. Figure 3 illustrates how adjusting 𝑤 influences the balance between fidelity and quality.
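
The effect of w is easy to see in a small numerical sketch. The version below assumes the form used in the CodeFormer paper, in which the affine parameters are applied to the decoder features and the result is added back as a residual scaled by w. In the real model α and β are predicted by convolutional layers from the encoder and decoder features; here they are passed in as fixed, hypothetical arrays.

```python
import numpy as np


def controllable_feature_transform(f_d, alpha, beta, w):
    """Return F_d + (alpha * F_d + beta) * w, computed element-wise.

    f_d:   decoder features.
    alpha: scale, beta: shift (placeholders for values predicted from F_e and F_d).
    w:     weight in [0, 1]; 0 ignores the encoder-derived adjustment entirely.
    """
    if not 0.0 <= w <= 1.0:
        raise ValueError("w is expected to lie in [0, 1]")
    return f_d + (alpha * f_d + beta) * w


# Illustrative values only
f_d = np.ones((2, 2))
alpha = np.full((2, 2), 0.5)
beta = np.full((2, 2), 0.25)

for w in (0.0, 0.5, 1.0):
    print(w, controllable_feature_transform(f_d, alpha, beta, w)[0, 0])
# 0.0 1.0
# 0.5 1.375
# 1.0 1.75
```

With w = 0 the output equals the decoder features, so the result relies only on the codebook prior. As w grows, more information from the LQ input is blended in.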

Figure 3: Adjusting Quality and Fidelity in AI Face Restoration. This image series shows the effect of varying the parameter 'w' in CodeFormer's feature transformation module, from 'w = 0', which emphasizes quality, to 'w = 1', which emphasizes fidelity (identity). Source: https://arxiv.org/abs/2206.11253

1.6 Demonstrating Effectiveness and Applications

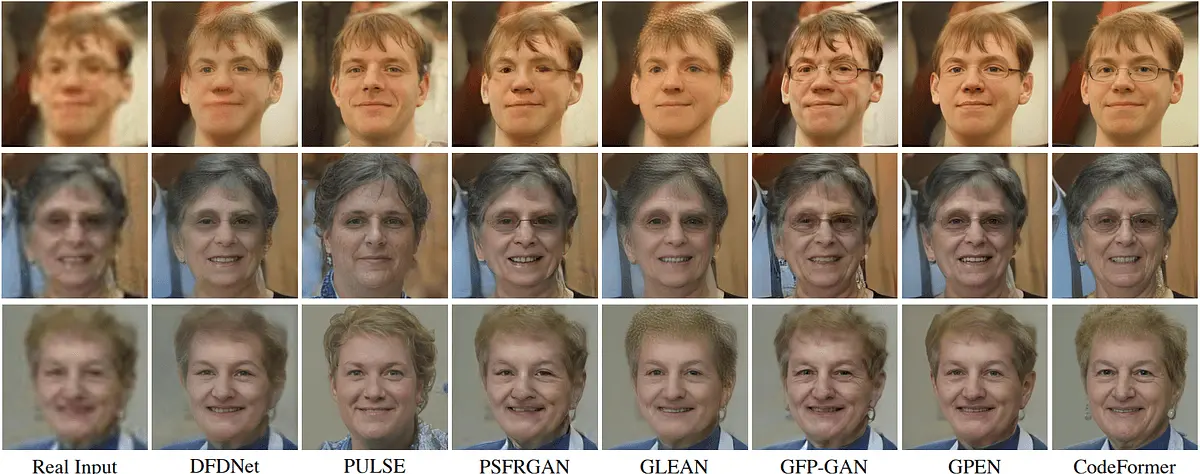

The figure below compares results across multiple methods. It demonstrates the superior quality and fidelity that CodeFormer achieves relative to methods that rely on continuous generative priors. Moreover, CodeFormer's robustness to various degradations shows its efficacy and highlights its broad range of potential applications.

Figure 4: Comparative Analysis of Face Restoration Techniques. This image array compares the restoration outcomes from various advanced methods including DFDNet, PULSE, PSFRGAN, GLEAN, GFP-GAN, GPEN, and CodeFormer. Each row displays results from different input conditions, showcasing CodeFormer's capability to deliver high-quality and faithful restorations across varying levels of degradation. Source: https://arxiv.org/abs/2206.11253

If you’d like to know more about the CodeFormer methodology, please refer to the original paper published by the authors:

Zhou, S., Zhang, J., Wang, X., Zuo, W., & Zhang, L. (2022). Towards Robust Blind Face Restoration with Codebook Lookup Transformer. arXiv preprint arXiv:2206.11253. Retrieved from https://arxiv.org/abs/2206.11253

2. Python Implementation

To proceed, ensure you have access to a decent GPU for this task. Moreover, we recommend using Google Colab. This environment provides access to a Tesla T4 GPU.
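
Before installing anything, it can be worth confirming that a GPU is actually attached to the session. The helper below is a small sketch of our own (the name `gpu_summary` is hypothetical) that queries `nvidia-smi` if it is available. In Colab, a GPU has to be selected under Runtime > Change runtime type.

```python
import shutil
import subprocess


def gpu_summary() -> str:
    """Return the GPU name(s) reported by nvidia-smi, or a fallback message."""
    if shutil.which("nvidia-smi") is None:
        return "No NVIDIA driver found (nvidia-smi is not on PATH)."
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0:
        return "nvidia-smi failed: " + result.stderr.strip()
    return result.stdout.strip() or "nvidia-smi reported no GPUs."


print(gpu_summary())
```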

Below, you’ll find code that you can run directly in Google Colab to set up the environment and prepare for the restoration tasks.

2.1 Setting Up the Environment

The initial setup requires preparing a working directory and installing necessary dependencies:

- Firstly, navigate to the primary working directory in a Google Colab environment.

- Next, ensure a clean setup by removing any existing CodeFormer directories.

- Then, clone the CodeFormer repository from GitHub to acquire the latest version.

- Install all dependencies listed in ‘requirements.txt’ to set up the Python environment correctly.

- Additionally, install the ‘basicsr’ library, essential for super-resolution tasks. It provides various utilities for image and video enhancement that you’ll use alongside CodeFormer to improve the resolution and quality of images.

- Download necessary pre-trained models for face library handling and the main CodeFormer model using provided scripts. Specifically, ‘facelib’ is crucial for handling facial features accurately during the restoration process. It includes pre-trained models that assist in detecting and analyzing facial components.

%%capture

# Hide installation output (the %%capture cell magic must be the first line of the cell)

# Change the working directory to '/content' for the Google Colab

%cd /content

# Remove any existing CodeFormer directory to ensure a clean setup

!rm -rf CodeFormer

# Clone the CodeFormer repository from GitHub and get the latest version of the code

!git clone https://github.com/sczhou/CodeFormer.git

# Change the working directory to the newly cloned CodeFormer folder

%cd CodeFormer

# Install all the required Python libraries listed in 'requirements.txt'. This file contains a list of packages necessary for the project to run.

!pip install -r requirements.txt

# Install the 'basicsr' library, which is a basic super-resolution toolkit needed for the CodeFormer to function.

# The 'develop' setup means that the library is set up in a way that allows for easy modification and testing.

!python basicsr/setup.py develop

# Download necessary models for face library handling from a script provided in the repository. These models are essential for facial feature analysis and manipulation.

!python scripts/download_pretrained_models.py facelib

# Download the pre-trained CodeFormer model which is trained specifically for the task of face restoration. This allows the use of advanced AI techniques to improve the quality of face images directly.

!python scripts/download_pretrained_models.py CodeFormer

2.2 Loading Images to Restore

- Initially, import the necessary Python libraries for file handling.

- Subsequently, prepare an upload directory to store images, utilizing Google Colab’s file upload utility for handling the process.

- Move uploaded files to the designated directory for batch inference.

# Import necessary libraries

import os # Library for interacting with the operating system

from google.colab import files # Special module from Google Colab for file upload/download

import shutil # Library for high-level file operations

# Set the path for the upload folder where the uploaded images will be stored

upload_folder = 'inputs/user_upload'

# Check if the directory already exists

if os.path.isdir(upload_folder):

    shutil.rmtree(upload_folder) # If the directory exists, remove it and all its contents

os.mkdir(upload_folder) # Create a new directory with the path specified in upload_folder

# Use the Colab function to upload files. This will prompt the user to upload files from their local machine.

uploaded = files.upload()

# Loop through the files that were uploaded

for filename in uploaded.keys():

    dst_path = os.path.join(upload_folder, filename) # Create the full destination path

    print(f'move {filename} to {dst_path}') # Print a message about the file movement

    shutil.move(filename, dst_path) # Move the file from its initial upload path to the specified directory

2.3 Running Face Restoration Inference

In this section, you will configure and execute the face restoration process using CodeFormer, with options to enhance the background and apply upsampling to the restored faces.

This allows you to tailor the restoration to specific quality requirements or to focus on particular aspects of the image, such as facial details or the background.

Setting Up Inference Parameters

Before running the restoration, you need to set several parameters that dictate how the restoration process behaves:

- Fidelity and Quality Balance (CODEFORMER_FIDELITY): This parameter helps you find the right balance between maintaining the original identity in the image (higher fidelity) and improving the visual quality (lower fidelity). Adjusting this slider allows you to prioritize either aspect according to your specific needs.

- Background Enhancement (BACKGROUND_ENHANCE): This toggle enables the use of the Real-ESRGAN AI model to enhance the background of the images. Real-ESRGAN is specifically designed to improve image resolution and detail. Combining CodeFormer with Real-ESRGAN is especially useful for images where the background is as significant as the foreground.

- Face Upsampling (FACE_UPSAMPLE): By enabling this option, the restored faces undergo additional upsampling to increase their resolution for high-quality outputs.
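
The three parameters above translate into command-line options for the repository's inference script. The sketch below builds that command as a list of arguments. The helper `build_inference_command` is our own hypothetical wrapper, the value 0.7 is only an illustrative starting point, and the script name and flags (`-w`, `--input_path`, `--bg_upsampler`, `--face_upsample`) reflect our understanding of the CodeFormer repository, so it is worth confirming them with `python inference_codeformer.py --help`.

```python
import shlex


def build_inference_command(fidelity, background_enhance, face_upsample,
                            input_path="inputs/user_upload"):
    """Assemble the argument list for CodeFormer's inference script."""
    if not 0.0 <= fidelity <= 1.0:
        raise ValueError("fidelity must be between 0 and 1")
    cmd = ["python", "inference_codeformer.py",
           "-w", str(fidelity),          # fidelity/quality balance
           "--input_path", input_path]   # folder holding the uploaded images
    if background_enhance:
        cmd += ["--bg_upsampler", "realesrgan"]  # enhance the background with Real-ESRGAN
    if face_upsample:
        cmd.append("--face_upsample")            # extra upsampling of restored faces
    return cmd


CODEFORMER_FIDELITY = 0.7   # illustrative value
BACKGROUND_ENHANCE = True
FACE_UPSAMPLE = False

cmd = build_inference_command(CODEFORMER_FIDELITY, BACKGROUND_ENHANCE, FACE_UPSAMPLE)
print(shlex.join(cmd))
# python inference_codeformer.py -w 0.7 --input_path inputs/user_upload --bg_upsampler realesrgan
```

To execute it from the CodeFormer directory, the list can be passed to `subprocess.run(cmd, check=True)`. Building the command as a list avoids quoting problems if the input path contains spaces.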
