An In-Depth End-to-End Tutorial for Structuring Raw Data into Knowledge-Driven AI Chatbots

In this article, we combine the structured, relationship-focused architecture of knowledge graphs with the language understanding abilities of LLMs.

Knowledge graphs contribute a layer of structured data, rich in relationships and context, enabling LLMs to navigate and interpret information pools with higher accuracy and less hallucination.

Our objective is to provide readers with a comprehensive, end-to-end guide to building their own knowledge graphs with the help of LLMs.

We will demonstrate how to 1) transform unstructured text data into a structured and queryable format, 2) index the data into a knowledge graph using llama-index and Neo4j, and 3) query the database in natural language with Text2Cypher.

Finally, we will visualize the query results as an interactive network graph with Pyvis, alongside a natural language response. We will use complex, unstructured supply chain data to derive entities and relationships.

2. Key Fundamental Concepts

2.1 Knowledge Graphs and Cypher

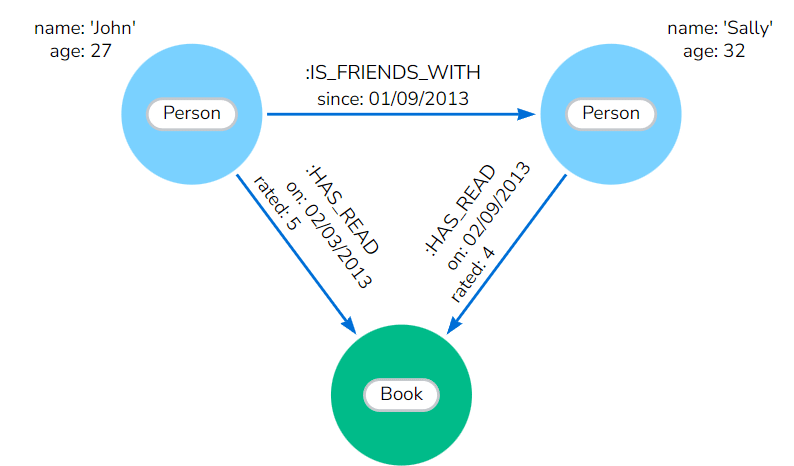

Knowledge graphs store information in an interconnected, graph-like format. They consist of nodes (representing entities like people, places, things) and edges (representing relationships between these entities).

This structure is highly effective for representing and navigating complex relationships and hierarchies, which makes it invaluable for tasks like semantic search.

When it comes to interacting with knowledge graphs stored in Neo4j, Cypher is the standard query language. Unlike table-focused SQL, Cypher emphasizes pattern matching to navigate data relationships efficiently.

For example, for the graph database above, we could query to return all friends of a person named ‘John’:

MATCH (john:Person {name: 'John'})-[:IS_FRIENDS_WITH]->(friends)
RETURN john, friends

Output:

john: {
  name: "John",
  age: 27
},
friends: {
  name: "Sally",
  age: 32,
  IS_FRIENDS_WITH: {
    since: "01/09/2013"
  }
}
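To make the pattern-matching idea concrete without a running database, here is a minimal pure-Python sketch of what the query above does. The in-memory layout (`nodes`, `edges`) and the helper `match_outgoing` are hypothetical and only illustrate the concept; Neo4j does not work this way internally.

```python
# Toy property graph mirroring the John/Sally example (hypothetical layout).
nodes = {
    "john": {"label": "Person", "name": "John", "age": 27},
    "sally": {"label": "Person", "name": "Sally", "age": 32},
}
# Each edge: (source node id, relationship type, target node id, properties)
edges = [
    ("john", "IS_FRIENDS_WITH", "sally", {"since": "01/09/2013"}),
]


def match_outgoing(label, props, rel_type):
    """Return (source, relationship properties, target) for every edge that
    fits the pattern (source:label {props})-[:rel_type]->(target)."""
    results = []
    for src, rel, dst, rel_props in edges:
        source = nodes[src]
        if rel != rel_type or source["label"] != label:
            continue
        if all(source.get(key) == value for key, value in props.items()):
            results.append((source, rel_props, nodes[dst]))
    return results


matches = match_outgoing("Person", {"name": "John"}, "IS_FRIENDS_WITH")
for john, rel_props, friend in matches:
    print(john["name"], "is friends with", friend["name"], "since", rel_props["since"])
```

The Cypher version expresses the same pattern declaratively in a single line, which is the main appeal of the language for relationship-heavy data.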

However, a notable challenge is that far fewer developers know Cypher's syntax and capabilities than know SQL.

To bridge this gap, our tech stack will include Text2Cypher — a tool that translates natural language questions into Cypher queries.

2.2 Large Language Models

LLMs bridge the gap between natural language and graph queries by translating questions into database queries for knowledge graphs and by converting database responses into readable narratives.

LLMs will also help process raw text into structured entity-relationship data, which is crucial for indexing into new knowledge graphs.

2.3 Graph Retrieval Augmented Generation

The Graph RAG process involves pinpointing relevant entities and connections from a prompt, then sourcing related subgraphs from the knowledge graph to inform the LLM’s context.

This approach is especially valuable in domains demanding precision and specialized knowledge, because it grounds the LLM’s answers in the structured data of the knowledge graph.

Graph RAG thus helps overcome some of the limitations inherent in LLMs, such as their tendency to produce convincing yet inaccurate content due to the lack of up-to-date or private information in their training datasets.
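The retrieval idea can be sketched in a few lines of plain Python. Everything here is an assumption made for illustration: the triples are invented, entity detection is a naive substring match, and `find_entities`, `subgraph`, and `build_context` are hypothetical helpers rather than part of any library.

```python
# Toy knowledge graph as (subject, predicate, object) triples; all data is made up.
TRIPLES = [
    ("Acme Chips", "SUPPLIES", "SureSource Inc."),
    ("Acme Chips", "LOCATED_IN", "Taiwan"),
    ("SureSource Inc.", "MANUFACTURES", "Laptops"),
    ("Globex Logistics", "SHIPS_FOR", "SureSource Inc."),
    ("Initech", "LOCATED_IN", "Germany"),
]


def find_entities(prompt, triples):
    """Naive entity linking: keep graph entities whose name appears in the prompt."""
    entities = {s for s, _, _ in triples} | {o for _, _, o in triples}
    lowered = prompt.lower()
    return {entity for entity in entities if entity.lower() in lowered}


def subgraph(seeds, triples, hops=1):
    """Collect every triple within `hops` steps of the seed entities."""
    frontier, kept = set(seeds), []
    for _ in range(hops):
        reached = set()
        for triple in triples:
            s, _, o = triple
            if (s in frontier or o in frontier) and triple not in kept:
                kept.append(triple)
                reached.update((s, o))
        frontier |= reached
    return kept


def build_context(prompt, triples):
    """Format the retrieved subgraph as plain text to place in the LLM prompt."""
    facts = subgraph(find_entities(prompt, triples), triples)
    lines = [f"{s} -[{p}]-> {o}" for s, p, o in facts]
    return "Known facts:\n" + "\n".join(lines) + f"\n\nQuestion: {prompt}"


question = "Where is Acme Chips located?"
context = build_context(question, TRIPLES)
print(context)
```

Only facts connected to the entity named in the question reach the prompt, which is how the graph narrows the context the LLM has to reason over.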

3. Building a KG RAG System with LLMs

3.1 Text2Cypher

Text2Cypher translates a natural language question such as “What’s the relationship between Foo and Bar?” into an equivalent Cypher query.

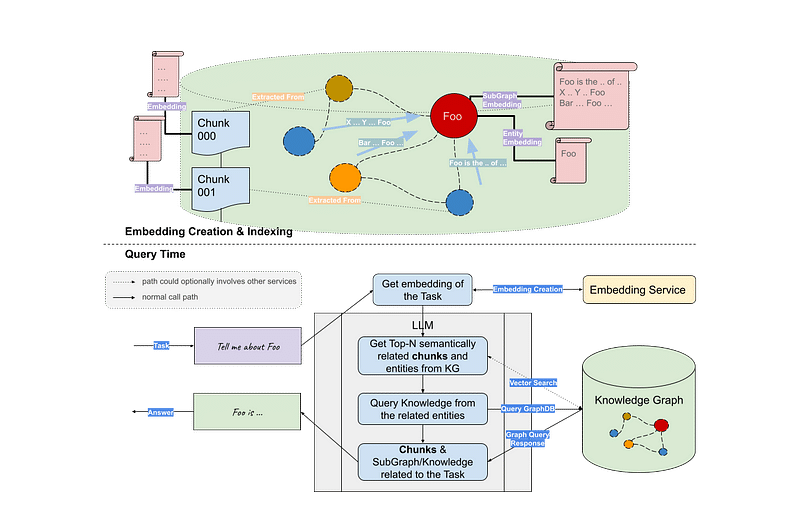

Figure 2: This diagram illustrates how a user's natural language question is interpreted by an LLM and converted into a structured graph database query, which in turn results in a clear, informative response from the knowledge graph.

This feature is integrated into the llama_index library and lets users phrase their queries in plain English, which are then automatically converted into Cypher queries.
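Conceptually, a Text2Cypher step boils down to prompting an LLM with the graph schema and the user's question. The sketch below shows that shape only; it is not the llama_index implementation. The schema string, the prompt template, and `fake_llm` (a stand-in that returns a canned answer so the example runs offline) are all assumptions.

```python
from typing import Callable

# Hypothetical schema description; a real system would read it from the database.
SCHEMA = "(:Person {name, age})-[:IS_FRIENDS_WITH {since}]->(:Person)"

PROMPT_TEMPLATE = (
    "You translate questions into Cypher.\n"
    "Graph schema: {schema}\n"
    "Question: {question}\n"
    "Return only the Cypher query."
)


def text_to_cypher(question: str, llm: Callable[[str], str], schema: str = SCHEMA) -> str:
    """Build the prompt, call the model, and return the generated query text."""
    prompt = PROMPT_TEMPLATE.format(schema=schema, question=question)
    return llm(prompt).strip()


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs without an API key."""
    return "MATCH (p:Person {name: 'John'})-[:IS_FRIENDS_WITH]->(f) RETURN f.name\n"


cypher = text_to_cypher("Who are John's friends?", fake_llm)
print(cypher)
```

In practice the generated query should be treated as untrusted output: running it with a read-only database user is a sensible precaution.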

3.2 Building a Knowledge Graph with LLMs

A knowledge graph can be built from a trove of raw text data, and LLMs can significantly streamline that construction.

The procedure unfolds as follows:

- Data Acquisition: LLMs parse raw data, identifying key entities and relationships for the knowledge graph.

- Knowledge Extraction: Analyzing data, LLMs extract and categorize entities, relationships, and attributes to form graph nodes and edges.

- Transformation: LLMs convert extracted knowledge into a graph-database-ready format, potentially creating intermediary commands for node and relationship creation.

- Loading: The processed data is loaded into the knowledge graph, establishing a network that represents complex data interrelations.

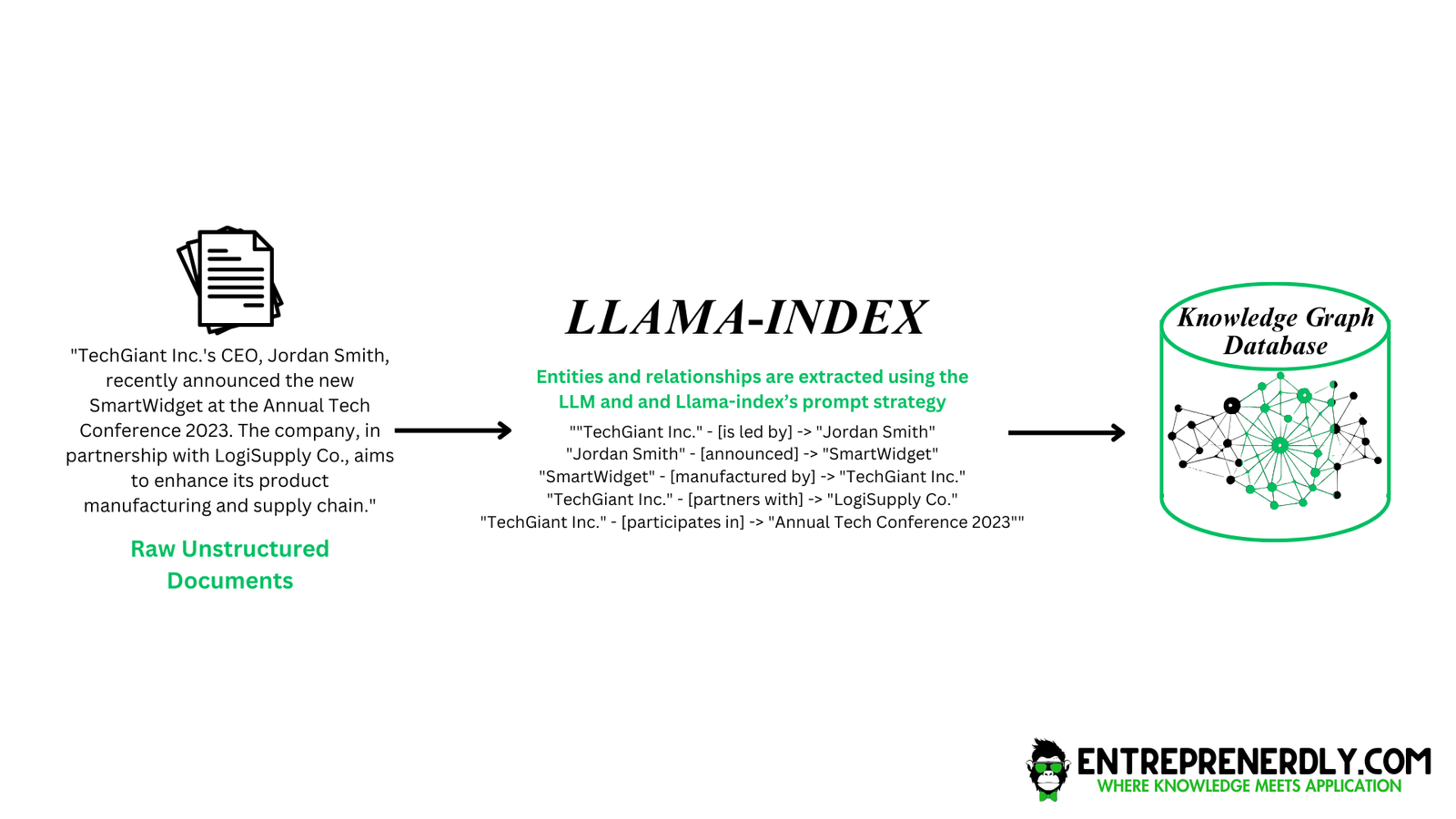

Figure 3: LLM-Enhanced ETL Process: This diagram depicts the role of a fine-tuned LLM in orchestrating an ETL pipeline, from extracting entities and relationships from raw data to structuring them into a knowledge graph.

Throughout this process, the LLM serves not only as an extractor of information but also as a decision-maker that refines the extraction approach, so that the data is transformed in a way that supports insightful querying and context-aware analysis.
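As an illustration of the transformation step, the sketch below turns extracted triplets into Cypher `MERGE` statements. The `Entity` label, the `id` property, and both helper names are assumptions chosen for this example, and the triplets are invented. Node values are passed as query parameters, while the relationship type has to be sanitized and inlined because Cypher does not accept a parameter in that position.

```python
import re


def to_rel_type(predicate: str) -> str:
    """Normalize a free-text predicate into a Cypher-safe relationship type."""
    cleaned = re.sub(r"[^A-Za-z0-9]+", "_", predicate).strip("_").upper()
    if not cleaned:
        return "RELATED_TO"
    # Unquoted identifiers may not start with a digit, so add a prefix.
    return f"REL_{cleaned}" if cleaned[0].isdigit() else cleaned


def triplet_to_cypher(subject: str, predicate: str, obj: str):
    """Return a (query, parameters) pair that upserts one triplet."""
    query = (
        "MERGE (s:Entity {id: $subject}) "
        "MERGE (o:Entity {id: $object}) "
        f"MERGE (s)-[:{to_rel_type(predicate)}]->(o)"
    )
    return query, {"subject": subject, "object": obj}


# Example triplets of the kind an LLM extraction step might emit (made up here).
extracted = [
    ("Acme Chips", "supplies to", "SureSource Inc."),
    ("SureSource Inc.", "headquartered in", "Austin"),
]
statements = [triplet_to_cypher(*triplet) for triplet in extracted]
for query, params in statements:
    print(query, params)
```

Using `MERGE` rather than `CREATE` keeps the load idempotent: re-running the pipeline on the same text does not duplicate nodes or relationships.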

3.3 General Graph RAG Architecture

The diagram below illustrates the operational flow of a Graph RAG system. The architecture starts with the input task or question and proceeds as follows:

- Task Embedding: Converts natural language tasks into vector embeddings to capture semantic meanings.

- Retrieval from KG: Searches the Knowledge Graph for the most relevant data segments using the task embedding.

- Subgraph Construction: Builds a focused subgraph from the Knowledge Graph based on the retrieved information.

- Answer Generation: The LLM uses the subgraph to formulate context-aware responses.

- Chunk Embedding and Response: Embeds answer components for semantic alignment with the task embedding.

This graph-centric approach not only augments the LLM’s understanding by providing it with a rich set of relationship-based context but also streamlines the retrieval process, making it more efficient and precise.
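The embedding and retrieval steps above can be sketched as follows. This is a toy under stated assumptions: `embed` is a bag-of-words counter standing in for a real embedding model, the triples are invented, and `retrieve` is a hypothetical helper, so the ranking only demonstrates the mechanics.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase bag-of-words counts (a real system would use a model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Made-up triples standing in for the knowledge graph.
TRIPLES = [
    ("Acme Chips", "supplies", "semiconductors"),
    ("Globex Logistics", "ships", "laptops"),
    ("Acme Chips", "located in", "Taiwan"),
]


def retrieve(task: str, triples, top_k: int = 2):
    """Rank triples by similarity to the task embedding and keep the best matches."""
    task_vec = embed(task)
    scored = [(cosine(task_vec, embed(" ".join(t))), t) for t in triples]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [triple for score, triple in scored[:top_k] if score > 0]


task = "Which company supplies semiconductors"
sub = retrieve(task, TRIPLES)
prompt = "Context:\n" + "\n".join(" ".join(t) for t in sub) + f"\n\nTask: {task}"
print(prompt)
```

The resulting `prompt` is what would be handed to the LLM in the answer generation step.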

4. Python Implementation

4.1 Setting Up Credentials and Libraries

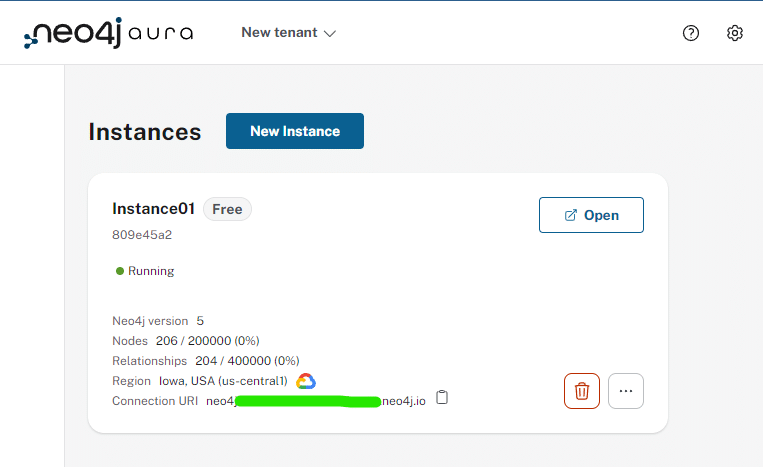

To get started with Neo4j and OpenAI, we’ll need to create an account and set up the Python environment:

1. Create an account on Neo4j Aura by visiting their signup page. Choose the ‘Free’ tier for small projects, which provides 1 GB of memory and enough nodes and relationships to get started.

2. After the instance launches, Neo4j Aura provides the credentials. Remember to save the password securely, as it won’t be displayed again.

3. For OpenAI, we’ll need to obtain an API key by signing up on the OpenAI API platform.

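4. In Python, ensure you have the necessary libraries installed. The imports below use the llama_index module layout from before version 0.10, Neo4jGraphStore relies on the neo4j driver, and the optional token count uses tiktoken. If anything is missing, use the following command:

!pip install requests "llama-index<0.10" pyvis openai neo4j tiktoken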

5. Import the libraries and set up the credentials in the Python environment. Replace the placeholders with the actual credentials.

import logging
import os
import sys

import requests
from IPython.display import Markdown, display
from pyvis.network import Network

from llama_index import (
    KnowledgeGraphIndex,
    LLMPredictor,
    ServiceContext,
    SimpleDirectoryReader,
    global_service_context,
)
from llama_index.graph_stores import Neo4jGraphStore
from llama_index.llms import OpenAI
from llama_index.query_engine import KnowledgeGraphQueryEngine
from llama_index.storage.storage_context import StorageContext

# Set the OpenAI API key (paste your key between the quotes)
os.environ["OPENAI_API_KEY"] = ""

# Neo4j credentials provided by Neo4j Aura
username = "neo4j"
password = ""
url = ""
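If you prefer not to paste secrets into a notebook, one option is to read them from environment variables. The sketch below is only a suggestion: the variable names (`NEO4J_USERNAME`, `NEO4J_PASSWORD`, `NEO4J_URI`) and the helper `load_neo4j_credentials` are hypothetical, and the demonstration uses a plain dictionary with placeholder values instead of the real environment.

```python
import os


def load_neo4j_credentials(env=os.environ):
    """Read connection settings from environment variables.

    The variable names are hypothetical; use whatever your setup defines.
    """
    creds = {
        "username": env.get("NEO4J_USERNAME", "neo4j"),
        "password": env.get("NEO4J_PASSWORD", ""),
        "url": env.get("NEO4J_URI", ""),
    }
    missing = [key for key, value in creds.items() if not value]
    if missing:
        raise ValueError(f"Missing Neo4j settings: {', '.join(missing)}")
    return creds


# Demonstration with an explicit mapping and placeholder values.
example_env = {
    "NEO4J_PASSWORD": "example-password",
    "NEO4J_URI": "neo4j+s://example.invalid",
}
creds = load_neo4j_credentials(example_env)
print(creds["username"], creds["url"])
```

Failing early on a missing value gives a clearer error than a connection failure later in the pipeline.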

4.2 Raw Data and Preprocessing

We focus now on the raw data for building our knowledge graph. The data we’re working with is fictional, yet mirrors the complexities of a real-world Supply Chain. Created with an LLM, it represents ‘SureSource Inc.’, a diversified tech firm with a global presence and an extensive network of over 1,000 partners.

The dataset covers various elements of their operations, from semiconductor sourcing to partner relationships across multiple countries and components. It serves as the foundation for our knowledge graph.

4.2.1 Retrieve Raw Data

The code below downloads the supply chain .txt file from Google Drive using a direct link. It creates a designated directory for data storage if it doesn’t exist, then downloads the file and saves it into this directory.

The SimpleDirectoryReader is a class from the llama_index package used to read and load text data from a specified directory into memory. Here it reads the files in the ‘data’ directory, which contains our single .txt file.

To index additional .txt files, place them in the ‘data’ folder. The reader returns documents, a list of document objects that can be used for building or updating the knowledge graph index.

# Google Drive file ID and direct-download URL
file_id = '1QKKTT_dSdAM4b2XBx4R-HFCCStc3u3_R'
download_url = f"https://drive.google.com/uc?id={file_id}&export=download"

# Directory path where the file will be saved
directory_path = 'data'
file_path = os.path.join(directory_path, 'SureSource Inc Supply Chain.txt')

# Make sure the directory exists, otherwise create it
os.makedirs(directory_path, exist_ok=True)

# Download the file and write it to disk only if the request succeeded
response = requests.get(download_url)
if response.status_code == 200:
    with open(file_path, 'wb') as file:
        file.write(response.content)
    print("File downloaded successfully.")
else:
    print(f"Failed to download file: {response.status_code}")

# Create the documents object
documents = SimpleDirectoryReader(directory_path).load_data()

4.2.2 Count Tokens (Optional)

This optional step informs us about the token count of our text data, which is valuable for configuring our LLM’s service context. Knowing the number of tokens helps us make informed decisions about the chunk_size and max_triplets_per_chunk parameters in the next section.

These parameters directly affect how efficiently and effectively the LLM processes the knowledge graph. A larger token count might require adjusting these values to keep building and querying the graph performant.

# Count the number of tokens in the document
import tiktoken

def num_tokens_from_string(string: str, encoding_name: str) -> int:
    """Returns the number of tokens in a text string."""
    encoding = tiktoken.get_encoding(encoding_name)
    num_tokens = len(encoding.encode(string))
    return num_tokens

# Function usage: build the path portably instead of hard-coding Windows separators
file_path = os.path.join("data", "SureSource Inc Supply Chain.txt")
with open(file_path, 'r', encoding='utf-8') as file:
    text_content = file.read()

print(num_tokens_from_string(text_content, "cl100k_base"))
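To see how the token count feeds into those parameters, a rough back-of-the-envelope estimate can help. The sketch below assumes chunks are cut at exactly `chunk_size` tokens with no overlap, which is a simplification of what the library does, and the token count is a made-up placeholder; both helper functions are hypothetical.

```python
import math


def estimate_chunks(total_tokens: int, chunk_size: int) -> int:
    """Rough number of chunks, ignoring overlap and sentence-boundary effects."""
    return math.ceil(total_tokens / chunk_size)


def max_triplets(total_tokens: int, chunk_size: int, max_triplets_per_chunk: int) -> int:
    """Upper bound on extracted triplets, reached only if every chunk hits its cap."""
    return estimate_chunks(total_tokens, chunk_size) * max_triplets_per_chunk


# Hypothetical token count; substitute the value returned by num_tokens_from_string.
total_tokens = 12_000
for chunk_size in (256, 512, 1024):
    chunks = estimate_chunks(total_tokens, chunk_size)
    print(chunk_size, chunks, max_triplets(total_tokens, chunk_size, 50))
```

Smaller chunks mean more chunks to process and a higher ceiling on the number of triplets, while larger chunks reduce both at the cost of coarser extraction.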

4.3 Setup LLM, Service Context and Storage Context

We need to set up the Large Language Model (LLM) and the necessary contexts for interacting with the Neo4j database. We use OpenAI’s GPT-4 model (“gpt-4-1106-preview”), although other OpenAI models could be used.

Subsequently, we define the ServiceContext with a specific chunk_size parameter. This parameter determines the size of the text chunks that the LLM will process at a time. Larger chunks may provide more comprehensive context but can also introduce noise or dilute the importance of individual sentences. Conversely, smaller chunks may focus on specific details but might miss broader context.

The StorageContext is established by instantiating the Neo4jGraphStore with the credentials from Section 4.1, which links the Python environment to the Neo4j database so that the index can store the extracted triplets there and query them later.

# Define the LLM (alternative model: "gpt-3.5-turbo-16k")
llm = OpenAI(temperature=0, model="gpt-4-1106-preview")
service_context = ServiceContext.from_defaults(llm=llm, chunk_size=512)

# Instantiate Neo4jGraphStore and StorageContext
graph_store = Neo4jGraphStore(username=username, password=password, url=url, database="neo4j")
storage_context = StorageContext.from_defaults(graph_store=graph_store)

4.4 Using LLM to Create a Knowledge Graph

The KnowledgeGraphIndex.from_documents function is central to this process. It automates the construction of a knowledge graph from unstructured text data. This function takes in the documents we have preprocessed, along with storage_context and service_context that we set up earlier. The max_triplets_per_chunk parameter, set to 50, defines the maximum number of relationship triplets (subject-predicate-object) extracted from each chunk of text. This parameter balances the depth and breadth of the information captured in the knowledge graph.

Next, we initialize the query engine with index.as_query_engine, which allows querying the knowledge graph. The include_text=True parameter ensures that the textual content is included in the query responses, and response_mode='tree_summarize' specifies how the query response should be structured. This configuration is crucial for extracting meaningful insights from the knowledge graph.
