Getting Started With Open-Source Latte

The ability to create high-quality videos using artificial intelligence is rapidly evolving. Recently, models like OpenAI's Sora, along with the newly released open-source model Latte, have showcased the potential of transformer-based architectures for generating realistic videos from textual descriptions.

As an open-source model akin to Sora, Latte empowers individuals to train their own custom AI models for video generation. Unlike closed-source solutions, it grants the freedom and flexibility to tailor the video creation process to specific needs and preferences.

This democratization of access to AI video generation opens doors for a wider audience to explore and contribute to this rapidly developing field.

1. Latte: Open-Source Video Generation

The release of Latte is a significant step toward democratizing AI video generation. Unlike closed models such as Sora, Latte is open-source: anyone can access, modify, and contribute to it. This openness fosters a collaborative environment where researchers, developers, and creators can build on its capabilities and push the boundaries of AI-powered video creation.

A Viable Alternative to Leading Models:

While models like OpenAI's Sora have showcased the potential of transformer-based architectures for generating high-fidelity videos from text descriptions, their closed-source nature limits accessibility and exploration. Latte emerges as an open alternative built on similar transformer-based principles, with its code and pretrained models available for anyone to study and extend.

Train Your Own Custom Video Generation Engine

The cornerstone of Latte is that you can use it to train your own custom video generation model. This empowers you to:

- Craft unique video content: Train the model on a dataset specifically curated for your project. This allows Latte to capture the essence of your desired video style, be it artistic flourishes, specific motion patterns, or adherence to a particular visual aesthetic.

- Fine-tune the generation process: Latte provides the flexibility to adjust various training parameters. These parameters influence the characteristics of the generated videos, such as:

  - Level of detail: Control the visual fidelity and sharpness of the generated video.

  - Motion patterns: Fine-tune the flow and movement within the video frames.

  - Style transfer: Incorporate elements from artistic styles or reference videos into the generated content.

By offering an open-source platform, Latte fosters a vibrant community where users can:

- Share and contribute custom training datasets: This allows for the creation of specialized models tailored to various applications, like generating explainer videos, product demonstrations, or artistic visualizations.

- Develop and experiment with new training techniques: The open-source nature facilitates collaboration and innovation, potentially leading to significant advancements in the field of AI video generation.

2. Technical Details Under the Hood

At the heart of Latte lies the Transformer architecture popularized for images by Vision Transformers (ViTs), a relatively recent advance in deep learning. Convolutional Neural Networks (CNNs) build up context gradually through stacks of local filters. A Transformer's self-attention instead lets every token interact directly with every other token. This makes it well suited to capturing the intricate relationships and dependencies within video, both across the regions of a frame and across frames.

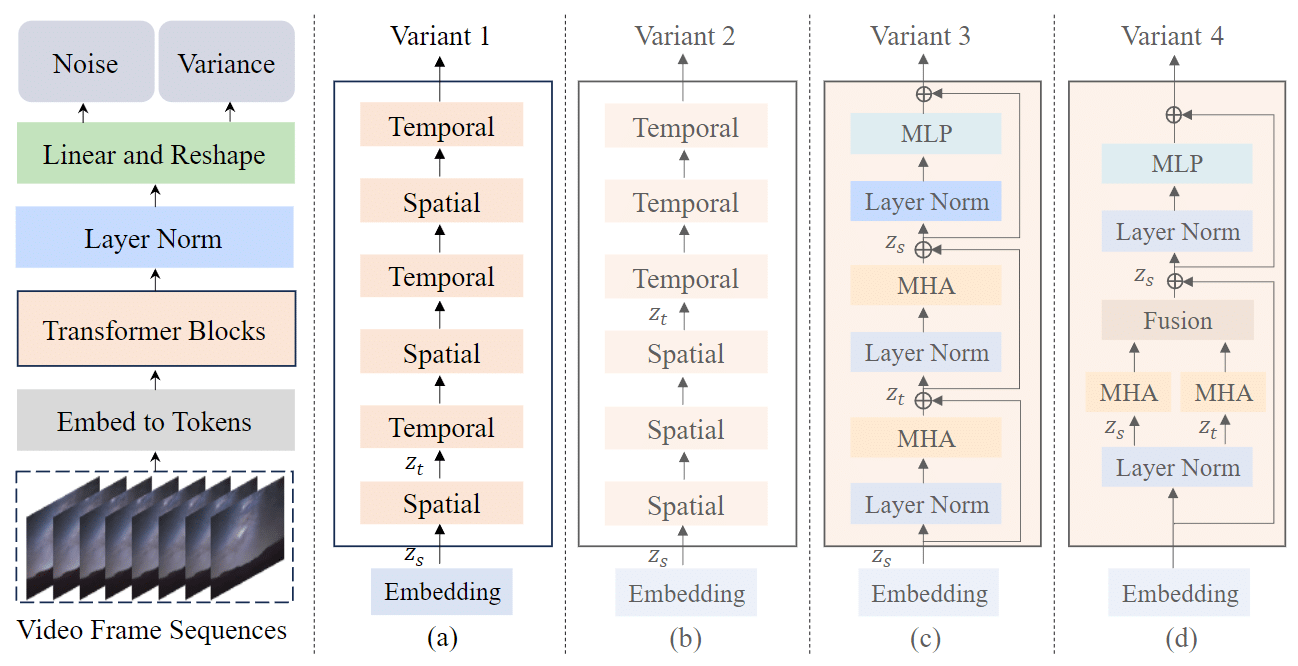

Figure 1: Latte's Architecture

Breakdown of Latte’s Video Generation Process using ViTs:

Tokenization: Just as text is broken down into words, the first step divides video frames into smaller units called tokens, each encapsulating the visual information of a specific region. In Latte, this happens in latent space. A pretrained variational autoencoder (VAE) first compresses each frame, and patch embedding then splits the latents into patches and projects each one into a vector suitable for the Transformer.
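To make patch embedding concrete, here is a minimal pure-Python sketch. It assumes a single frame (or latent) stored as nested lists indexed `[row][col][channel]`. `patchify` and `linear_embed` are hypothetical helpers, not functions from the Latte repository. A real implementation would use batched tensor operations with a learned projection.

```python
from typing import List

# A frame (or VAE latent) as nested lists: frame[row][col][channel].
Frame = List[List[List[float]]]


def patchify(frame: Frame, patch: int) -> List[List[float]]:
    """Split an H x W x C frame into non-overlapping patch x patch tokens.

    Patches are visited row by row; each token is the flattened patch
    (patch * patch * C values) with the channel index varying fastest.
    """
    height, width = len(frame), len(frame[0])
    if height % patch or width % patch:
        raise ValueError("frame height and width must be divisible by the patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tokens.append([
                value
                for row in frame[top:top + patch]
                for pixel in row[left:left + patch]
                for value in pixel
            ])
    return tokens


def linear_embed(tokens: List[List[float]], weight: List[List[float]], bias: List[float]) -> List[List[float]]:
    """Project each flattened patch: weight is [embed_dim][token_len], bias is [embed_dim]."""
    return [
        [sum(w * x for w, x in zip(w_row, token)) + b for w_row, b in zip(weight, bias)]
        for token in tokens
    ]


# Usage: a 4x4 frame with 3 channels and 2x2 patches gives 4 tokens of 12 values each.
frame = [[[float(r * 4 + c)] * 3 for c in range(4)] for r in range(4)]
tokens = patchify(frame, patch=2)
print(len(tokens), len(tokens[0]))  # 4 12
```

Applied per frame, a clip of F frames yields F * (H/p) * (W/p) tokens. This is why the patch size strongly affects how much work the Transformer has to do.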

Transformer Backbone: The core of Latte is a stack of Transformer blocks that operates on these tokens. Each block combines a self-attention mechanism with a feed-forward network:

- Self-attention: This mechanism lets each token weigh its relationships with other tokens rather than considering its own content in isolation. Latte applies attention along two axes. Spatial attention relates tokens within the same frame, capturing how objects are arranged in a scene. Temporal attention relates tokens at the same position across frames, capturing motion and keeping the video temporally coherent.

- Feed-forward network: This component further refines each token's representation by applying non-linear transformations to the output of the self-attention layer.

Latte does not use an autoregressive decoder that produces frames one after another. Instead, it processes all frames of a clip together and refines them jointly through the diffusion process. The Latte paper explores several ways of arranging spatial and temporal attention, such as interleaving spatial and temporal Transformer blocks or factorizing attention within each block.
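The self-attention step can be sketched in a few lines. This is a single-head, pure-Python illustration of scaled dot-product attention, softmax(QKᵀ/√d)V. It assumes the queries, keys, and values have already been computed. It omits the learned projections, multiple heads, residual connections, and normalization that real Transformer blocks add.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax (subtract the max before exponentiating)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head attention: each output row is softmax(q_i . K^T / sqrt(d)) @ V."""
    d = len(k[0])
    outputs = []
    for q_row in q:
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d) for k_row in k]
        weights = softmax(scores)
        outputs.append([
            sum(w * v_row[j] for w, v_row in zip(weights, v))
            for j in range(len(v[0]))
        ])
    return outputs


# Self-attention: queries, keys, and values all come from the same tokens.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(scaled_dot_product_attention(tokens, tokens, tokens))
```

In this picture, spatial attention would call the function on the tokens of one frame. Temporal attention would call it on the tokens that share a patch position across frames.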

Benefits of Vision Transformers for Video Generation:

- Capturing Long-range Dependencies: Because self-attention connects every token to every other token, ViTs capture long-range interactions more directly than CNNs, whose receptive fields grow only gradually with depth. This is crucial for generating realistic and temporally consistent videos, since the model can relate distant objects and their movements across the scene.

- Scalability: ViT models can be efficiently scaled by increasing the number of transformer layers and attention heads within the architecture. This allows them to handle increasingly complex video data and potentially improve the quality of the generated videos.
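Attending over everything has a price. The number of query-key pairs grows with the square of the token count, and a video contains frames × tokens-per-frame tokens. The hypothetical helpers below compare full spatio-temporal attention with attention split into a spatial pass and a temporal pass. That split is the kind of decomposition Latte's model variants rely on. The example sizes (16 frames, 256 tokens per frame) are illustrative assumptions.

```python
def full_attention_pairs(frames: int, tokens_per_frame: int) -> int:
    """Query-key pairs when every token in the clip attends to every other token."""
    total_tokens = frames * tokens_per_frame
    return total_tokens ** 2


def factorized_attention_pairs(frames: int, tokens_per_frame: int) -> int:
    """Pairs for one spatial pass plus one temporal pass.

    Spatial: each frame attends within itself (tokens_per_frame ** 2 pairs per frame).
    Temporal: each patch position attends across frames (frames ** 2 pairs per position).
    """
    spatial = frames * tokens_per_frame ** 2
    temporal = tokens_per_frame * frames ** 2
    return spatial + temporal


frames, tokens_per_frame = 16, 256  # illustrative sizes, e.g. a 16x16 grid of latent patches
print(full_attention_pairs(frames, tokens_per_frame))        # 16777216
print(factorized_attention_pairs(frames, tokens_per_frame))  # 1114112
```

With these sizes, the factorized version scores roughly 15 times fewer pairs. This is one reason video Transformers rarely apply full attention over every token at once.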

Additional Technical Details:

- Latent Diffusion: Latte is a latent diffusion model, and the Transformer described above serves as its denoising network. During training, noise is progressively added to the VAE latents of a video, and the model learns to predict and remove that noise. At generation time, it starts from pure noise and denoises the latents step by step. The VAE decoder then maps the result back to video frames. This approach helps the model generate diverse and realistic video outputs.

- Timestep Embedding: Because the denoising network is applied at many noise levels, it must know which diffusion step it is working on. Latte embeds this timestep and injects it into the Transformer blocks so the network can adjust how strongly it denoises. The paper compares adding it as extra tokens with a scalable adaptive layer normalization (S-AdaLN). The order of frames, by contrast, is conveyed by temporal position embeddings.
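Both ideas can be sketched together in pure Python. The noising step assumes the standard DDPM formulation, x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε, with a linear β schedule over 1,000 steps from 1e-4 to 0.02. The timestep embedding uses the sinusoidal scheme of DiT-style diffusion transformers. Both are common defaults and are assumptions here, not values or code read from Latte's repository. For simplicity, the latent is a flat list of floats.

```python
import math
import random
from typing import List, Tuple


def linear_alpha_bars(num_steps: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule (assumed defaults)."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0: List[float], t: int, alpha_bars: List[float], rng: random.Random) -> Tuple[List[float], List[float]]:
    """Sample x_t ~ N(sqrt(a) * x_0, (1 - a) * I) with a = alpha_bar_t.

    Returns the noisy latent and the Gaussian noise the network would learn to predict.
    """
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]
    return x_t, noise


def timestep_embedding(t: float, dim: int, max_period: float = 10000.0) -> List[float]:
    """Sinusoidal embedding: cosines then sines of t at geometrically spaced frequencies."""
    if dim % 2:
        raise ValueError("dim must be even")
    half = dim // 2
    # Frequencies fall from 1 down toward 1 / max_period across the half-dimension.
    freqs = [math.exp(-math.log(max_period) * i / half) for i in range(half)]
    return [math.cos(t * f) for f in freqs] + [math.sin(t * f) for f in freqs]


alpha_bars = linear_alpha_bars()
latent = [0.5, -1.0, 2.0]  # stand-in for a flattened VAE latent
t = 500
x_t, noise = add_noise(latent, t, alpha_bars, random.Random(0))
t_emb = timestep_embedding(t, dim=8)
```

During training, the network would receive `x_t` together with `t_emb` (usually after a small MLP). It would be trained to predict `noise`, typically with a mean-squared-error loss.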

Understanding these technical aspects requires a solid foundation in deep learning concepts. The original Latte paper covers them in more depth.

Experimental Validation

Latte's authors evaluated the model across several video generation scenarios:

Unconditional Generation:

- Benchmark Datasets: FaceForensics, SkyTimelapse, and Taichi-HD were used to evaluate the model's ability to generate diverse and coherent video sequences without any conditioning.

- Metrics: Fréchet Video Distance (FVD), alongside Fréchet Inception Distance (FID) and Inception Score (IS), was used to quantify the realism and quality of the generated videos.

- Observations: Latte demonstrated exceptional performance in unconditional generation tasks, producing visually appealing and temporally consistent video sequences that exhibit a high degree of diversity.
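For reference, the two image-level metrics are usually defined as below. Here μ_r, Σ_r and μ_g, Σ_g are the mean and covariance of Inception-network features for real and generated samples. p(y | x) is the Inception classifier's label distribution for a generated sample x, and p(y) is its marginal over generated samples. Lower FID is better and higher IS is better. Fréchet Video Distance (FVD) applies the same Fréchet formula to features from a video network (I3D) instead.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\left( \Sigma_r + \Sigma_g - 2 \left( \Sigma_r \Sigma_g \right)^{1/2} \right)

\mathrm{IS} = \exp\left( \mathbb{E}_{x \sim p_g} \left[ D_{\mathrm{KL}}\left( p(y \mid x) \,\Vert\, p(y) \right) \right] \right)
```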

Figure: sample videos on Taichi-HD, FaceForensics, and SkyTimelapse

Conditional Generation based on Classes:

- Evaluation Setup: The model was trained on UCF101, whose videos are labeled with 101 human-action classes (e.g., walking, dancing, jumping).

- Metrics: Similar to unconditional generation, FID and IS were used alongside human evaluation to assess the generated videos’ adherence to the designated classes.

- Findings: Latte effectively leveraged class information to produce videos that visually embodied the characteristics of the targeted categories. Human evaluations further confirmed the model’s ability to generate class-specific content with a high degree of accuracy.

Figure: sample videos on UCF101

Conditional Generation based on Prompts:

- Experimental Design: Users provided detailed textual descriptions outlining desired content, objects, actions, and the overall mood for the video generation.

- Evaluation: The generated videos were assessed, including through human evaluation, on their faithfulness to the provided prompts.

- Outcomes: Latte exhibited remarkable capabilities in translating textual descriptions into corresponding video sequences. The generated videos displayed a high degree of alignment with the prompts, showcasing the model’s potential for creative text-to-video applications.

3. Get Started With Inference

This section provides a basic introduction to using pre-trained Latte models for video generation. This is based on the original repo: https://github.com/Vchitect/Latte

Please note: This is a preliminary guide. A more comprehensive user guide and an accompanying notebook for running Latte will be available soon. In the meantime, let’s delve into the initial steps.

Pre-requisites:

- Environment Setup:

  - Ensure you have Python and PyTorch installed. Refer to the provided `environment.yml` file to create a suitable Conda environment.

  - Activate the created environment using `conda activate latte`.

Downloading Pre-trained Models:

Latte offers pre-trained models trained on various datasets. These can be found in the repository.

Sampling Videos:

The `sample.py` script generates videos from pre-trained models.

- Example (FaceForensics dataset):

```bash
bash sample/ffs.sh
```

- DDP for Large-scale Sampling:

To generate hundreds of videos in parallel across multiple GPUs with PyTorch DistributedDataParallel (DDP), use the script:

```bash
bash sample/ffs_ddp.sh
```
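One way to picture DDP sampling is that every GPU process (rank) generates a disjoint slice of the requested videos. The helper below is a hypothetical round-robin split written for illustration; it is not taken from `ffs_ddp.sh`. In a real run, `rank` and `world_size` would come from `torch.distributed.get_rank()` and `torch.distributed.get_world_size()`.

```python
from typing import List


def shard_indices(num_videos: int, rank: int, world_size: int) -> List[int]:
    """Round-robin split: rank r generates videos r, r + world_size, r + 2 * world_size, ..."""
    if world_size < 1 or not 0 <= rank < world_size:
        raise ValueError("need world_size >= 1 and 0 <= rank < world_size")
    return list(range(rank, num_videos, world_size))


# Four GPUs sharing ten videos: every index is assigned to exactly one rank.
for rank in range(4):
    print(rank, shard_indices(10, rank, world_size=4))
```

For example, `shard_indices(10, rank=1, world_size=4)` returns `[1, 5, 9]`. In practice, each rank would also use its own random seed so that its videos differ from those of the other ranks.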

Text-to-Video Generation:

For text-based video generation, download the required models (`t2v_required_models`) and run:

```bash
bash sample/t2v.sh
```

Customizing Sampling:

The sample.py script offers various arguments to control sampling behavior:

- Adjust sampling steps.

- Modify the classifier-free guidance scale.
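Classifier-free guidance is commonly formulated as follows. The model predicts the noise twice, once with the condition (class label or text prompt) and once without. The guidance scale s then extrapolates from the unconditional prediction toward the conditional one. This is a generic sketch. The exact argument name in `sample.py` and the scale convention it uses are not assumed here (some codebases use 1 + w instead of s).

```python
from typing import List


def classifier_free_guidance(eps_uncond: List[float], eps_cond: List[float], scale: float) -> List[float]:
    """Guided noise prediction: eps_uncond + scale * (eps_cond - eps_uncond), element-wise."""
    if len(eps_uncond) != len(eps_cond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


uncond = [0.0, 2.0]
cond = [1.0, 4.0]
print(classifier_free_guidance(uncond, cond, scale=1.0))  # [1.0, 4.0]
print(classifier_free_guidance(uncond, cond, scale=4.0))  # [4.0, 10.0]
```

A scale of 1 reproduces the conditional prediction. Larger values follow the condition more closely at some cost to diversity.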

Additional Notes:

- Detailed information on available arguments and functionalities can be found within the script itself.

- Consult the repository’s documentation for further guidance on advanced usage scenarios.

4. Custom Latte Training

Please refer to https://github.com/lyogavin/train_your_own_sora for further details.

Step 1: Set up the environment

Download and set up the repo:

```bash
git clone https://github.com/lyogavin/Latte_t2v_training.git
cd Latte_t2v_training
conda env create -f environment.yml
conda activate latte
```

Step 2: Download the pretrained model

Download the pretrained model as follows:

```bash
sudo apt-get install git-lfs  # or: sudo yum install git-lfs
git lfs install
git clone --depth=1 --no-single-branch https://huggingface.co/maxin-cn/Latte /root/pretrained_Latte/
```

Step 3: Prepare training data

Put the video files in a directory and create a CSV file that specifies the prompt for each video.

The CSV file format:

| video_file_name | prompt |
|---|---|
| VIDEO_FILE_001.mp4 | PROMPT_001 |
| VIDEO_FILE_002.mp4 | PROMPT_002 |
| ... | ... |
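If your prompts live in a Python dictionary, a small script can produce this file. The sketch assumes an ordinary comma-separated file with a header row that uses the two column names above. `write_prompt_csv` is a hypothetical helper, so check the loader in `Latte_t2v_training` for the exact delimiter and header it expects. Python's `csv` module automatically quotes prompts that contain commas.

```python
import csv
from pathlib import Path
from typing import Dict


def write_prompt_csv(video_dir: str, prompts: Dict[str, str], csv_path: str) -> int:
    """Write a two-column CSV (video_file_name, prompt) for videos present in video_dir.

    Returns the number of rows written; raises if a prompt refers to a missing file.
    """
    folder = Path(video_dir)
    missing = [name for name in prompts if not (folder / name).is_file()]
    if missing:
        raise FileNotFoundError(f"videos not found in {folder}: {missing}")
    with open(csv_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["video_file_name", "prompt"])
        for name, prompt in sorted(prompts.items()):
            writer.writerow([name, prompt])
    return len(prompts)
```

A call such as `write_prompt_csv("/data/videos", {"VIDEO_FILE_001.mp4": "a cat jumping onto a sofa"}, "/data/train.csv")` would write the header plus one row. The paths in that call are placeholders.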

Step 4: Config

The config is in `configs/t2v/t2v_img_train.yaml` and is fairly self-explanatory.

A few config entries to note:

- Point `video_folder` and `csv_path` to the paths of the training data.

- Point `pretrained_model_path` to the `t2v_required_models` directory of the downloaded model.

- Point `pretrained` to the `t2v.pt` file in the downloaded model.

- You can change `text_prompt` under the `validation` section to your own validation prompts. During training, every `ckpt_every` steps, the script generates videos from these prompts and publishes them to wandb (Weights & Biases) for you to check out.
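Before launching a long run, it can help to verify that these paths exist. The sketch assumes the YAML file has been loaded into a dictionary (for example with PyYAML's `yaml.safe_load`) and that the four keys sit at its top level. The real nesting in `t2v_img_train.yaml` may differ, and `check_training_paths` is a hypothetical helper.

```python
import os
from typing import Callable, Dict, List

# Hypothetical expectations: which config keys should name a directory vs. a file.
EXPECTED_PATHS: Dict[str, Callable[[str], bool]] = {
    "video_folder": os.path.isdir,
    "csv_path": os.path.isfile,
    "pretrained_model_path": os.path.isdir,
    "pretrained": os.path.isfile,
}


def check_training_paths(cfg: Dict[str, str]) -> List[str]:
    """Return a list of problems with the path entries (an empty list means all good)."""
    problems = []
    for key, exists in EXPECTED_PATHS.items():
        value = cfg.get(key)
        if not value:
            problems.append(f"{key} is not set")
        elif not exists(value):
            problems.append(f"{key} -> {value} does not exist")
    return problems
```

Printing the returned list before calling the training script surfaces a mistyped path in seconds rather than after the job has started.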

Step 5: Train

```bash
./run_img_t2v_train.sh
```
