A Technical End-to-End Python Guide to Automatic Web Browsing and Results Reporting

Imagine being able to automatically gather and summarize online information on topics as diverse as the latest advances in renewable energy, current market trends in the tech industry, or public health research. We call this process “intelligent web browsing”.

This article presents an end-to-end Python solution, built on LangChain and the OpenAI API, that automates this process. LangChain, a framework for building language model chains, works hand-in-hand with the OpenAI API, which provides access to large language models, to run online searches, aggregate data from multiple sources, and generate detailed reports automatically.

The practical value of this code is that it turns a complex, labor-intensive task into a streamlined, automated process. By quickly retrieving and summarizing relevant online information, it saves time and gives a broad overview of the topic at hand.

1. Overview of LangChain and Key Components

1.1 LangChain

LangChain is a versatile framework for developing applications powered by language models. It lets users build language model chains that connect a model to various sources of context and reason over that context.

LangChain’s main value lies in its composable tools and integrations for working with language models. It offers both built-in chains for high-level tasks and components for customizing existing chains or building new ones.

This implementation combines the following components to browse the web and report on the results:

OpenAI Embeddings

This component calls OpenAI’s embedding models to convert text into vector embeddings. Because these embeddings capture semantic meaning, they are the basis for semantic search: retrieving information relevant to a query based on what the text means rather than on exact keyword matches.

FAISS

FAISS, a library for efficient similarity search and clustering of dense vectors, is used in tandem with OpenAI Embeddings to store and search embeddings, aiding in the quick retrieval of pertinent information.
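To make the FAISS step concrete, the sketch below reproduces in plain Python what an exact, or flat, L2 index such as faiss.IndexFlatL2 computes: it compares the query with every stored vector and returns the k nearest by squared Euclidean distance. The function name flat_l2_search and the toy three-dimensional vectors are illustrative assumptions; real OpenAI embeddings have 1,536 dimensions, and FAISS performs this search in optimized native code.

```python
from typing import List, Sequence, Tuple


def flat_l2_search(
    stored: Sequence[Sequence[float]],
    query: Sequence[float],
    k: int,
) -> List[Tuple[int, float]]:
    """Brute-force k-nearest-neighbour search by squared L2 distance.

    Every stored vector is compared with the query, and the k closest
    are returned as (position, squared_distance) pairs, nearest first.
    """
    scored = []
    for position, vector in enumerate(stored):
        if len(vector) != len(query):
            raise ValueError("all vectors must share the query's dimension")
        squared_distance = sum((a - b) ** 2 for a, b in zip(vector, query))
        scored.append((position, squared_distance))
    # Sort by distance; ties keep insertion order because sort() is stable.
    scored.sort(key=lambda pair: pair[1])
    return scored[:k]


# Toy "embeddings" for three documents (hypothetical values).
documents = [
    [0.9, 0.1, 0.0],  # e.g. a page about renewable energy
    [0.0, 1.0, 0.0],  # e.g. a page about tech market trends
    [0.8, 0.2, 0.1],  # another renewable-energy page
]
nearest = flat_l2_search(documents, [1.0, 0.0, 0.0], k=2)
print([position for position, _ in nearest])  # [0, 2]
```

Exhaustive search is exact but its cost grows linearly with the number of stored vectors, which is usually acceptable for the modest number of page chunks a single research query collects.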

ChatOpenAI (ChatGPT models)

LangChain’s interface to OpenAI’s chat models, such as GPT-3.5 Turbo, which lets the system handle queries in a conversational, chat-like format.

GoogleSearchAPIWrapper

This wrapper around Google’s Custom Search JSON API lets the system run web searches and retrieve online information for user queries.
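Each search the wrapper performs is a request to Google’s Custom Search JSON API. The sketch below only builds the request URL with the standard library, to show which values travel with each query: key (the API key), cx (the search engine ID), q (the query), and num (the number of results, which the API caps at 10 per request). The helper name build_custom_search_url is hypothetical and no network call is made; GoogleSearchAPIWrapper itself goes through google-api-python-client rather than building URLs by hand.

```python
from urllib.parse import parse_qs, urlencode, urlparse

# Public endpoint of Google's Custom Search JSON API (v1).
CUSTOM_SEARCH_ENDPOINT = "https://www.googleapis.com/customsearch/v1"


def build_custom_search_url(api_key: str, cse_id: str, query: str, num: int = 3) -> str:
    """Return the GET URL for one Custom Search request (nothing is sent)."""
    if not 1 <= num <= 10:
        # The API returns at most 10 results per request.
        raise ValueError("num must be between 1 and 10")
    params = {"key": api_key, "cx": cse_id, "q": query, "num": num}
    # urlencode escapes characters such as "&" and spaces in the query.
    return f"{CUSTOM_SEARCH_ENDPOINT}?{urlencode(params)}"


url = build_custom_search_url("YOUR_API_KEY", "YOUR_CSE_ID", "S&P 500 January effect")
# Decoding the query string shows the "&" in the search term survived intact.
print(parse_qs(urlparse(url).query)["q"])  # ['S&P 500 January effect']
```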

The system’s strength lies in combining the contextual understanding and reasoning of language models (through OpenAI Embeddings and ChatGPT) with the search and retrieval capabilities of FAISS and GoogleSearchAPIWrapper.

2. Setting Up the Environment

To implement the solution presented in this article, three technologies need to be set up correctly first: LangChain, the OpenAI API, and the Google Search API. Here’s a guide to help you through this process.

2.1 Installation and Dependencies

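Install LangChain, the OpenAI client, FAISS, and the Google API client libraries for interacting with Google’s services. tiktoken is needed by OpenAIEmbeddings, and html2text by WebResearchRetriever, which converts the fetched pages to plain text. The code in this article uses the pre-1.0 openai interface (openai.ChatCompletion) and LangChain’s original import paths (such as langchain.chat_models), so pin openai below 1.0; newer LangChain releases may also require installing an older langchain version for these imports to work.

```bash
pip install langchain "openai<1.0" faiss-cpu tiktoken html2text google-api-python-client google-auth-httplib2 google-auth-oauthlib
```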

2.2 Setting Up API Keys and Environment Variables

1. Google API Key:

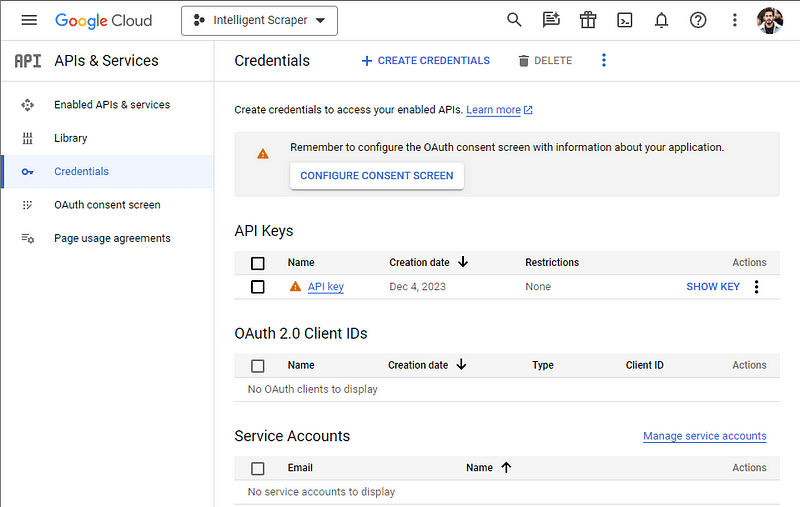

- In the Google Cloud Console, open APIs & Services → Credentials, create an API key, and copy it (see Figure 1).

Figure 1: A screenshot of the Google Cloud Platform APIs & Services Credentials dashboard, showing the creation of an API key.

- Navigate to APIs & Services → Dashboard in the Google Cloud Console and enable the Custom Search API.

2. Google Custom Search Engine ID (CSE ID):

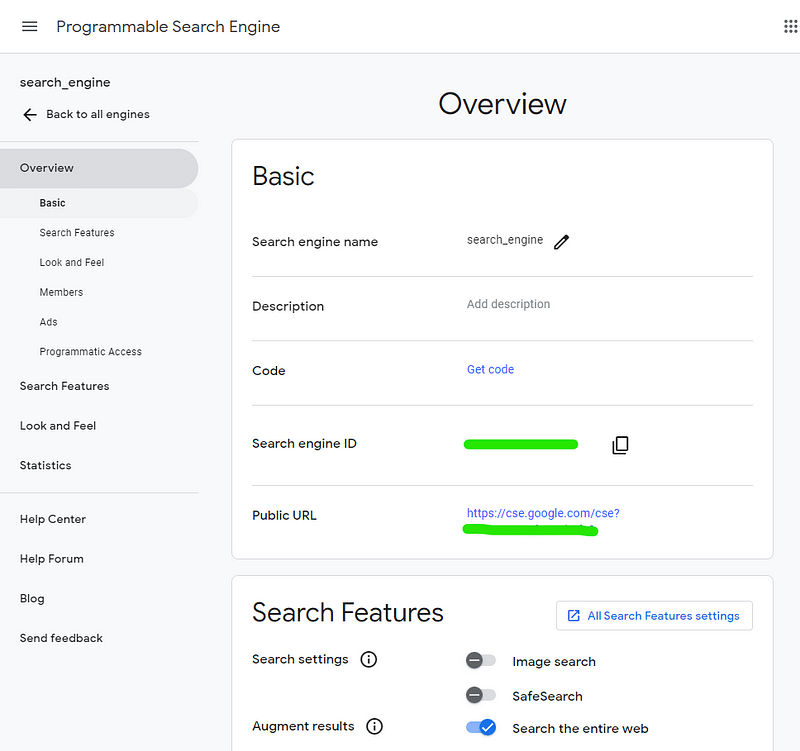

- Create a search engine in Google’s Programmable Search Engine control panel and copy its Search engine ID, which is used later (see Figure 2).

Figure 2: Google's Programmable Search Engine management page, showing the basic settings of a user-created search engine, including its name, description, and Search engine ID.
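The complete script in Section 3.3 assigns these keys directly in code. A minimal alternative, sketched below, reads them from the environment and fails early with a clear message if any is missing. The variable names match the ones the script uses (GOOGLE_API_KEY, GOOGLE_CSE_ID, OPENAI_API_KEY); the helper require_env is a hypothetical name.

```python
import os
from typing import Dict, Iterable

# Environment variables the script in Section 3.3 relies on.
REQUIRED_VARS = ("GOOGLE_API_KEY", "GOOGLE_CSE_ID", "OPENAI_API_KEY")


def require_env(names: Iterable[str] = REQUIRED_VARS) -> Dict[str, str]:
    """Return the requested environment variables, or raise if any is unset or empty."""
    values = {name: os.environ.get(name, "") for name in names}
    missing = [name for name, value in values.items() if not value]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return values
```

Calling require_env() once at start-up, after exporting the keys in your shell (for example export GOOGLE_API_KEY="your-key"), keeps secrets out of the source file and surfaces configuration mistakes before any API call is made.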

3. Python Implementation

This section walks through the Python implementation that automates the retrieval and summarization of web content using LangChain, OpenAI, and Google’s Search API. We first discuss the key elements and then provide the complete code at the end.

3.1 Building the Web Research Retriever

The WebResearchRetriever class integrates various components to fetch and process web-based information.

Below, we detail the initialization process and the components involved, along with their corresponding code snippets.

Initialization of Components:

- OpenAI Embeddings: These generate text embeddings to enable semantic searches and enhance contextual understanding.

- FAISS Index: This stores and retrieves text embeddings efficiently.

- In-Memory Docstore: This stores documents temporarily during the retrieval process.

- ChatOpenAI: This gives chat-style access to OpenAI’s GPT models.

- GoogleSearchAPIWrapper: This performs web searches using Google’s API.

We initialize each component in the code as follows:

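```python
from langchain.retrievers.web_research import WebResearchRetriever
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.vectorstores import FAISS
from langchain.docstore import InMemoryDocstore
from langchain.chat_models import ChatOpenAI
from langchain.utilities import GoogleSearchAPIWrapper
import faiss

# Initializing OpenAI Embeddings
embeddings_model = OpenAIEmbeddings()

# Setting up FAISS Index
# 1536 matches the output dimension of OpenAI's text-embedding-ada-002 embeddings
embedding_size = 1536
index = faiss.IndexFlatL2(embedding_size)
vectorstore_public = FAISS(embeddings_model.embed_query, index, InMemoryDocstore({}), {})

# The chat model (used to write search queries) and the Google search wrapper
llm = ChatOpenAI(model_name="gpt-3.5-turbo-16k", temperature=0, streaming=True)
search = GoogleSearchAPIWrapper()

# Creating the WebResearchRetriever
web_retriever = WebResearchRetriever.from_llm(
    vectorstore=vectorstore_public,
    llm=llm,
    search=search,
    num_search_results=3,
)
```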

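The WebResearchRetriever.from_llm() method initializes the retriever with the components above. The retriever uses the language model to generate Google search queries from the question, the search wrapper to find pages, and the vector store to index the fetched content and return the passages most relevant to the question. The num_search_results parameter controls how many Google results are fetched for each generated query.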
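To see how num_search_results interacts with query generation, the sketch below models the search step in plain Python. It is a simplified, hypothetical model, not LangChain’s code: collect_new_urls and fake_search are illustrative names, the search argument stands in for GoogleSearchAPIWrapper.results (which returns dictionaries with a "link" key), and seen_urls plays the role of the retriever’s cache of URLs it has already loaded. Because LangChain’s default prompt asks the LLM for three queries, up to three times num_search_results pages can be fetched per question, minus duplicates.

```python
from typing import Callable, Dict, List, Sequence


def collect_new_urls(
    queries: Sequence[str],
    search: Callable[[str, int], List[Dict[str, str]]],
    num_search_results: int,
    seen_urls: List[str],
) -> List[str]:
    """Gather result URLs for every query, skipping URLs already loaded."""
    new_urls: List[str] = []
    for query in queries:
        # num_search_results applies to each query separately.
        for result in search(query, num_search_results):
            url = result["link"]
            if url not in seen_urls and url not in new_urls:
                new_urls.append(url)
    # Remember these URLs so later questions do not fetch them again.
    seen_urls.extend(new_urls)
    return new_urls


def fake_search(query: str, n: int) -> List[Dict[str, str]]:
    """Stub search: two shared pages, then one page specific to the query."""
    pages = ["https://a.example", "https://b.example", f"https://{query}.example"]
    return [{"link": url} for url in pages[:n]]


cache: List[str] = []
print(collect_new_urls(["q1", "q2", "q3"], fake_search, num_search_results=3, seen_urls=cache))
```

With three queries and num_search_results=3, the stub yields nine results but only five distinct URLs, and a second call for the same queries returns nothing new because every URL is already cached.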

3.2 The Retrieval and Reporting Process

The retrieval and reporting process is the core of the system: it fetches data relevant to a user’s query and presents it in a structured, readable form.

The process relies on two pieces: the RetrievalQAWithSourcesChain class and a custom callback handler, PrintRetrievalHandler.

1. Retrieval Process:

At the center of the process, RetrievalQAWithSourcesChain combines a language model with the web retriever to answer user queries.

When a query arrives, the chain asks the retriever for documents that can provide the required information.

The retriever selects these documents by the semantic similarity of their content to the query, which the embeddings generated earlier make possible. The chain then passes the selected documents to the language model, which writes an answer and lists the sources it used.

```python
from langchain.chains import RetrievalQAWithSourcesChain
from langchain.chat_models import ChatOpenAI
from langchain.utilities import GoogleSearchAPIWrapper

# Set up the ChatOpenAI model
llm = ChatOpenAI(model_name="gpt-3.5-turbo-16k", temperature=0, streaming=True)

# Set up the GoogleSearchAPIWrapper
search = GoogleSearchAPIWrapper()

# Instantiate the retrieval chain (web_retriever is the retriever built in Section 3.1)
qa_chain = RetrievalQAWithSourcesChain.from_chain_type(llm, retriever=web_retriever)
```
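One detail links these embeddings to the FAISS index from Section 3.1: IndexFlatL2 ranks documents by Euclidean (L2) distance, while semantic similarity is usually described in terms of cosine similarity. OpenAI’s documentation states that its embeddings are normalized to length 1, and for unit-length vectors the two measures produce the same ranking. For a query embedding q and a document embedding d with ‖q‖ = ‖d‖ = 1, so that cos(q, d) = q · d:

```latex
\|q - d\|_2^2 = \|q\|_2^2 + \|d\|_2^2 - 2\, q \cdot d = 2 - 2\cos(q, d)
```

A smaller L2 distance therefore always corresponds to a higher cosine similarity, so under that normalization assumption the flat L2 index returns the most semantically similar chunks first.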

2. Reporting Process:

PrintRetrievalHandler is a custom subclass of LangChain’s BaseCallbackHandler that formats and collects the retrieval results.

During retrieval, it hooks into two callback events to record the query and summarize each retrieved document:

- on_retriever_start: called when retrieval begins. LangChain passes it the serialized retriever followed by the query string, and the handler records the query.
- on_retriever_end: called with the retrieved documents. The handler iterates through them to compile a summary of each.

Here is a simplified definition of PrintRetrievalHandler:

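```python
from langchain.callbacks.base import BaseCallbackHandler


class PrintRetrievalHandler(BaseCallbackHandler):
    def __init__(self):
        self.results = []

    def on_retriever_start(self, serialized, query: str, **kwargs):
        # LangChain passes the serialized retriever first, then the query string
        print(f"Fetching information for: {query}")

    def on_retriever_end(self, documents, **kwargs):
        for doc in documents:
            source = doc.metadata.get("source", "Source not available")
            title = doc.metadata.get("title", "Title not available")
            # Summarization logic goes here; as a placeholder, keep the opening text
            summary = doc.page_content[:200]
            # Append results with source, title, URL, and summary
            self.results.append(f"**Source:** {source}\n**Title:** {title}\n**Summary:**\n{summary}\n")
```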

In the full handler (Section 3.3), when retrieval ends, the first 2,000 characters of each retrieved document are sent to OpenAI’s ChatCompletion API with a request for a succinct summary.

This is particularly useful when the documents are long and the user needs concise information. The handler then appends each summary, along with the document’s source and title, to its results.
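Because the handler’s logic is ordinary Python, it can be exercised without network calls by swapping the summarizer for a stub. The sketch below is a hypothetical, dependency-free variant: RetrievalReport, its summarize parameter, and the StubDocument class are illustrative names, not LangChain APIs, although the method signatures mirror the ones LangChain calls (the serialized retriever is passed before the query).

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class StubDocument:
    """Minimal stand-in for a LangChain Document (page_content plus metadata)."""
    page_content: str
    metadata: Dict[str, Any] = field(default_factory=dict)


class RetrievalReport:
    """Handler-like class whose summarizer is injected, so it runs offline."""

    def __init__(self, summarize: Callable[[str], str], max_chars: int = 2000):
        self.summarize = summarize
        self.max_chars = max_chars
        self.results: List[str] = []

    def on_retriever_start(self, serialized: Dict[str, Any], query: str, **kwargs: Any) -> None:
        self.results.append(f"**Question:** {query}\n")

    def on_retriever_end(self, documents: List[StubDocument], **kwargs: Any) -> None:
        for doc in documents:
            source = doc.metadata.get("source", "Source not available")
            title = doc.metadata.get("title", "Title not available")
            # Only the first max_chars characters are summarized, like the full handler.
            summary = self.summarize(doc.page_content[: self.max_chars])
            self.results.append(f"**Source:** {source}\n**Title:** {title}\n**Summary:**\n{summary}\n")


# A stub summarizer keeps the example offline: it returns the first sentence.
report = RetrievalReport(summarize=lambda text: text.split(". ")[0] + ".")
report.on_retriever_start({}, "What does the S&P 500 tend to do in January?")
report.on_retriever_end(
    [StubDocument("Stocks often rise in January. Evidence is mixed.", {"source": "https://example.com"})]
)
print("\n".join(report.results))
```

Injecting the summarizer keeps formatting logic testable on its own; in production the lambda would be replaced by a call to the chat model, as in extract_chunk.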

Finally, the results are printed, giving the user an answer to the query together with a summary of each source that contributed to it. The final output can be printed as follows:

```python
# Assuming 'result' contains the answer and source information from the retrieval process
print("\nAnswer:\n")
print(result['answer'])

print("\nSources and Summaries:\n")
for summary in retrieval_streamer_cb.results:
    print(summary)
```
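The answer and sources in the chain’s result come from a single LLM completion: the chain’s prompt asks the model to end with a "SOURCES:" part, which the chain splits off into a separate sources entry. The sketch below approximates that step with a regular expression; split_answer_and_sources is a hypothetical helper, and LangChain’s exact parsing rules may differ between versions.

```python
import re
from typing import Tuple


def split_answer_and_sources(completion: str) -> Tuple[str, str]:
    """Split an LLM completion into (answer, sources) at a 'SOURCES:' marker."""
    parts = re.split(r"SOURCES?:", completion, maxsplit=1, flags=re.IGNORECASE)
    if len(parts) == 1:
        # No marker: treat the whole completion as the answer.
        return completion.strip(), ""
    answer, sources = parts
    # Keep only the first line after the marker as the list of sources.
    source_lines = sources.strip().splitlines()
    return answer.strip(), source_lines[0].strip() if source_lines else ""


completion = "Stocks have often risen in January.\nSOURCES: https://a.example, https://b.example"
answer, sources = split_answer_and_sources(completion)
print(answer)   # Stocks have often risen in January.
print(sources)  # https://a.example, https://b.example
```

In the script, the parsed sources are available as result['sources'] and can be printed next to result['answer'].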

3.3 Complete Code

Lastly, putting all of the above logic together, we get the following code to perform intelligent web browsing:

```python
from langchain.callbacks.base import BaseCallbackHandler
from langchain.chains import RetrievalQAWithSourcesChain
from langchain.retrievers.web_research import WebResearchRetriever
import faiss
from langchain.vectorstores import FAISS
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.docstore import InMemoryDocstore
from langchain.chat_models import ChatOpenAI
from langchain.utilities import GoogleSearchAPIWrapper
import openai
import os

os.environ["GOOGLE_API_KEY"] = ""  # Get it at https://console.cloud.google.com/apis/api/customsearch.googleapis.com/credentials
os.environ["GOOGLE_CSE_ID"] = ""  # Get it at https://programmablesearchengine.google.com/
os.environ["OPENAI_API_BASE"] = "https://api.openai.com/v1"
os.environ["OPENAI_API_KEY"] = ""  # Get it at https://openai.com/account/api-keys
# The pre-1.0 openai client reads OPENAI_API_KEY when it is imported, so set the key explicitly
openai.api_key = os.environ["OPENAI_API_KEY"]

# Initialize required components
embeddings_model = OpenAIEmbeddings()
embedding_size = 1536  # Output dimension of OpenAI's text-embedding-ada-002 embeddings
index = faiss.IndexFlatL2(embedding_size)
vectorstore_public = FAISS(embeddings_model.embed_query, index, InMemoryDocstore({}), {})

llm = ChatOpenAI(model_name="gpt-3.5-turbo-16k", temperature=0, streaming=True)
search = GoogleSearchAPIWrapper()

web_retriever = WebResearchRetriever.from_llm(
    vectorstore=vectorstore_public,
    llm=llm,
    search=search,
    num_search_results=3,  # Results per generated search query; adjust for more or fewer sources
)


class PrintRetrievalHandler(BaseCallbackHandler):
    def __init__(self):
        self.results = []

    def on_retriever_start(self, serialized, query: str, **kwargs):
        # LangChain passes the serialized retriever first, then the query string
        self.results.append(f"**Question:** {query}\n")

    def on_retriever_end(self, documents, **kwargs):
        for doc in documents:
            source = doc.metadata.get("source", "Source not available")
            title = doc.metadata.get("title", "Title not available")
            snippet = doc.page_content[:2000]  # Summarize only the first 2,000 characters

            # Generate a summary for each document with the ChatCompletion API
            summary_prompt = f"Please summarize the following content from '{title}' ({source}):\n\n{snippet}"
            summary = self.extract_chunk(summary_prompt)

            # Append results with source URL, title, and summary
            self.results.append(f"**Source:** {source}\n**Title:** {title}\n**Summary:**\n{summary}\n")

    def extract_chunk(self, content):
        """
        Applies a prompt to some input content and extracts the response from OpenAI's ChatCompletion API.
        """
        response = openai.ChatCompletion.create(
            model="gpt-3.5-turbo-16k", messages=[{"role": "user", "content": content}], temperature=0
        )
        return response["choices"][0]["message"]["content"]


# User input
question = "What does the S&P 500 tend to do in January?"

# Instantiate the PrintRetrievalHandler
retrieval_streamer_cb = PrintRetrievalHandler()

# Perform retrieval and answer generation
qa_chain = RetrievalQAWithSourcesChain.from_chain_type(llm, retriever=web_retriever)
result = qa_chain({"question": question}, callbacks=[retrieval_streamer_cb])

# Print the answer and sources with summaries
print("\nMain Answer:\n")
print(result["answer"])
print("\nSources and Summaries:\n")
for item in retrieval_streamer_cb.results:
    print(item)
```

Figure 3: An example of an AI-generated response to a financial query, showcasing the main answer regarding the S&P 500 January Effect, followed by a list of sources and summaries providing additional insights and perspectives.

3.4 Bonus — Generating the Report

Transforming the information retrieved through intelligent web browsing into a cohesive report can be useful. For this, we create a script, and in particular a create_pdf function, that methodically constructs a PDF from the collected data.
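The create_pdf script itself is not reproduced here. As a lighter-weight alternative, the sketch below writes the same material (the main answer plus the source summaries collected by PrintRetrievalHandler) to a Markdown file using only the standard library. write_markdown_report is a hypothetical helper, not the author’s create_pdf; producing an actual PDF would still require a PDF library.

```python
from pathlib import Path
from typing import Iterable


def write_markdown_report(path: str, question: str, answer: str, summaries: Iterable[str]) -> Path:
    """Write the answer and per-source summaries to a Markdown file and return its path."""
    lines = [f"# {question}", "", "## Answer", "", answer.strip(), "", "## Sources and Summaries", ""]
    for entry in summaries:
        # Each entry is one formatted block from the handler's results list.
        lines.extend([entry.strip(), ""])
    report_path = Path(path)
    report_path.write_text("\n".join(lines), encoding="utf-8")
    return report_path
```

With the script above, a call such as write_markdown_report("report.md", question, result["answer"], [r for r in retrieval_streamer_cb.results if not r.startswith("**Question:**")]) skips the question entry the handler stores and keeps only the source summaries.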
