Video Synthesis and Super-Resolution Techniques of Decoder-Only Transformer Architectures

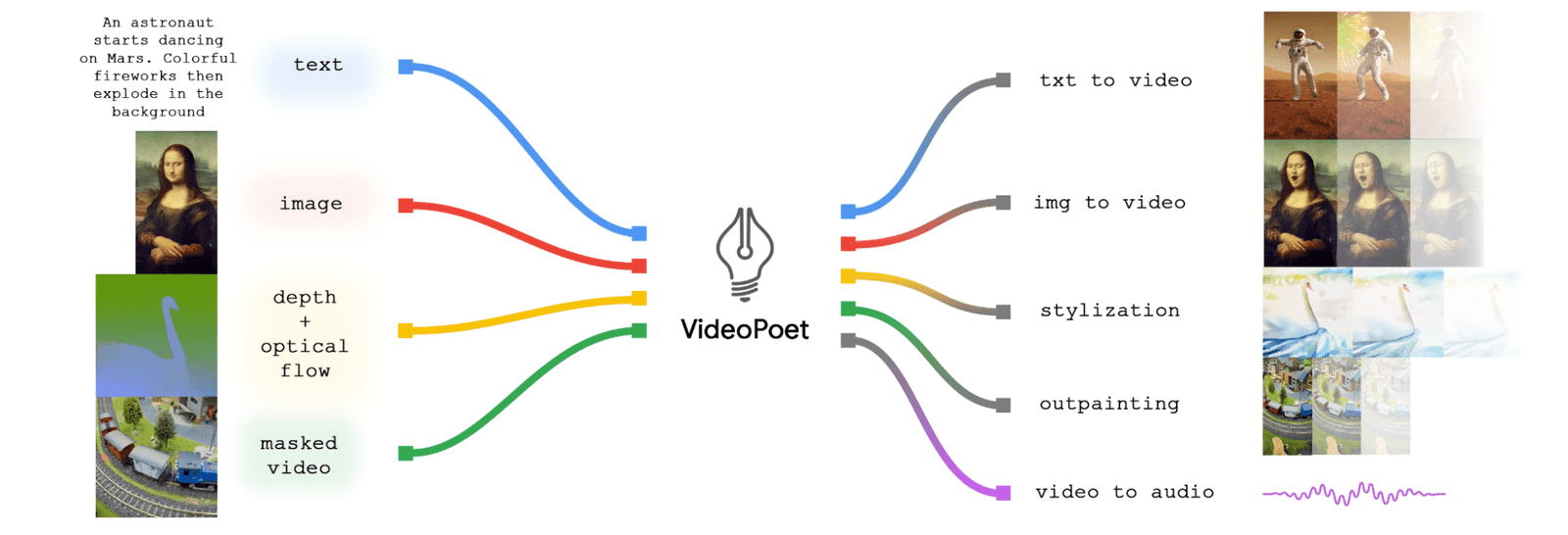

Developed by Google Research, VideoPoet combines images, videos, text, and audio in a single model. It employs a decoder-only transformer architecture and follows standard LLM training protocols, applied here to multimodal generation objectives.

Moreover, VideoPoet excels in versatility. It turns text or image prompts into high-quality videos with precision. It also performs video editing tasks like inpainting and outpainting. Furthermore, it expertly synchronizes videos with matching audio.

1. Capabilities of VideoPoet

- Text-to-Video: VideoPoet renders text into full-motion videos. For instance, it can depict an astronaut dancing on Mars against fireworks, showing its narrative understanding.

- Image-to-Video: The model’s ability to animate static images is demonstrated with the Mona Lisa, transforming the portrait into a video where she appears to sing, adding a temporal dimension to the painting.

- Depth and Optical Flow: VideoPoet’s application of depth and optical flow is shown by converting a swan silhouette into a video that simulates the swan swimming, reflecting the model’s adeptness in creating depth and motion.

- Stylization: The model can also artistically alter video content. An example is the transformation of a standard landscape into a stylized animation, demonstrating VideoPoet’s versatility in video stylization.

- Outpainting: VideoPoet expands the visual boundary of a scene through outpainting, as shown by extending the narrative space beyond the original frame, which allows for more expansive storytelling within the video.

- Video to Audio: Completing the multimodal experience, VideoPoet synthesizes audio tracks to accompany the visual content, ensuring a synchronized audio-visual output as evidenced by the corresponding waveform visualizations.

2. Advantages and Technical Details

VideoPoet’s architecture is key for video synthesis. It generates sequential data adeptly. This data forms the backbone of video frames and audio samples. It is this architecture that allows VideoPoet to produce coherent video sequences from varied inputs such as a single frame or a descriptive text prompt, as depicted in figure 2.

Furthermore, figure 2 illustrates VideoPoet’s bidirectional attention prefix mechanism. Here, the input tokens pass through an encoder before the model generates output autoregressively. This process combines several modalities, each contributing to the final synthesized video and audio. The model uses the MAGVIT-v2 and SoundStream encoders for visual and audio tokenization, respectively, and integrates these modalities with text tokens to predict the next visual and audio tokens in a unified vocabulary.
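To make the bidirectional attention prefix concrete, here is a minimal sketch of a prefix-style attention mask in plain Python. It assumes the conditioning tokens form a prefix in which every position can see every other prefix position, while the generated positions attend causally. The function name `prefix_lm_mask` and the toy lengths are illustrative assumptions, not taken from VideoPoet itself.

```python
from typing import List


def prefix_lm_mask(prefix_len: int, total_len: int) -> List[List[bool]]:
    """Return mask[i][j] == True when position i may attend to position j.

    Assumption for illustration: the first `prefix_len` positions are the
    conditioning prefix (bidirectional attention); the rest are generated
    autoregressively (causal attention).
    """
    if not 0 <= prefix_len <= total_len:
        raise ValueError("prefix_len must be between 0 and total_len")
    mask = []
    for i in range(total_len):
        row = []
        for j in range(total_len):
            both_in_prefix = i < prefix_len and j < prefix_len
            causal = j <= i  # a position may always see itself and the past
            row.append(both_in_prefix or causal)
        mask.append(row)
    return mask


if __name__ == "__main__":
    # Toy example: 3 prefix tokens followed by 3 generated tokens.
    for row in prefix_lm_mask(prefix_len=3, total_len=6):
        print("".join("x" if allowed else "." for allowed in row))
```

In the printed grid, the top-left 3x3 block is fully filled (prefix tokens see each other in both directions), while the remaining rows form the usual lower-triangular causal pattern.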

2.1 VideoPoet Architecture
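One common way to picture a unified vocabulary like the one mentioned above is to give each modality its own contiguous range of token ids. The sketch below assumes that layout. The vocabulary sizes and the helper names (`build_offsets`, `to_unified`, `from_unified`) are hypothetical and chosen only for illustration; they are not VideoPoet's actual configuration.

```python
from typing import Dict, List, Tuple

# Hypothetical per-modality vocabulary sizes (illustrative only).
MODALITY_SIZES: List[Tuple[str, int]] = [
    ("text", 1000),
    ("visual", 4096),
    ("audio", 2048),
]


def build_offsets(sizes: List[Tuple[str, int]]) -> Dict[str, Tuple[int, int]]:
    """Map each modality name to (start_offset, size) in the shared id space."""
    offsets = {}
    start = 0
    for name, size in sizes:
        offsets[name] = (start, size)
        start += size
    return offsets


def to_unified(offsets: Dict[str, Tuple[int, int]], modality: str, local_id: int) -> int:
    """Convert a modality-local token id to an id in the shared vocabulary."""
    start, size = offsets[modality]
    if not 0 <= local_id < size:
        raise ValueError(f"{local_id} is out of range for {modality}")
    return start + local_id


def from_unified(offsets: Dict[str, Tuple[int, int]], unified_id: int) -> Tuple[str, int]:
    """Recover (modality, local_id) from an id in the shared vocabulary."""
    for name, (start, size) in offsets.items():
        if start <= unified_id < start + size:
            return name, unified_id - start
    raise ValueError(f"{unified_id} is outside the unified vocabulary")


if __name__ == "__main__":
    offsets = build_offsets(MODALITY_SIZES)
    uid = to_unified(offsets, "visual", 5)
    print(uid, from_unified(offsets, uid))
```

With a layout like this, a single output softmax over the shared id space can emit text, visual, or audio tokens, and the range an id falls into tells the decoder which tokenizer should decode it.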

2.2 Super-Resolution Module

Moreover, VideoPoet features a super-resolution module. As Image 2 demonstrates, this module efficiently upscales video resolutions. The architecture capitalizes on the division of labor across temporal and spatial dimensions, addressing high-resolution output tokens through a multi-head classification and merging strategy. This setup is vital for refining visual details while ensuring the model remains computationally efficient.
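One plausible reading of the "multi-head classification and merging" strategy is that each high-resolution token id from a very large vocabulary is split into several smaller factors, each factor is predicted by its own classification head, and the factors are then merged back into a single id. The sketch below shows only that split-and-merge arithmetic. The factor size of 512 and the two-factor setup are assumptions for illustration, and the function names are hypothetical.

```python
from typing import List


def split_token(token_id: int, factor_size: int, num_factors: int) -> List[int]:
    """Split a token id into `num_factors` digits in base `factor_size`.

    Each digit could be the target of a separate, smaller classification
    head. Digits are returned least-significant first.
    """
    if not 0 <= token_id < factor_size ** num_factors:
        raise ValueError("token_id does not fit in the factorized vocabulary")
    factors = []
    for _ in range(num_factors):
        factors.append(token_id % factor_size)
        token_id //= factor_size
    return factors


def merge_factors(factors: List[int], factor_size: int) -> int:
    """Merge per-head predictions back into a single token id."""
    token_id = 0
    for factor in reversed(factors):
        if not 0 <= factor < factor_size:
            raise ValueError("factor out of range")
        token_id = token_id * factor_size + factor
    return token_id


if __name__ == "__main__":
    # Assumed setup: two heads of 512 classes cover 512 * 512 = 262144 ids.
    parts = split_token(1000, factor_size=512, num_factors=2)
    print(parts, merge_factors(parts, factor_size=512))
```

The appeal of such a scheme is that two 512-way classifiers are far cheaper than one 262,144-way classifier, which is consistent with the efficiency goal described above.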

Furthermore, the training of VideoPoet occurs on a diverse dataset. This enriches its capacity to grasp and mimic a wide range of visual and auditory styles. Such a rich dataset is instrumental for the model to learn complex temporal dependencies within video data. The model’s ability to handle these dependencies is reflected in its proficiency at producing high-quality, continuous video content that aligns with the conditioning inputs, whether they be text descriptions or a series of images.

3. Examples and Applications

VideoPoet has extensive applications. For instance, Google Research demonstrates its capability to transform text into complex scenes like an astronaut’s dance on Mars, which showcases the model’s potential for entertainment and educational content creation.

The animation of classical artwork into a video sequence can serve as an innovative tool for digital museums and galleries. VideoPoet’s stylization techniques could revolutionize advertising and video production, offering new ways to rebrand existing content.

3.1 Long video

VideoPoet’s ability to produce long video sequences stands out. It crafts extended, coherent, and context-rich narratives. This capability involves understanding and maintaining the narrative flow over time, ensuring that each subsequent frame is a logical progression from the last.

The figure below is an example of this, where a robot character is depicted across a sequence of frames, showcasing the model’s ability to sustain narrative over an extended duration. This ability has significant implications for fields such as animation and filmmaking, where long-form content generation is essential.
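A simple way to picture long-video generation with an autoregressive model is chunked extension: generate a short clip, then repeatedly condition on the tail of what exists so far to produce the next chunk. The sketch below assumes that scheme; the article does not specify VideoPoet's exact procedure. `extend_video` and `toy_generator` are hypothetical names, and the generator is a stand-in for a real model.

```python
from typing import Callable, List


def extend_video(
    first_chunk: List[int],
    generate_chunk: Callable[[List[int]], List[int]],
    num_extra_chunks: int,
    context_len: int,
) -> List[int]:
    """Grow a token sequence chunk by chunk.

    Each new chunk is conditioned only on the last `context_len` tokens,
    which keeps the cost per step constant as the video gets longer.
    """
    if context_len <= 0:
        raise ValueError("context_len must be positive")
    tokens = list(first_chunk)
    for _ in range(num_extra_chunks):
        context = tokens[-context_len:]
        tokens.extend(generate_chunk(context))
    return tokens


def toy_generator(context: List[int]) -> List[int]:
    """Stand-in for a model: continues counting from the last context token."""
    last = context[-1]
    return [last + 1, last + 2]


if __name__ == "__main__":
    print(extend_video([0, 1], toy_generator, num_extra_chunks=3, context_len=2))
```

Because each step sees only a bounded window, coherence over long durations depends on how much of the scene the window captures, which is why sustaining a consistent character across many frames is a meaningful test.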

3.2 Image to video control

VideoPoet’s image-to-video control transforms static images into lively video sequences. This function allows for the animation of artworks, for example, as seen in the figure below with the Mona Lisa. The model adds a dimension of time to the image, creating a sequence where the painting’s subject can perform actions such as singing.

Moreover, this sophisticated capability leverages the model’s understanding of human expressions and movements to produce realistic animations that go beyond mere facial manipulations, breathing life into the once-immovable figures of classical art.

3.3 Camera motion

Simulating camera motion is a complex task that VideoPoet handles with finesse. By synthesizing camera movements, the model can create videos that mimic the effect of a camera panning, tilting, or zooming within a scene. Moreover, this capability enriches the storytelling by adding a cinematic quality to the videos, making them more engaging and realistic. The image below demonstrates this capability, where the model applies simulated camera motion to a static image, giving the illusion of dynamic perspective changes and depth, which are crucial in immersive video experiences.

3.4 Audio Generation

Audio generation forms a crucial part of the multimedia experience. VideoPoet skillfully generates audio that aligns with visual content. The model produces not just visually appealing content but also an accompanying soundscape that enhances the overall sensory experience. Moreover, this feature is essential for creating content where the audio cues are just as important as the visual ones, such as in movies, video games, and virtual reality applications.

4. Evaluation and Results

The evaluation rigorously analyzed VideoPoet’s text-to-video synthesis, comparing it to other models. Evaluators focused on the model’s accuracy in reflecting text prompts, a crucial aspect of the generative process.

Human evaluators rated the videos generated by VideoPoet alongside outputs from other models such as Phenaki, VideoCrafter, and Show-1. The evaluation criteria centered on text fidelity, meaning the accuracy with which the video reflects the descriptive text prompt. As depicted in the image, VideoPoet was preferred by a significant margin over the alternatives, with a substantial portion of evaluators showing no preference, and only a minority favoring the other models.
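Side-by-side preference results of this kind are usually reported as the share of votes in each of three buckets: prefer VideoPoet, no preference, or prefer the other model. The sketch below shows that tally under the assumption of one vote per rated pair. The labels and the toy votes are placeholders for illustration and are not the study's data.

```python
from collections import Counter
from typing import Dict, Iterable

ALLOWED_LABELS = ("videopoet", "no_preference", "other")


def preference_shares(votes: Iterable[str]) -> Dict[str, float]:
    """Return the fraction of votes that fall into each preference bucket."""
    counts = Counter(votes)
    unknown = set(counts) - set(ALLOWED_LABELS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no votes to tally")
    return {label: counts[label] / total for label in ALLOWED_LABELS}


if __name__ == "__main__":
    # Placeholder votes, not real evaluation results.
    toy_votes = ["videopoet", "videopoet", "no_preference", "other"]
    for label, share in preference_shares(toy_votes).items():
        print(f"{label}: {share:.0%}")
```

Reporting the "no preference" share separately matters here, since a large neutral bucket changes how a headline preference margin should be read.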

The results underscore VideoPoet’s ability to understand and visualize complex descriptions accurately, producing videos that align closely with the given text. This outcome not only highlights VideoPoet’s effectiveness in creating relevant and detailed videos but also showcases the model’s advanced understanding of language and visual representation, crucial for tasks requiring a high level of semantic coherence between text and generated video content.

Moreover, the evaluation process underscores VideoPoet’s potential as a tool for various applications where accurate text-to-video synthesis is paramount. This could include educational content where detailed visualizations of textual information are beneficial, or in entertainment, where scripts are brought to life with precise visual storytelling. The evaluators’ preferences indicate the model’s readiness for practical deployment and set a benchmark for future improvements in the field of video generation AI.

5. Future Directions

Looking forward, VideoPoet’s framework heralds new advancements in video generation. Future developments could enhance content control, resolution, realism, and even real-time video generation.

As technology progresses, VideoPoet may become a foundational tool for creators and technologists alike, paving the way for more sophisticated and creative applications of AI in video production.

A particular strength of Google’s model lies in its ability to generate high-fidelity, large, and complex motions. Their LLM formulation benefits from training across a variety of multimodal tasks with a unified architecture and vocabulary.

Moreover, VideoPoet exemplifies the cutting-edge of video generation technology, offering a glimpse into the future of AI-powered media creation. Its capabilities, from rendering text descriptions into full-fledged video scenes to animating classic images, highlight its potential impact across various domains, from education and entertainment to art and advertising.
