A Highly Capable Language Model

Phi-3 is a compact yet capable AI model, and fine-tuning has emerged as a powerful way to specialize it for specific tasks and domains.

The Phi-3 series targets efficient AI on mobile and low-power devices. Its 3.8-billion-parameter Phi-3-mini punches above its weight on benchmarks, and it can run locally on smartphones, which improves privacy and reduces data transmission.

Phi-3's transformer architecture is optimized for understanding and generating natural language. Variants such as Phi-3-mini-128k offer extended context for different use cases, and this flexibility enables real-time mobile applications without compromising speed or accuracy.

While Phi-3 excels at reasoning, math, and code tasks, it lags in factual knowledge retention. Fine-tuning can help address this limitation by adapting the model to specialized knowledge domains, and techniques like LoRA make that adaptation efficient.

This article will dive into the fine-tuning process for Phi-3. First, we’ll explore how to load and prepare the pre-trained model. Next, we’ll walk through data preprocessing steps to get datasets ready for fine-tuning.

The core section covers setting up the training configuration, initiating the fine-tuning process, and evaluating the resulting model’s performance. Finally, we’ll demonstrate how to deploy and run inference with the fine-tuned Phi-3 model.

1. Overview of Phi-3 Model

The Phi-3 series by Microsoft features compact language models optimized for mobile and other low-power devices. Notably, the 3.8-billion-parameter Phi-3-mini performs strongly on AI benchmarks despite being much smaller than competing models. It is also openly available on platforms such as Azure and Hugging Face (TECHCOMMUNITY.MICROSOFT.COM; Gadgets 360).

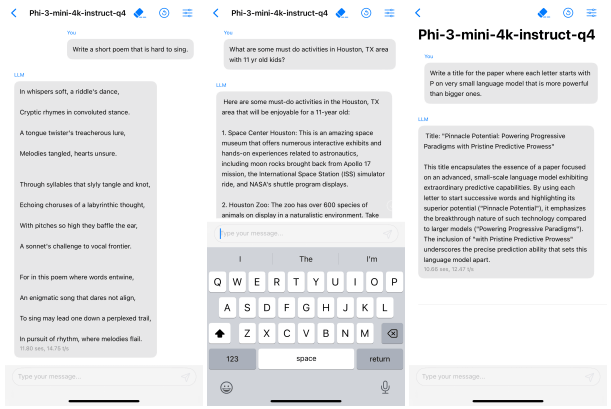

Phi-3-mini makes it possible to build mobile apps with AI features such as real-time language translation and smarter assistants. Because the model runs directly on the smartphone, these features work without relying on cloud computing, which benefits privacy and reduces data transmission (Techopedia).

Architectural Design and Performance

Phi-3 is engineered for efficient language comprehension and generation. It comes in multiple configurations, such as Phi-3-mini-4k and the extended-context Phi-3-mini-128k, whose names reflect their context windows (about 4,000 and 128,000 tokens). These variants cater to different deployment targets, from mobile devices to web browsers, and this flexibility allows deploying real-time applications without compromising speed or accuracy.

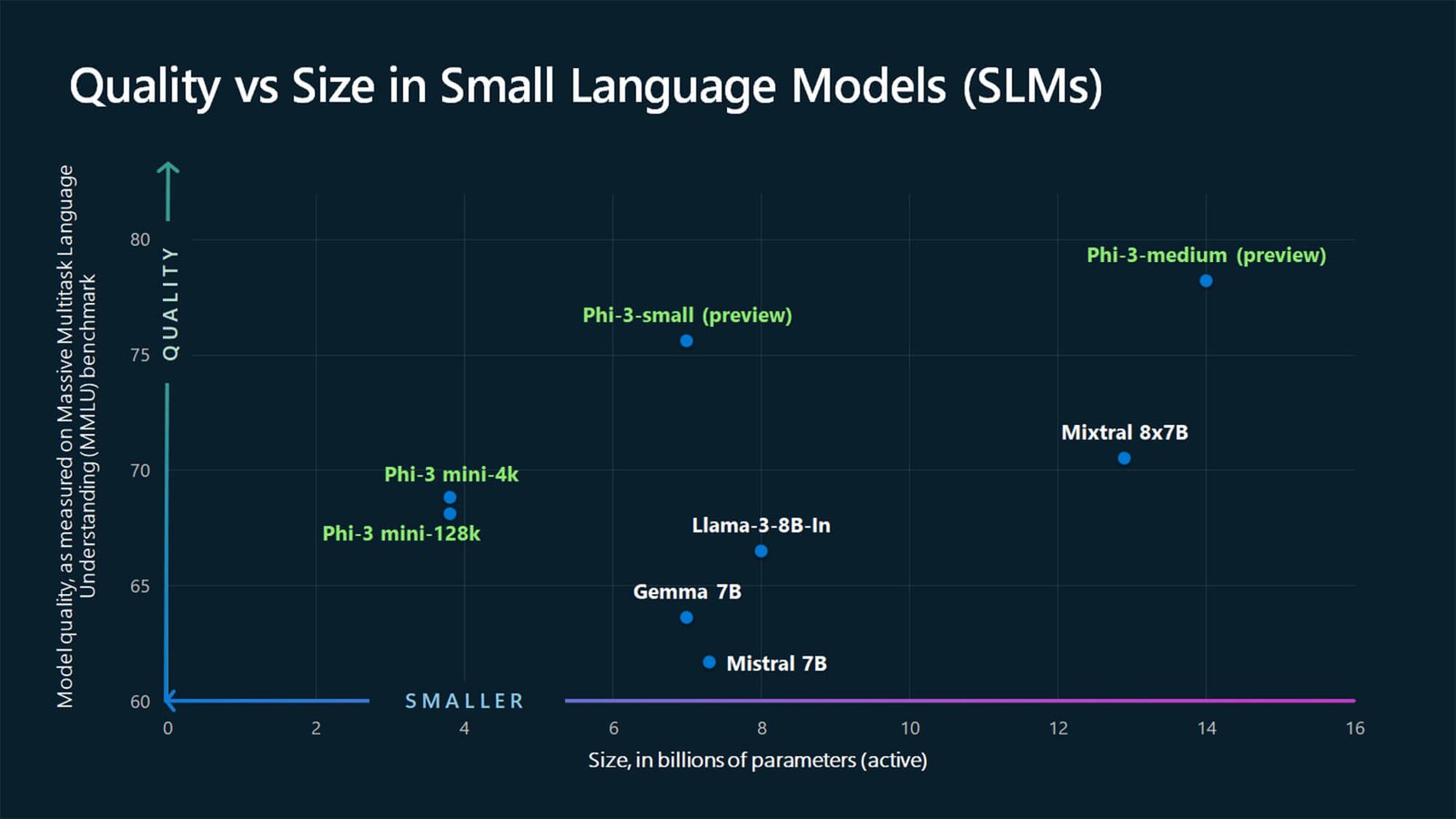

The image above compares quality against size for small language models. It shows three Phi-3 variants from Microsoft: Phi-3-mini-4k, Phi-3-mini-128k, and Phi-3-small. These models are compact, ranging from about 3.8 billion parameters (Phi-3-mini) to 7 billion (Phi-3-small).

Despite their small size, Phi-3 models achieve high quality scores on benchmarks and outperform some larger models such as Mistral 7B and Gemma 7B. The Phi-3-medium preview, with 14 billion parameters, has more capacity and scores higher still.

Real-World Applications

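Thanks to its compact size and strong performance, Phi-3-mini has been deployed on devices such as the iPhone 14. Microsoft's technical report describes a 4-bit quantized version that occupies about 1.8 GB and runs natively and fully offline at more than 12 tokens per second, which shows that on-device use is practical.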
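A rough estimate shows why a model of this size can fit on a phone at all. The sketch below multiplies Phi-3-mini's 3.8 billion parameters by the bytes each weight needs at a few common precisions. It counts weights only (no activations, KV cache, or runtime overhead), so treat the results as lower bounds rather than measured footprints.

```python
NUM_PARAMS = 3.8e9  # Phi-3-mini parameter count

# Bytes needed to store one weight at each precision.
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "4-bit": 0.5}


def weight_memory_gib(num_params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in GiB (2**30 bytes)."""
    return num_params * bytes_per_param / 2**30


for dtype, nbytes in BYTES_PER_PARAM.items():
    print(f"{dtype:>8}: {weight_memory_gib(NUM_PARAMS, nbytes):5.2f} GiB")
```

At 4 bits per weight the estimate comes to roughly 1.77 GiB, within reach of a modern smartphone, whereas the same weights in float32 need about 14 GiB.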

Benchmarking Success

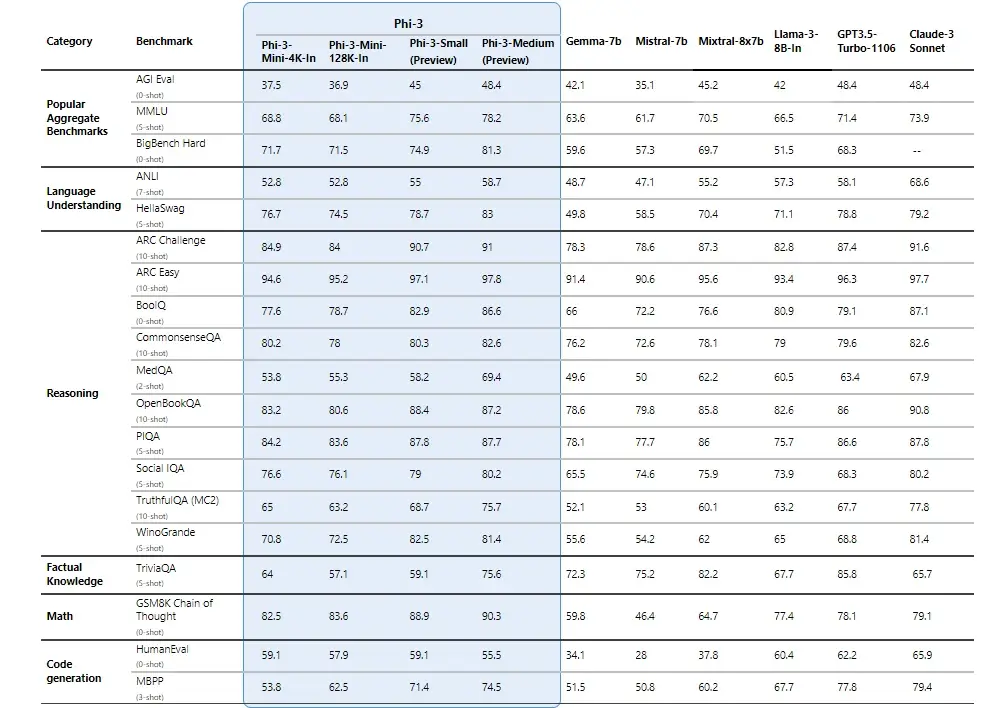

On benchmarks such as AGIEval, MMLU, and HellaSwag, Phi-3 performs comparably to larger models like Gemma-7b and Mixtral-8x7b.

While Phi-3-mini excels at benchmark tasks such as reasoning, math, and code generation, its small size limits how much factual knowledge it can retain, which hurts its performance on knowledge-heavy tasks.

2. Significance of Fine-Tuning

Fine-tuning allows us to specialize large language models like Phi-3 for specific tasks or domains. While these models demonstrate impressive general capabilities, fine-tuning enhances their performance on targeted use cases.

For instance, we may fine-tune Phi-3 on a dataset of medical texts. This specialization improves its understanding and generation of medical terminology and concepts.

Similarly, fine-tuning on legal documents can enhance Phi-3’s skills in understanding and generating legal language and reasoning. This makes the model more effective for legal research, contract analysis, or even drafting basic legal documents.

The process essentially adapts the model’s knowledge and behavior to a particular domain’s data distribution and language patterns. Fine-tuning leverages the model’s general foundations while refining it for domain-specific tasks.
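Concretely, supervised fine-tuning of a causal language model such as Phi-3 typically keeps the next-token prediction objective used in pre-training and evaluates it on the domain dataset instead. In the notation below (ours, not the article's), $\mathcal{D}$ is the set of domain sequences, $x_t$ is the $t$-th token of a sequence $x$, and $\theta$ are the model weights, initialized from the pre-trained checkpoint rather than at random:

$$
\mathcal{L}(\theta) = -\frac{1}{|\mathcal{D}|} \sum_{x \in \mathcal{D}} \sum_{t=1}^{|x|} \log p_\theta\left(x_t \mid x_{<t}\right)
$$

Minimizing this loss shifts the model's next-token probabilities toward the domain's data distribution, while starting from the pre-trained $\theta$ is what lets it reuse its general foundations.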

Importantly, fine-tuning is far cheaper than training a model from scratch, and parameter-efficient techniques like LoRA (Low-Rank Adaptation) cut the cost further by updating only a small fraction of the model's parameters while the original weights stay frozen.
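LoRA freezes a pre-trained weight matrix W of shape d × k and learns a low-rank update ΔW = BA, where B is d × r, A is r × k, and the rank r is much smaller than d and k. Each adapted matrix therefore adds only r(d + k) trainable parameters instead of d·k. The sketch below does that arithmetic; the square 3072 × 3072 projection is an assumption chosen to match Phi-3-mini's hidden size, and the ranks are illustrative values, not recommendations.

```python
def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Trainable parameters LoRA adds to one d x k weight: B is d x r, A is r x k."""
    return d * r + r * k


def full_params(d: int, k: int) -> int:
    """Parameters in the frozen d x k weight matrix W."""
    return d * k


d = k = 3072  # assumed square projection matching Phi-3-mini's hidden size

for r in (8, 16, 64):
    lora = lora_trainable_params(d, k, r)
    frac = lora / full_params(d, k)
    print(f"rank {r:>2}: {lora:,} trainable vs {full_params(d, k):,} frozen ({frac:.2%})")
```

Even at rank 64 the adapter adds only about 4% of the matrix's parameters. Because only A and B receive gradients, optimizer state shrinks accordingly, and B is typically initialized to zero so the adapted model starts out identical to the pre-trained one.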

Fine-tuning also allows continual learning and knowledge expansion for large language models. We can iteratively fine-tune on new data sources, building up the model’s capabilities over time.

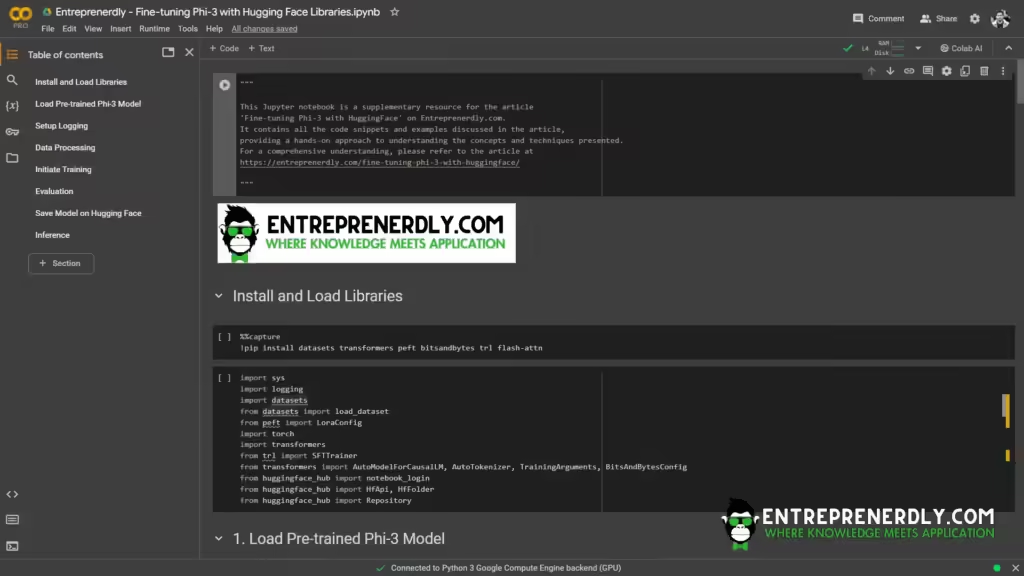

3. Fine-Tuning Phi-3 Code

Installing and Loading Libraries

```python
!pip install datasets transformers peft bitsandbytes trl flash-attn
```

```python
import sys
import logging

import datasets
from datasets import load_dataset
from peft import LoraConfig
import torch
import transformers
from trl import SFTTrainer
from transformers import AutoModelForCausalLM, AutoTokenizer, TrainingArguments, BitsAndBytesConfig
from huggingface_hub import notebook_login
from huggingface_hub import HfApi, HfFolder
from huggingface_hub import Repository
```

Load Pre-trained Phi-3 Model

First, specify the model’s checkpoint path. For Phi-3, two versions are available depending on the needed context length.

Here’s how to think about and execute this setup:

- Choosing the Correct Model Version: Phi-3-mini comes in two variants, one with a 4k-token context window and one with a 128k-token window. The choice depends on how long your inputs are. For standard tasks with short prompts, the 4k model is ideal; for long documents, deeper analysis, or extended dialogues, the 128k model is better suited.

- Optimizing Model Loading: Loading parameters balance performance and efficiency, which is vital in resource-limited environments. Choosing torch.bfloat16 instead of torch.float16 keeps the same 2-byte footprint per value but preserves float32's dynamic range, so training is less likely to overflow or underflow; the trade-off is coarser precision (fewer mantissa bits). bfloat16 requires hardware support, such as NVIDIA Ampere-generation or newer GPUs.

- Configuring the Tokenizer: Aligning tokenizer settings with the model's requirements keeps data processing predictable and prevents truncation errors and excessive memory use. Setting model_max_length caps the number of tokens per sequence, which bounds the memory and compute of each batch during training and inference. This matters when inputs vary widely in length.
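bfloat16 and float16 both use 16 bits but split them differently: float16 has 5 exponent and 10 mantissa bits, while bfloat16 has 8 exponent bits (like float32) and 7 mantissa bits. A pure-Python sketch using the standard struct module makes the trade-off visible. The helper names are hypothetical, and bfloat16 is emulated by truncating a float32 encoding, whereas hardware usually rounds to nearest, so treat it as an illustration rather than an exact bfloat16 implementation.

```python
import struct


def to_float16(x: float) -> float:
    """Round-trip x through IEEE 754 half precision (float16).

    struct's 'e' format raises an error when x exceeds float16's range.
    """
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_bfloat16_truncated(x: float) -> float:
    """Approximate bfloat16 by keeping the top 16 bits of x's float32 encoding.

    Truncation is a simplification; real hardware usually rounds to nearest.
    """
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))[0]


# Range: 70,000 exceeds float16's maximum (65,504) but fits in bfloat16.
print(to_bfloat16_truncated(70000.0))  # 69632.0: representable, but coarse
try:
    to_float16(70000.0)
except (OverflowError, struct.error):
    print("float16 overflow")

# Precision: 1 + 2**-10 survives float16's 10 mantissa bits but not bfloat16's 7.
tiny_step = 1 + 2**-10
print(to_float16(tiny_step), to_bfloat16_truncated(tiny_step))  # 1.0009765625 1.0
```

For training, the wider range usually matters more than the lost precision: gradients and activations that would overflow in float16 stay finite in bfloat16, which is why mixed-precision fine-tuning on supported GPUs often prefers it.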

```python
# Define the checkpoint path for the pre-trained Phi-3 model.
# Two versions are available: one with a maximum context of 4k tokens and another with 128k tokens.
# Uncomment the version you wish to use based on your context requirement.
checkpoint_path = "microsoft/Phi-3-mini-4k-instruct"
# checkpoint_path = "microsoft/Phi-3-mini-128k-instruct"

# Define model loading parameters that control how the model is loaded.
model_kwargs = dict(
    use_cache=False,  # Disable the KV cache: it only speeds up generation and is incompatible with gradient checkpointing during training.
    trust_remote_code=True,  # Allow running the custom modeling code shipped with the checkpoint on the Hugging Face Hub.
    attn_implementation="flash_attention_2",  # Faster, memory-efficient attention; needs the flash-attn package and an NVIDIA Ampere-or-newer GPU.
    torch_dtype=torch.bfloat16,  # Load weights in bfloat16: half the memory of float32 while keeping float32's dynamic range.
    device_map=None,  # No automatic placement; the Trainer moves the model to the GPU. Use device_map="auto" to spread layers across devices.
)

# Load the pre-trained model using the checkpoint and the loading parameters above.
model = AutoModelForCausalLM.from_pretrained(checkpoint_path, **model_kwargs)

# Load the tokenizer that matches the Phi-3 checkpoint.
# Tokenizers break text into tokens that the model can understand.
tokenizer = AutoTokenizer.from_pretrained(checkpoint_path)

# Cap inputs at 2048 tokens. This is below the 4k model's context window and keeps
# memory use per batch predictable; raise it if your data needs longer sequences.
tokenizer.model_max_length = 2048

# Pad with the 'unk' (unknown) token instead of the end-of-sequence token, so that
# real EOS tokens are not masked out of the loss together with the padding.
tokenizer.pad_token = tokenizer.unk_token
tokenizer.pad_token_id = tokenizer.convert_tokens_to_ids(tokenizer.pad_token)  # Numeric ID of the 'unk' pad token.
tokenizer.padding_side = 'right'  # Pad on the right, the usual choice for training (left padding is typical for batched generation).
```
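Why not simply reuse the end-of-sequence token for padding? Collators commonly build training labels by replacing every position whose ID equals pad_token_id with -100, the value PyTorch's cross-entropy loss ignores by default (transformers' DataCollatorForLanguageModeling does this, for example). If the pad token is also the EOS token, the real EOS at the end of each example is masked too, and the model never learns when to stop. The pure-Python sketch below shows the effect; right_pad_and_mask is a hypothetical helper, not a library function, and the token IDs are placeholders.

```python
from typing import List, Tuple

IGNORE_INDEX = -100  # torch.nn.CrossEntropyLoss skips targets equal to this by default


def right_pad_and_mask(
    token_ids: List[int], max_len: int, pad_id: int
) -> Tuple[List[int], List[int], List[int]]:
    """Truncate to max_len, pad on the right, and mask padding in the labels.

    Hypothetical helper that mimics a collator masking labels by pad ID.
    """
    ids = token_ids[:max_len]  # enforce the length cap (like model_max_length)
    n_pad = max_len - len(ids)
    input_ids = ids + [pad_id] * n_pad  # padding goes on the right
    attention_mask = [1] * len(ids) + [0] * n_pad  # 0 marks padding positions
    labels = [IGNORE_INDEX if t == pad_id else t for t in input_ids]
    return input_ids, attention_mask, labels


# Placeholder IDs: 0 plays the role of <unk>, 32000 the role of the EOS token.
UNK_ID, EOS_ID = 0, 32000
example = [1, 450, 3186, EOS_ID]  # a short sequence that ends with EOS

_, _, labels_unk_pad = right_pad_and_mask(example, max_len=6, pad_id=UNK_ID)
_, _, labels_eos_pad = right_pad_and_mask(example, max_len=6, pad_id=EOS_ID)
print(labels_unk_pad)  # [1, 450, 3186, 32000, -100, -100]: EOS is still a target
print(labels_eos_pad)  # [1, 450, 3186, -100, -100, -100]: EOS has been masked out
```

The same masking would also hide any genuine <unk> token in the data, which is rare in practice and a much smaller cost than losing every EOS.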

Configure Training Arguments

When setting up the training configuration for fine-tuning Phi-3, it’s important to consider both performance optimization and resource management.

Consider:

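- Disabling Evaluation: Turning off evaluation during training is not common practice, because you lose a validation signal, but it can significantly speed up training. This is particularly useful when you need quick iterations and frequent modifications.

- Cosine Learning Rate Scheduler: Unlike linear or exponential decay, a cosine scheduler lowers the learning rate along a half-cosine curve over the whole run: slowly at first, faster in the middle, and gently again near the end. This often improves convergence. With warmup enabled, the rate first ramps up linearly before the cosine decay begins.

- Gradient Checkpointing with No Reentrancy: Gradient checkpointing saves memory by keeping only a subset of activations during the forward pass and recomputing the rest during the backward pass, trading extra compute for lower memory use. Setting use_reentrant=False selects PyTorch's non-reentrant implementation, which PyTorch recommends and which handles more cases, such as inputs that do not require gradients.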
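The schedule can be sketched in a few lines of Python, using the peak learning rate (5.0e-06) and warmup_ratio (0.2) from the training configuration below. This is a simplified illustration of the general shape of a cosine schedule with linear warmup, similar to what Hugging Face's "cosine" scheduler produces, not the library's own code; cosine_lr_with_warmup is a hypothetical helper and total_steps is a made-up value.

```python
import math


def cosine_lr_with_warmup(step: int, total_steps: int,
                          peak_lr: float = 5.0e-06, warmup_ratio: float = 0.2) -> float:
    """Learning rate at a given optimizer step (hypothetical helper)."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        # Linear warmup from 0 up to peak_lr.
        return peak_lr * step / warmup_steps
    # Half-cosine decay from peak_lr down to 0 over the remaining steps.
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return peak_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


total_steps = 100  # hypothetical run length
for step in (0, 10, 20, 60, 100):
    print(f"step {step:>3}: lr = {cosine_lr_with_warmup(step, total_steps):.2e}")
```

With 100 total steps, the rate climbs to its peak at step 20, falls to half the peak at step 60, and reaches zero at step 100. The decay is flattest right after warmup and near the end, which is the "gentle" behavior described in the list above.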

```python
# Define training configuration settings
training_config = {
    "bf16": True,  # Train with bfloat16 mixed precision to speed up training and reduce memory usage
    "do_eval": False,  # Disable evaluation during training to speed up the process
    "learning_rate": 5.0e-06,  # Peak learning rate; deliberately low for fine-tuning
    "log_level": "info",  # Log at 'info' level: detailed without being too verbose
    "logging_steps": 20,  # Log training progress every 20 steps
    "logging_strategy": "steps",  # Log based on the number of training steps
    "lr_scheduler_type": "cosine",  # Cosine schedule: decay the learning rate gradually after warmup
    "num_train_epochs": 1,  # One pass over the data for a quick fine-tune
    "max_steps": -1,  # -1 means no step cap: training length is set by num_train_epochs
    "output_dir": "./checkpoint_dir",  # Directory for model checkpoints
    "overwrite_output_dir": True,  # Allow overwriting the output directory (useful for repeated runs)
    "per_device_eval_batch_size": 4,  # Evaluation batch size per device
    "per_device_train_batch_size": 4,  # Training batch size per device
    "remove_unused_columns": True,  # Drop dataset columns the model does not use
    "save_steps": 100,  # Save a checkpoint every 100 steps
    "save_total_limit": 1,  # Keep only the most recent checkpoint to save disk space
    "seed": 0,  # Seed for reproducibility
    "gradient_checkpointing": True,  # Recompute activations in the backward pass to save memory
    "gradient_checkpointing_kwargs": {"use_reentrant": False},  # Use PyTorch's non-reentrant checkpointing
    "gradient_accumulation_steps": 1,  # No gradient accumulation: update weights every step
    "warmup_ratio": 0.2,  # Linearly warm up the learning rate over the first 20% of training steps
}
```
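Step-based settings such as save_steps, logging_steps, and warmup_ratio are easier to reason about once you know roughly how many optimizer steps a run will take. The sketch below plugs in the batch size, gradient accumulation, and epoch values from training_config, together with a hypothetical dataset of 10,000 examples on a single GPU. optimizer_steps is a hypothetical helper, and the Trainer's exact count can differ slightly depending on how it batches and accumulates.

```python
import math


def optimizer_steps(num_examples: int, per_device_batch_size: int,
                    grad_accum_steps: int, num_devices: int, num_epochs: int) -> int:
    """Approximate number of weight updates in a training run (hypothetical helper)."""
    effective_batch = per_device_batch_size * grad_accum_steps * num_devices
    steps_per_epoch = math.ceil(num_examples / effective_batch)
    return steps_per_epoch * num_epochs


# Values from training_config, plus a hypothetical 10,000-example dataset on one GPU.
total = optimizer_steps(num_examples=10_000, per_device_batch_size=4,
                        grad_accum_steps=1, num_devices=1, num_epochs=1)
print(total)         # 2500 optimizer steps
print(total // 100)  # 25 checkpoints written (save_steps=100); save_total_limit=1 keeps the latest
print(total // 20)   # 125 log entries (logging_steps=20)
```

Raising gradient_accumulation_steps or adding GPUs grows the effective batch and shrinks the step count, so step-based intervals like save_steps may need adjusting alongside them.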
