Streamlining the Training and Inference of LLaMA 3

This is a comprehensive guide on fine-tuning Llama 3. Whether you’re aiming to improve the accuracy of the recently announced model by Meta or dapt it for specific tasks, the process of fine-tuning can enhance the model’s performance for your application.

In this implementation, we use the Unsloth library, enabling up to 2x speedier training and inference when fine-tuning Llama 3. Additionally, we leverage ALPACA for efficient data preparation.

The process involves adjusting Llama’s pre-trained parameters to better align with specific data or tasks. This practice helps the model perform more effectively in targeted contexts.

This guide features beginner-friendly code and clear commentary to ensure that even those new to machine learning can follow along. Furthermore, we’ll offer the complete end-to-end implementation in Google Colab.

Throughout this guide, we’ll explore how to set the environment, prepare the dataset, configure and fine-tune the model with LoRA, and deploy it for production use using Hugging Face. Moreover, each section is structured to build upon the last, offering a cohesive learning experience.

1. Models and Tools

1.1 Llama 3 Model Overview

Meta AI recently launched the Llama 3 model series, with configurations of 8 billion and 70 billion parameters, and plans for a future 400 billion parameter model.

This development highlights Meta’s push in open-source AI innovation, introducing capabilities for longer context windows and enhanced performance.

Llama 3 models, trained on 15 trillion tokens, are designed for high-quality, efficient performance, achieving notable benchmarks across various datasets. Read more about Llama 3 on Meta’s announcement article and get started with the model using the model with the article below.

Getting Started With LLama 3: The Most Advanced Open-Source Model

1.2 Unsloth Library

The Unsloth library is engineered to accelerate the fine-tuning of large language models like Llama 3, achieving up to 5x faster processing and 80% less memory usage compared to traditional methods.

It supports various models across different platforms, including single GPU setups, and integrates techniques like 4-bit and 16-bit LoRA (Low-Rank Adaptation) to optimize performance without sacrificing accuracy.

Unsloth is therefore a powerful tool for developers looking to enhance the speed and efficiency of their machine learning workflows. Detailed insights into Unsloth’s features are available on Unsloth’s GitHub page.

1.3 ALPACA for Data Preparation

ALPACA is employed specifically to enhance the data preparation phase for fine-tuning Llama 3. Furthermore, it structures training data by setting up templates that include clear instructions, context, and expected responses. This structuring is needed for fine-tuning llama 3 to handle tasks that require understanding and generating text based on specific instructions.

2. Setting Up the Environment

Check that your computer or server has a suitable GPU for machine learning tasks. Fine-tuning language models is resource-intensive, and having a GPU will significantly speed up the process.

We will utilize the Google Colab T4 GPU, which is available for free. For the full code implementation using the T4 GPU with Google Colab, please refer to the link provided later in the article.

2.1 Install Necessary Libraries

torch: It provides extensive tools and functions for building deep learning models, including comprehensive support for tensor operations and automatic differentiation.datasets transformers: These are two separate packages from the Hugging Face ecosystem. Thedatasetslibrary is designed for easily loading and processing datasets in a standardized way, facilitating both training and evaluation phases of model development. Thetransformerslibrary offers access to pre-trained models such as BERT, GPT, and more, which can be fine-tuned for a wide variety of NLP tasks.unsloth[colab-new] @ git+https://github.com/unslothai/unsloth.git: This installs the Unsloth library directly from its GitHub repository. Unsloth is specialized for optimizing the training and inference phases of machine learning models, particularly those in the Hugging Face Transformers library. It likely includes enhancements for faster computation and reduced resource consumption, although specific details would depend on the library’s documentation.xformers<0.0.26,trl,peft,accelerate,bitsandbyteswithout dependencies:xformers: part of Facebook’s PyTorch extensions. This library provides efficient transformers implementations, focusing on attention mechanisms like Flash Attention which can significantly speed up processing times.trl: Refers to a library involved with transformer-based reinforcement learning or similar tasks.peft: This is Hugging Face’s library for Parameter-Efficient Fine-Tuning, which helps in fine-tuning models in a resource-efficient manner.accelerate: This library from Hugging Face is designed to simplify running machine learning models on multi-GPU or multi-TPU setups, making it easier to scale up computations.bitsandbytes: Typically used for low-level optimizations, such as custom CUDA kernels for training neural networks more efficiently, possibly in terms of memory or computational speed.

%%capture

# Install Unsloth, Xformers for Flash Attention, torch, and huggingface related libraries

!pip install torch

!pip install datasets transformers

!pip install "unsloth[colab-new] @ git+https://github.com/unslothai/unsloth.git"

!pip install --no-deps "xformers<0.0.26" trl peft accelerate bitsandbytes

3. Load Pre-trained LLama 3 Model

3.1 Load Model and Set Initial Parameters

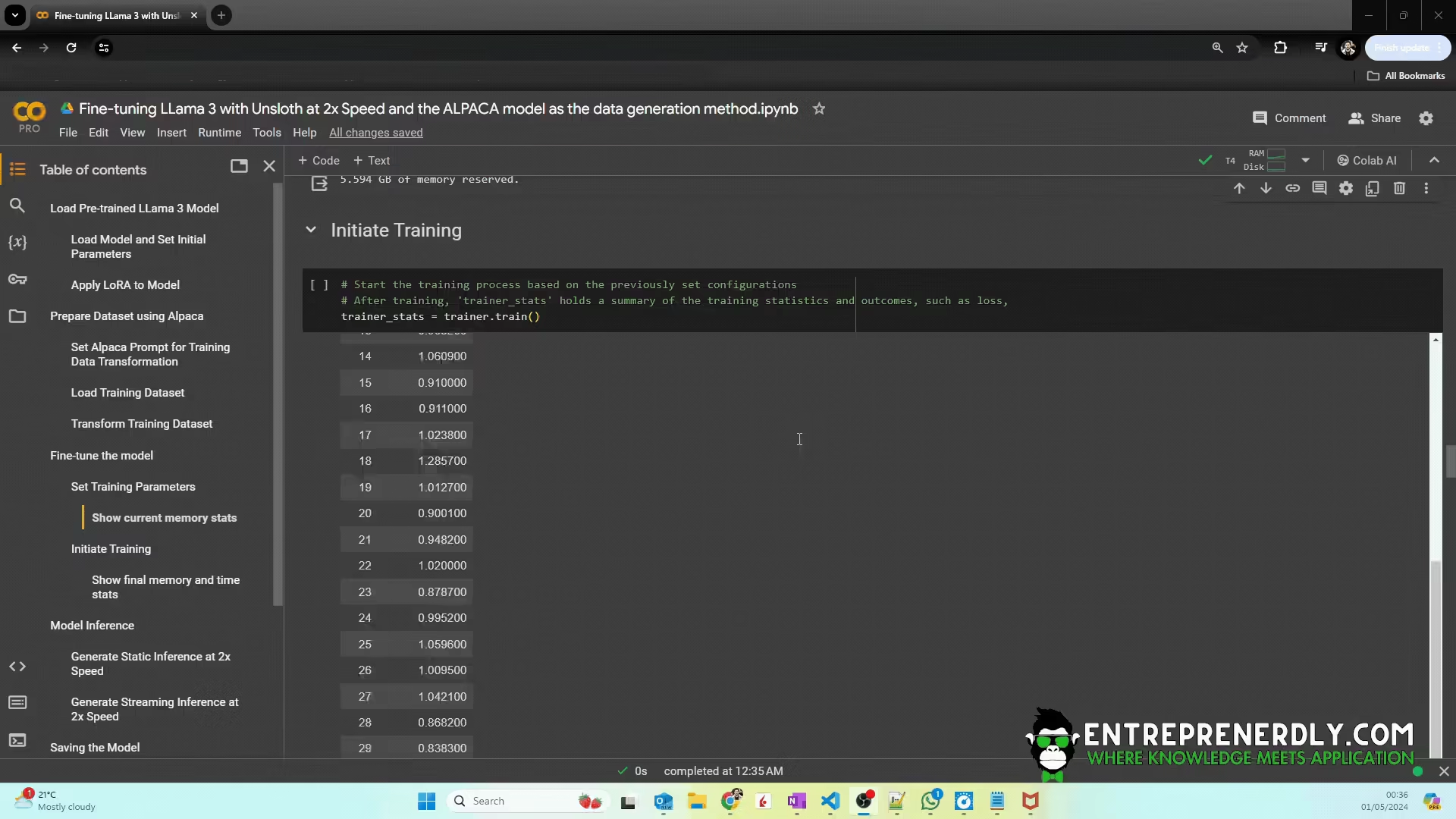

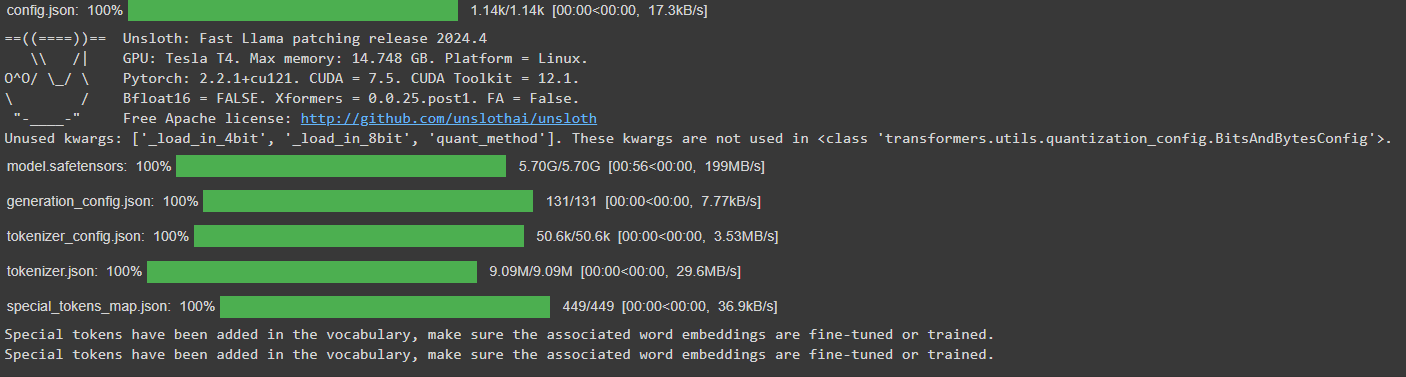

Fine-tuning Llama 3 begins by loading a pre-trained version using the Unsloth library, which ensures efficient handling and processing through the FastLanguageModel class.

The FastLanguageModel class streamlines the loading and initialization of pre-trained models. It supports advanced features such as Rotational Position Encoding (RoPE) (implemented via kaiokendev’s method). RoPE enhances the model’s understanding of token positional relationships, needed for tasks involving complex sequence dependencies.

Additionally, the class allows for the implementation of 4-bit quantization, significantly reducing the model’s memory footprint without compromising computational speed.

This feature is particularly valuable when deploying large models on systems with limited memory resources, ensuring that performance remains optimal even under hardware constraints.

from unsloth import FastLanguageModel

import torch

# This parameter specifies the maximum length of the input sequences

max_seq_length = 2048 # Choose any. Unsloth support RoPE Scaling internally via https://kaiokendev.github.io/til. (Technique used to enhance the model's understanding of token positions within sequences)

# This parameter defines the data type of the model’s weights and computational operations. If set to None, it implies automatic detection based on the available hardware.

dtype = None # None for auto detection. Float16 for Tesla T4, V100, Bfloat16 for Ampere+

# This parameter indicates whether the model should use 4-bit quantization for its operations and storage.

load_in_4bit = True # Use 4bit quantization to reduce memory usage. Can be False.

# pre-trained model and its associated tokenizer are being loaded using the FastLanguageModel.from_pretrained method from the Unsloth library.

# This method is designed to initialize the model and tokenizer with specific configurations tailored to optimize performance and resource utilization.

model, tokenizer = FastLanguageModel.from_pretrained(

model_name = "unsloth/llama-3-8b-bnb-4bit",

max_seq_length = max_seq_length,

dtype = dtype,

load_in_4bit = load_in_4bit)

3.2 Apply LoRA to Model

Low-Rank Adaptation (LoRA) is a cutting-edge technique utilized to enhance pre-trained models efficiently, specifically by adapting them without the need for extensive retraining. Here’s how LoRA is applied using the Unsloth library:

LoRA optimizes the fine-tuning process by introducing low-rank matrices that modify specific parts of the model’s weight matrices, such as those in the attention and feed-forward layers. This technique allows for the addition of trainable parameters that capture task-specific nuances without altering the entire network’s structure.

The application of LoRA involves defining the rank (r) for these adaptations, which directly influences the complexity and capacity of the parameter modifications. A higher rank allows for more detailed adaptations but at a cost of increased computational resources.

For example, setting r = 16 provides a balance between performance and efficiency. The FastLanguageModel.get_peft_model function from Unsloth applies these settings to the model, incorporating additional configurations like dropout rates and bias settings to further refine the fine-tuning process.

Lastly, the use of gradient checkpointing as part of Unsloth’s optimizations further reduces memory usage, often enabling training configurations that use up to 30% less VRAM and fitting larger batch sizes, effectively doubling the speed of model training and adaptation.

# Enhance the pre-trained model with specific low-rank adaptations using the Unsloth library's PEFT (Parameter Efficient Fine-Tuning) tools.

model = FastLanguageModel.get_peft_model(

model, # The original loaded model to be enhanced.

# 'r' defines the rank for the low-rank adaptations. This rank determines the complexity and the capacity of the parameter modifications

# A higher rank allows for more complex adaptations but increases computational cost.

r = 16, # Recommended values are 8, 16, 32, 64, 128 based on the desired trade-off between performance and efficiency.

# Target specific model layers for adaptations. These layers are typically where the model's computations are most intensive.

target_modules = ["q_proj", "k_proj", "v_proj", "o_proj", # Query, Key, Value, and Output projections in the attention mechanism.

"gate_proj", "up_proj", "down_proj"], # Additional layers that might benefit from parameter efficiency improvements.

# 'lora_alpha' sets the scaling factor for learning rates specifically applied to the LoRA parameters.

# This allows these parameters to learn at a different pace from the rest of the model.

lora_alpha = 16,

# 'lora_dropout' specifies the dropout rate for the LoRA layers. Supports any, but setting this to 0 optimizes the model by keeping all adaptations active during training.

lora_dropout = 0,

# 'bias' configures whether biases are included in the low-rank adapted layers. Supports any, but excluding biases ('none') can reduce overfitting and model complexity.

bias = "none",

# 'use_gradient_checkpointing' saves memory during training by storing only a subset of intermediate activations and recomputing the rest as needed.

# "unsloth" is a custom setting tailored to further optimize memory usage, particularly effective for long contexts.

# "unsloth" uses 30% less VRAM, fits 2x larger batch sizes.

use_gradient_checkpointing = "unsloth",

# 'random_state' ensures reproducibility by fixing the random number generation used in the adaptations.

random_state = 3407,

# 'use_rslora' indicates whether to use Rank Stabilized LoRA, which helps in maintaining the effectiveness of the low-rank adaptations over time.

use_rslora = False,

# 'loftq_config' allows for additional quantization configurations which can further reduce the model's memory footprint, though it's not used here.

loftq_config = None,

)

Unsloth 2024.4 patched 32 layers with 32 QKV layers, 32 O layers and 32 MLP layers.

4. Prepare Dataset Using Alpaca

4.1 Alpaca Prompt for Data Transformation

Proper dataset preparation is key for effective fine-tuning. ALPACA structures training data to enhance the model’s learning.

Set up the ALPACA prompt to format the dataset into a consistent structure that includes instructions, context, and expected responses.

This structured approach aids the model in understanding and responding accurately, producing better learning outcomes.

Also worth reading:

Getting Started With LLama 3: The Most Advanced Open-Source Model

Newsletter