An Open State-of-the-Art Vision-Language Foundation Model

Idefics2, the latest iteration of the Idefics family, builds on Idefics1 with enhanced Optical Character Recognition (OCR) abilities, an improved architecture, and stronger performance on Visual Question Answering (VQA) benchmarks. With 8 billion parameters and an open Apache 2.0 license, it provides a strong foundation for processing documents such as invoices, financial statements, and scanned reports. Fine-tuning Idefics2 further can be crucial for domain-specific tasks.

In testing, Idefics2 8B has performed strongly, often surpassing much larger models such as LLaVA-NeXT-34B and MM1-30B-chat, and it consistently ranks at the top of its size class on VQA benchmarks. This article walks through the fine-tuning process for Idefics2: the type of dataset required, the training strategies, and the improvements achieved.

2. Understanding Idefics2

The original paper published by the creators of Idefics2 discusses the construction and evaluation of vision-language models (VLMs), emphasizing critical design decisions and their justifications.

The authors highlight that many choices in VLM design lack experimental backing, making it challenging to identify factors that enhance model performance.

To address this, the authors conducted extensive experiments covering pre-trained models, architecture choices, data, and training methods, culminating in the development of the Idefics2 VLM, which achieves state-of-the-art performance within its size category.

2.1 Key Findings

Model Architecture:

- Cross-Attention vs. Fully Autoregressive:

  - Cross-attention performs better when unimodal pre-trained backbones are frozen.

  - Fully autoregressive architecture outperforms cross-attention when backbones are trainable.

  - Unfreezing pre-trained backbones in fully autoregressive models can lead to training instability, which can be mitigated using Low-Rank Adaptation (LoRA).

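As a rough illustration of why LoRA is a lightweight way to adapt otherwise frozen backbones, the sketch below counts the trainable parameters of a low-rank update for a single weight matrix. LoRA keeps the original matrix frozen and learns two small factors instead. The 4096×4096 layer size is an illustrative assumption, not a figure taken from the paper, and `lora_trainable_params` is a hypothetical helper name.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in the two low-rank factors A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


# Illustrative layer size (an assumption for this example only)
d_in = d_out = 4096

full_params = d_in * d_out  # parameters updated by full fine-tuning of this matrix
lora_params = lora_trainable_params(d_in, d_out, rank=8)

print(f"full: {full_params:,}  LoRA: {lora_params:,}  ratio: {lora_params / full_params:.2%}")
```

With a small rank, the update touches well under one percent of the parameters of this layer, which is what makes adapter-based training practical on limited hardware.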

Pre-Trained Backbones:

- Quality of the language model backbone has a higher impact on performance than the vision backbone.

- Changing to a better language model yields significant performance boosts.

- Switching to a better vision encoder also improves performance, although less significantly.

Efficiency Gains:

- Reducing the number of visual tokens using learned pooling improves both computational efficiency and performance.

- Preserving the original aspect ratio and image resolution enhances training and inference efficiency without degrading performance.

Trade-offs Between Compute and Performance:

- Splitting images into sub-images during training improves performance, especially for tasks requiring high resolution, at the cost of higher computational demands.
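The compute cost of image splitting can be made concrete with a small token-budget calculation. This is a minimal sketch, assuming each image is pooled to 64 visual tokens and that splitting produces four sub-images plus the original image; the `crops_per_image` default and the function name are assumptions made for illustration.

```python
TOKENS_PER_IMAGE = 64  # visual tokens per image after learned pooling


def visual_token_count(num_images: int, split_images: bool = False,
                       crops_per_image: int = 4) -> int:
    """Estimate how many visual tokens reach the language model.

    Assumption: with splitting enabled, each image contributes
    `crops_per_image` sub-images plus the original image.
    """
    images_seen = num_images * (crops_per_image + 1) if split_images else num_images
    return images_seen * TOKENS_PER_IMAGE


print(visual_token_count(1))                     # one image, no splitting
print(visual_token_count(1, split_images=True))  # one image, with splitting
```

Under these assumptions, splitting multiplies the visual sequence length by five, which is the source of the higher computational demand.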

2.2 Why Idefics2 is an important advancement

The latest iteration of Idefics integrates several enhancements that make it a powerful tool for a wide range of applications. These advancements are rooted in the model’s ability to process and understand multimodal data that combines text and images.

Enhanced Optical Character Recognition (OCR)

One of the standout features of Idefics2 is its enhanced OCR capabilities. This improvement enables the model to accurately transcribe text from images and documents, such as invoices, financial statements, and scanned reports.

Furthermore, the integration of advanced OCR technology allows for the automation of data entry, reducing manual labor and minimizing errors in financial reporting.

Improved Architecture

Idefics2 uses a fully autoregressive design. In the authors’ experiments, this architecture outperforms the cross-attention method when the pre-trained backbones are trainable, and the training instability that unfreezing the backbones can cause is mitigated with Low-Rank Adaptation (LoRA).

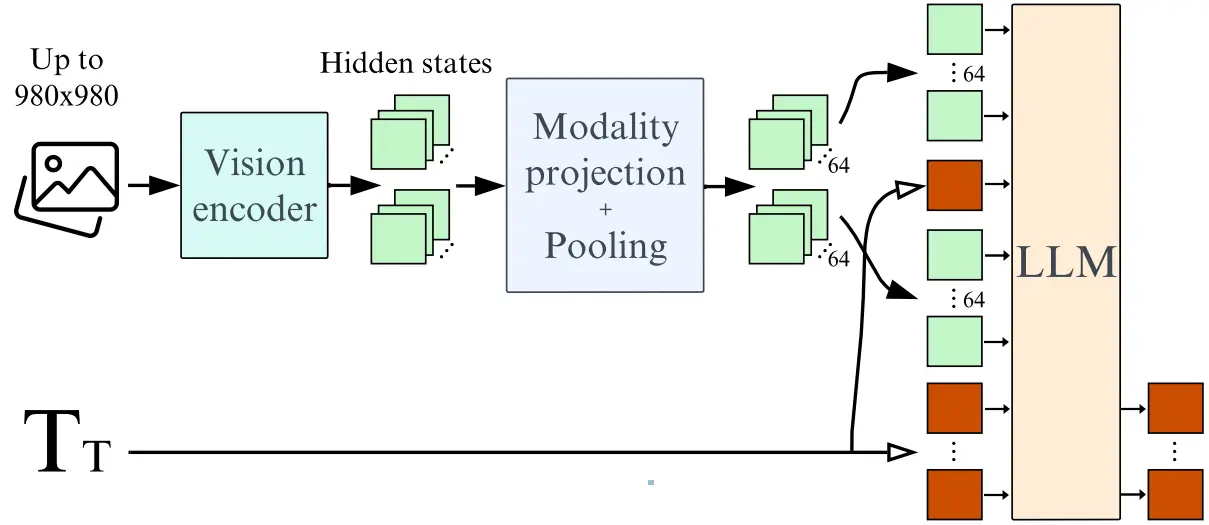

These architectural improvements contribute to Idefics2’s ability to deliver state-of-the-art results in various benchmarks, including Visual Question Answering (VQA). The model processes images up to 980×980 resolution, and its architecture involves a vision encoder, modality projection, pooling, and integration with a large language model:

Figure. 1: Idefics2's fully autoregressive architecture processes input images using the vision encoder. The visual features generated are then mapped (and optionally pooled) into the LLM input space to create visual tokens, with 64 tokens being standard. These visual tokens are concatenated (and potentially interleaved) with the text embeddings (shown as green and red columns). This combined sequence is fed into the language model (LLM), which then predicts the output text tokens.
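To illustrate what handling images "up to 980×980" while preserving the aspect ratio can look like, here is a minimal sketch that scales an image so its longest side fits within 980 pixels. It is not the actual Idefics2 image processor, which may apply additional rules; `fit_within` and its `max_side` parameter are hypothetical names for this example.

```python
def fit_within(width: int, height: int, max_side: int = 980) -> tuple[int, int]:
    """Scale (width, height) down so the longest side is at most `max_side`.

    The aspect ratio is preserved and smaller images are left untouched.
    """
    longest = max(width, height)
    if longest <= max_side:
        return width, height
    scale = max_side / longest
    return max(1, round(width * scale)), max(1, round(height * scale))


# A scanned page of 1701x2386 pixels keeps its portrait shape after resizing
print(fit_within(1701, 2386))
```

Keeping the aspect ratio avoids distorting text in scanned documents, which matters for OCR-heavy tasks.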

Robust Training and Fine-Tuning

The training process for Idefics2 involved large-scale datasets, such as OBELICS for interleaved web documents and LAION-COCO for image-caption pairs. This comprehensive pre-training allows the model to understand and interpret diverse data formats.

Additionally, the model was fine-tuned on The Cauldron, a collection of 50 manually curated datasets formatted for multi-turn conversations. This fine-tuning process tailored Idefics2 for specific tasks, enhancing its performance in text transcription, document understanding, and other domain-specific applications.

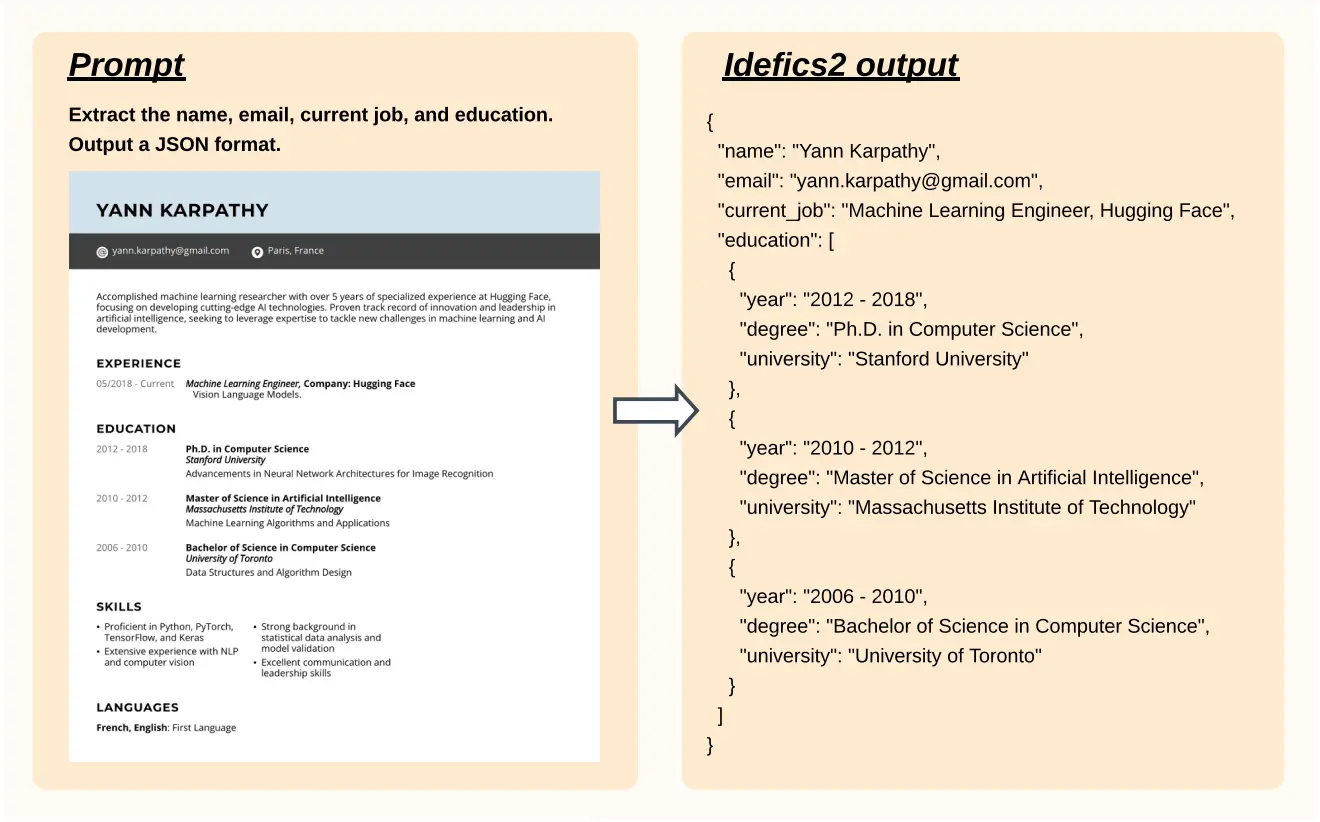

For example, the following prompt and response demonstrate Idefics2’s capability to analyze and extract structured information from a resume:

Figure. 2: Idefics2-chatty retrieves the requested information from the resume and arranges it in JSON format.

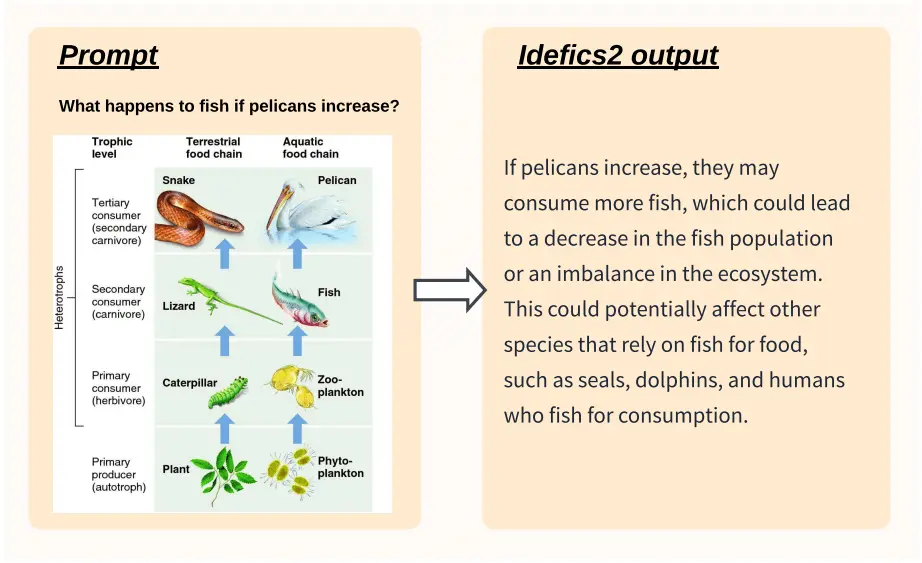

In another instance, Idefics2 can interpret ecological food chain scientific diagrams and generate accurate textual explanations:

Figure. 3: Idefics2-chatty responds to a question about a scientific diagram.

Performance on Benchmarks

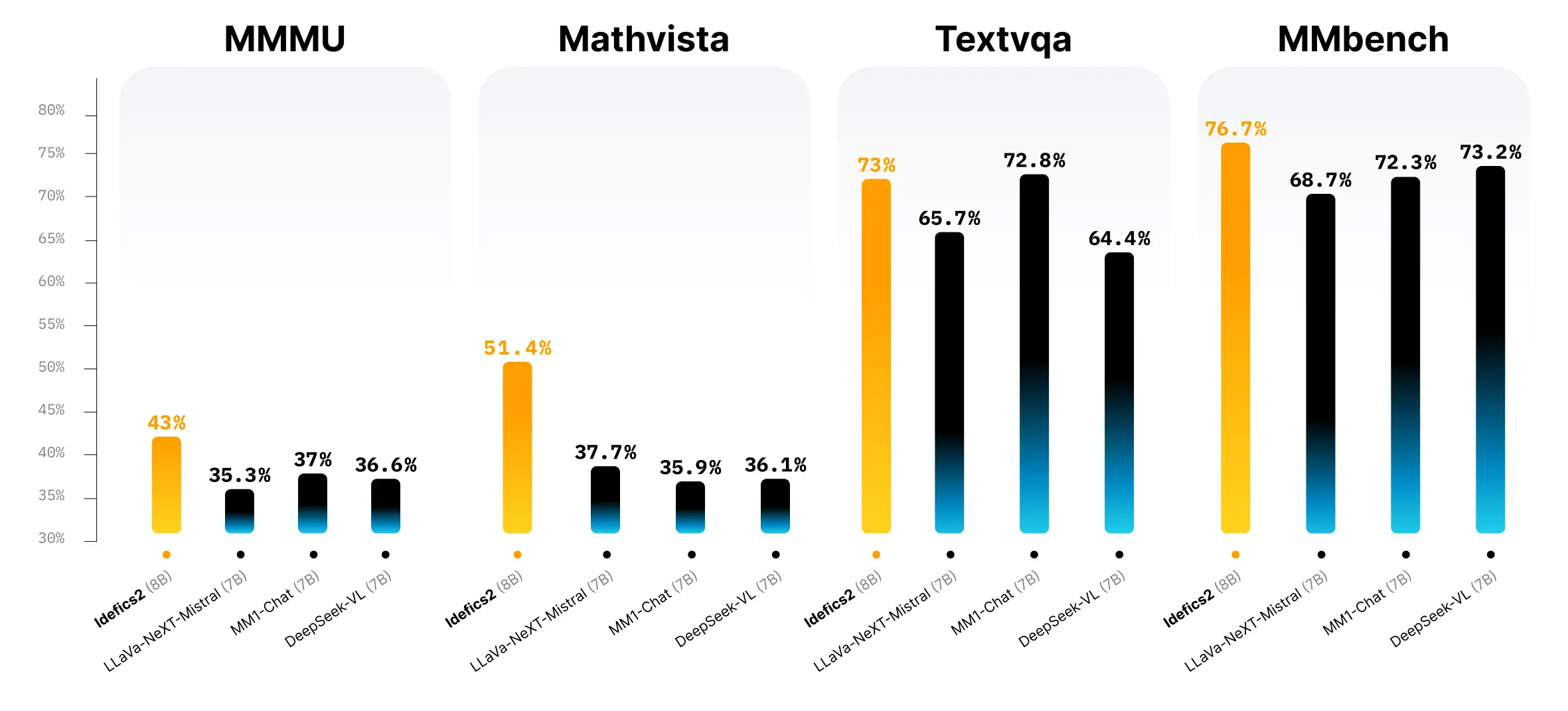

Idefics2 has demonstrated exceptional performance on several benchmarks, often surpassing larger models like LLava-Next-34B and MM1-30B-chat.

Key findings from the paper reveal that Idefics2 consistently ranks at the top of its class size in Visual Question Answering benchmarks.

In detailed evaluations, Idefics2 outperformed other models across various benchmarks such as MMMU, MathVista, TextVQA, and MMBench. The chart below illustrates Idefics2’s performance:

Figure. 4: Performance evaluation of Idefics2 compared to other models across various benchmarks, including MMMU, MathVista, TextVQA, and MMBench.

Versatility and Accessibility

With 8 billion parameters and an open Apache 2.0 license, Idefics2 is designed to be accessible and versatile. It is integrated into the Hugging Face Transformers library, making it straightforward to fine-tune for a wide range of multimodal applications.

Whether for automating financial workflows, enhancing data analysis, or improving customer service interactions, Idefics2 offers a scalable solution that meets diverse needs.

3. Fine-Tuning Process in Python

Fine-tuning Idefics2 involves several key steps to adapt the model for specific use cases. This section will outline the fine-tuning process for Document Question Answering, including setup, model preparation, data handling, training, and evaluation.

3.1 Setup and Environment Preparation

Install essential packages and log into the Hugging Face platform to access the model and datasets.

```python
!pip install -q git+https://github.com/huggingface/transformers.git
!pip install -q accelerate datasets peft bitsandbytes

from huggingface_hub import notebook_login

notebook_login()

from google.colab import drive

drive.mount('/content/drive')
```

3.2 Loading the Pre-trained Model and Dataset

The next step in fine-tuning Idefics2 involves loading the pre-trained model and the appropriate dataset. We use the Hugging Face hub to access the Idefics2 model, specifically the idefics2-8b variant.

Loading the Pre-trained Model

To load the pre-trained Idefics2 model, we will use the Hugging Face transformers library. The model will be configured to handle various fine-tuning strategies, ensuring it can be adapted to different computational resources.

Configuring the Model

The model can be fine-tuned using different strategies depending on the available computational resources:

- QLoRA: Quantized Low-Rank Adaptation, which loads the base model in 4-bit precision and trains LoRA adapters on top, reducing memory usage.

- Standard LoRA: Low-Rank Adaptation without quantization, which trains small adapter matrices while the base weights stay frozen.

- Full Fine-tuning: Updates all model weights for the highest accuracy, suitable for systems with abundant resources like A100 or H100 GPUs.
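A back-of-the-envelope estimate shows why the choice among the three strategies above depends on hardware. The sketch below computes the memory needed just to hold 8 billion weights at different precisions. It deliberately ignores activations, gradients, optimizer state, and quantization overhead, so real usage will be higher; `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Memory needed to store the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


NUM_PARAMS = 8_000_000_000  # Idefics2 has roughly 8 billion parameters

for label, bits in [("FP32", 32), ("FP16", 16), ("4-bit (QLoRA base weights)", 4)]:
    print(f"{label:>28}: {weight_memory_gb(NUM_PARAMS, bits):.1f} GB")
```

Under these simplifying assumptions, 4-bit loading cuts the weight footprint to a quarter of FP16, which is what makes QLoRA a reasonable default on a single consumer or Colab GPU.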

```python
import torch
from peft import LoraConfig
from transformers import AutoProcessor, BitsAndBytesConfig, Idefics2ForConditionalGeneration

# Device used for model training and inference (first CUDA GPU)
DEVICE = "cuda:0"

# Strategy flags: LoRA, QLoRA, or (both False) full fine-tuning
USE_LORA = False
USE_QLORA = True

# Load the pre-trained Idefics2 processor from the Hugging Face Hub
processor = AutoProcessor.from_pretrained(
    "HuggingFaceM4/idefics2-8b",
    do_image_splitting=False,  # Disable image splitting for simplicity
)

# Configure the model based on the LoRA and QLoRA flags
if USE_QLORA or USE_LORA:
    # Define the configuration for LoRA
    lora_config = LoraConfig(
        r=8,  # Rank of the LoRA updates
        lora_alpha=8,  # Scaling factor for the updates
        lora_dropout=0.1,  # Dropout rate for LoRA
        # Regex selecting projection layers in the text model and connector modules
        target_modules='.*(text_model|modality_projection|perceiver_resampler).*(down_proj|gate_proj|up_proj|k_proj|q_proj|v_proj|o_proj).*$',
        use_dora=not USE_QLORA,  # Disable DoRA when using QLoRA
        init_lora_weights="gaussian",  # Initialization method for LoRA weights
    )

    if USE_QLORA:
        # Define the configuration for QLoRA, including 4-bit quantization
        bnb_config = BitsAndBytesConfig(
            load_in_4bit=True,  # Enable 4-bit quantization
            bnb_4bit_quant_type="nf4",  # Use NF4 quantization type
            bnb_4bit_compute_dtype=torch.float16,  # Use FP16 for computation
        )

    # Load the Idefics2 model with the specified LoRA or QLoRA configuration
    model = Idefics2ForConditionalGeneration.from_pretrained(
        "HuggingFaceM4/idefics2-8b",
        torch_dtype=torch.float16,  # Use FP16 for model weights
        quantization_config=bnb_config if USE_QLORA else None,  # Apply QLoRA config if enabled
    )

    # Add the LoRA configuration to the model and enable adapters
    model.add_adapter(lora_config)
    model.enable_adapters()
else:
    # Load the Idefics2 model for full fine-tuning without LoRA or QLoRA
    model = Idefics2ForConditionalGeneration.from_pretrained(
        "HuggingFaceM4/idefics2-8b",
        torch_dtype=torch.float16,  # Use FP16 for model weights
        # Flash Attention 2 needs the flash-attn package and a supported GPU such as A100 or H100
        attn_implementation="flash_attention_2",
    ).to(DEVICE)  # Move the model to the specified device
```
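The `target_modules` pattern in the configuration above decides which layers receive LoRA adapters. The sketch below checks the same pattern against a few module names, assuming the pattern is applied as a full-match regular expression against each module's dotted name. The module names listed here are illustrative assumptions, not an inspected dump of the real model.

```python
import re

# Same pattern as in the LoRA configuration
PATTERN = '.*(text_model|modality_projection|perceiver_resampler).*(down_proj|gate_proj|up_proj|k_proj|q_proj|v_proj|o_proj).*$'

# Illustrative module names (assumed for this example)
candidates = [
    "model.text_model.layers.0.self_attn.q_proj",
    "model.text_model.layers.0.mlp.down_proj",
    "model.connector.modality_projection.gate_proj",
    "model.vision_model.encoder.layers.0.self_attn.q_proj",
    "model.text_model.layers.0.input_layernorm",
]

matched = [name for name in candidates if re.fullmatch(PATTERN, name)]

for name in candidates:
    status = "adapted" if name in matched else "frozen"
    print(f"{status:>8}  {name}")
```

Under this reading, projection layers in the language model and the connector are adapted, while the vision encoder and normalization layers are left untouched.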

Next, load the dataset you will use for fine-tuning. This example uses a subset of the DocVQA dataset and drops the columns that are not needed for training:

```python
from datasets import load_dataset

train_dataset = load_dataset("nielsr/docvqa_1200_examples", split="train")
train_dataset = train_dataset.remove_columns(['id', 'words', 'bounding_boxes', 'answer'])

eval_dataset = load_dataset("nielsr/docvqa_1200_examples", split="test")
eval_dataset = eval_dataset.remove_columns(['id', 'words', 'bounding_boxes', 'answer'])
```

```text
{'image': <PIL.JpegImagePlugin.JpegImageFile image mode=L size=1701x2386>,
 'query': {'de': 'Wo ist das ITC Life Sciences and Technology Centre?',
           'en': 'Where is the ITC Life Sciences and Technology Centre?',
           'es': '¿Dónde está el Centro de Ciencias y Tecnología de la Vida del ITC?',
           'fr': "Où se trouve le Centre des sciences de la vie et de la technologie de l'ITC?",
           'it': "Dov'è l'ITC Life Sciences and Technology Centre?"},
 'answers': ['bengaluru', 'Bengaluru', 'in Bengaluru']}
```

Figure 5: Example document image in the evaluation dataset.
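A record like the one above still has to be turned into a conversation before it can be used for training. The sketch below shows one way to do that, assuming the role/content message layout used by chat templates for this model family. The `build_messages` helper and the "Answer briefly." instruction are assumptions for illustration, not part of the dataset or of any library API.

```python
import random


def build_messages(example: dict, language: str = "en", rng=random) -> list[dict]:
    """Turn one DocVQA-style record into a user/assistant message pair."""
    question = example["query"][language]
    answer = rng.choice(example["answers"])  # pick one of the accepted answers
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": "Answer briefly."},  # assumed instruction
                {"type": "image"},  # placeholder for the document image
                {"type": "text", "text": question},
            ],
        },
        {"role": "assistant", "content": [{"type": "text", "text": answer}]},
    ]


example = {
    "query": {"en": "Where is the ITC Life Sciences and Technology Centre?"},
    "answers": ["bengaluru", "Bengaluru", "in Bengaluru"],
}
messages = build_messages(example)
print(messages[0]["content"][2]["text"])
```

Sampling one of the accepted answers per call is a design choice that exposes the model to the different surface forms of the same answer over multiple epochs.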
