Implementation of a Fine-tuned YOLOv8 for Stock Price Patterns Identification

Candlestick pattern recognition is a useful tool in technical analysis for traders. However, the manual identification of these patterns is time-consuming and prone to human error. This has led to skepticism about the reliability and efficiency of traditional methods. Can machine learning technology offer a better solution?

In this article, we will explore how to use the YOLO (You Only Look Once) v8 model to identify these patterns. YOLO is a SOTA (state-of-the-art) object detection algorithm known for its speed and accuracy. Unlike traditional methods that require multiple passes through an image, YOLO predicts bounding boxes and class probabilities in a single evaluation, making it incredibly fast.

We will demonstrate how to implement a fine-tuned YOLOv8 model specifically designed for recognizing stock price patterns. The model was trained on a dataset of 9,000 candlestick chart images and reached an overall training accuracy of 0.93. It can identify key patterns such as ‘Head and Shoulders,’ ‘Triangle,’ and ‘W-Bottom.’ What sets this approach apart is its ability to analyze both static images and live trading video data.

This article is structured as follows:

- What is YOLO and its value in Technical Analysis

- Python Implementation for Image and Video Inference

- Limitations and Concluding Thoughts

1. What is YOLO?

YOLO, short for “You Only Look Once,” is an object detection algorithm that produces all of its predictions for an image in a single forward pass of the network.

The Core Idea of YOLO

YOLO approaches object detection as a single regression problem, straight from image pixels to bounding box coordinates and class probabilities. This differs fundamentally from traditional methods that require multiple stages of processing.

How YOLO Works

YOLO divides the input image into an 𝑆×𝑆 grid. Each grid cell predicts a fixed number of bounding boxes and confidence scores for those boxes. The confidence score reflects the accuracy of the bounding box and whether the bounding box contains a known object (regardless of the class).

For each bounding box, YOLO predicts:

- Coordinates (x, y): The center of the bounding box relative to the grid cell.

- Width (w) and Height (h): The dimensions of the bounding box, normalized by the image width and height.

- Confidence Score: 𝑃(object) × IoU(pred, truth). This score combines the probability that the box contains an object with how well the predicted box overlaps the ground truth box.

- Class Probabilities: Conditional class probabilities given that an object is present in the box.
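To make the coordinate conventions above concrete, here is a minimal sketch that converts one prediction into pixel corner coordinates. It assumes the original YOLOv1 layout described in this section (cell-relative centers, image-relative sizes); the function name `decode_box` and the 448×448 image size are illustrative choices, not part of any library.

```python
def decode_box(row, col, x, y, w, h, grid_size, img_w, img_h):
    """Convert a YOLOv1-style box prediction to pixel corner coordinates.

    (x, y) are offsets of the box center inside grid cell (row, col), each in [0, 1].
    (w, h) are fractions of the full image width and height.
    """
    cell_w = img_w / grid_size
    cell_h = img_h / grid_size

    # Box center in pixels: cell origin plus the offset inside the cell.
    center_x = (col + x) * cell_w
    center_y = (row + y) * cell_h

    # Box size in pixels: normalized by the whole image, not by the cell.
    box_w = w * img_w
    box_h = h * img_h

    return (
        center_x - box_w / 2,
        center_y - box_h / 2,
        center_x + box_w / 2,
        center_y + box_h / 2,
    )


# A box centered in the middle cell of a 7x7 grid on a 448x448 image.
box = decode_box(row=3, col=3, x=0.5, y=0.5, w=0.2, h=0.4,
                 grid_size=7, img_w=448, img_h=448)
print([round(v, 1) for v in box])  # [179.2, 134.4, 268.8, 313.6]
```

Newer YOLO versions, including YOLOv8, decode boxes differently, so treat this only as an illustration of the scheme described here.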

Each grid cell thus predicts 𝐵 bounding boxes plus one set of class probabilities. If there are 𝐶 classes, the prediction for a single grid cell contains:

𝐵 × 5 + 𝐶 values

where 5 represents the 𝑥, 𝑦, 𝑤, ℎ coordinates and the confidence score. The full network output is therefore an 𝑆 × 𝑆 × (𝐵 × 5 + 𝐶) tensor.
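As a quick arithmetic check, the sketch below computes the output size under the assumption that each cell emits B × 5 + C values, as in the original YOLOv1 design. The helper names are hypothetical; the first setting (S = 7, B = 2, C = 20) is the configuration used in the YOLOv1 paper, and the second simply plugs in the three pattern classes mentioned in this article.

```python
def predictions_per_cell(num_boxes, num_classes):
    """Values predicted by one grid cell: 5 per box (x, y, w, h, confidence) plus class scores."""
    return num_boxes * 5 + num_classes


def output_shape(grid_size, num_boxes, num_classes):
    """Shape of the full prediction tensor for an S x S grid."""
    return (grid_size, grid_size, predictions_per_cell(num_boxes, num_classes))


# YOLOv1 on PASCAL VOC: S=7, B=2, C=20.
voc_shape = output_shape(grid_size=7, num_boxes=2, num_classes=20)
print(voc_shape)  # (7, 7, 30)

# Same grid with the three chart patterns named in this article.
pattern_shape = output_shape(grid_size=7, num_boxes=2, num_classes=3)
print(pattern_shape)  # (7, 7, 13)
```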

Intersection over Union (IoU)

IoU is a key metric in YOLO for determining the quality of the predicted bounding boxes. It is defined as:

IoU = Area of Overlap / Area of Union

where the overlap and the union are taken between the predicted box and the ground truth box. This value ranges from 0 to 1, where 1 indicates perfect overlap. During training, YOLO maximizes the IoU between the predicted boxes and the ground truth boxes.
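The IoU computation is short enough to write out. The following is a minimal sketch for axis-aligned boxes given as (x1, y1, x2, y2) corner coordinates; the corner format and the function name `iou` are assumptions made for this example.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes in (x1, y1, x2, y2) format."""
    # Corners of the intersection rectangle.
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])

    # Clamp at zero so that non-overlapping boxes give an empty intersection.
    inter_w = max(0.0, ix2 - ix1)
    inter_h = max(0.0, iy2 - iy1)
    intersection = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


# Two 2x2 boxes overlapping in a 1x1 square: 1 / (4 + 4 - 1).
print(round(iou((0, 0, 2, 2), (1, 1, 3, 3)), 3))  # 0.143
```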

Loss Function

YOLO’s loss function consists of three main components:

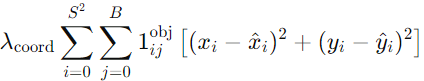

Localization Loss: Measures errors in the predicted bounding box coordinates. It is computed using the sum of squared errors.

Confidence Loss: Measures the accuracy of the confidence score prediction, using the sum of squared errors for the presence and absence of objects.

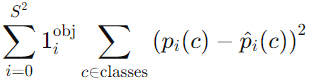

Class Probability Loss: Measures errors in the predicted class probabilities.

Where:

- λ_coord and λ_noobj are weights to balance the loss terms.

- 1_ij^obj is an indicator function that denotes if the 𝑗-th bounding box in cell 𝑖 contains an object.
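For reference, the loss from the original YOLO (v1) paper can be written out in full as below. It is included on the assumption that this is the formulation the figures in this section depict. Here C_i denotes the confidence score of cell i (not the number of classes), and hatted symbols are the ground truth targets. Later releases such as YOLOv8 use different loss terms, so read this as the classic formulation rather than a description of the fine-tuned model's training objective.

```latex
\begin{aligned}
\mathcal{L} ={}& \lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}}
  \left[ (x_i - \hat{x}_i)^2 + (y_i - \hat{y}_i)^2 \right] \\
&+ \lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}}
  \left[ \left(\sqrt{w_i} - \sqrt{\hat{w}_i}\right)^2 + \left(\sqrt{h_i} - \sqrt{\hat{h}_i}\right)^2 \right] \\
&+ \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left( C_i - \hat{C}_i \right)^2
 + \lambda_{\text{noobj}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{noobj}} \left( C_i - \hat{C}_i \right)^2 \\
&+ \sum_{i=0}^{S^2} \mathbb{1}_{i}^{\text{obj}} \sum_{c \in \text{classes}} \left( p_i(c) - \hat{p}_i(c) \right)^2
\end{aligned}
```

The first two lines are the localization loss, the third line is the confidence loss for boxes with and without objects, and the last line is the class probability loss.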

Advantages of YOLO

- Speed: YOLO processes images in real-time. It is capable of achieving high frame rates, making it suitable for applications requiring real-time detection.

- Accuracy: YOLO’s unified architecture means that it makes fewer background errors compared to other methods that look at regions of interest (RoI).

- Simplicity: YOLO frames object detection as a single regression problem, simplifying the pipeline.

Evolution from YOLOv1 to YOLOv9

- YOLOv1: Introduced the concept of single-stage detection.

- YOLOv2: Improved bounding box prediction and introduced anchor boxes.

- YOLOv3: Enhanced with deeper networks and feature pyramids for multi-scale detection.

- YOLOv4: Included various improvements like CSPDarknet backbone and PANet.

- YOLOv5: Introduced more efficient and flexible implementations.

- YOLOv6 to YOLOv8: Continued refinements in speed, accuracy, and ease of use, with better handling of small objects and improved training processes.

- YOLOv9: The latest iteration at the time of writing. It introduces Programmable Gradient Information (PGI) and the Generalized Efficient Layer Aggregation Network (GELAN) architecture, which aim to improve accuracy and parameter efficiency.

Fine-Tuning YOLO

Fine-tuning YOLO involves adapting the pre-trained model to a specific dataset, for example to enhance its ability to recognize custom patterns like candlestick patterns in stock charts.

To fine-tune YOLO for candlestick pattern recognition, follow these steps:

- Prepare the Dataset: Gather and annotate a dataset of candlestick chart images. Ensure the dataset is diverse to cover various patterns and market conditions.

- Modify the Model: Adjust the model’s architecture and parameters to better suit the new task. This includes setting the number of classes and tweaking hyperparameters.

- Train the Model: Use the annotated dataset to train the model. This involves several epochs of training where the model’s weights are adjusted to minimize detection loss.

- Evaluate and Fine-Tune: Evaluate the model’s performance on a validation set. Fine-tune the model based on the results to improve accuracy and reduce overfitting.
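The steps above can be sketched with the ultralytics Python API. This is a minimal outline under stated assumptions, not the training script used for the model in this article: the dataset path, the `yolov8n.pt` starting checkpoint, the epoch count, and the helper names `build_dataset_yaml` and `fine_tune` are all hypothetical, and the class list is simply the three patterns named earlier.

```python
# Class names taken from the patterns mentioned in this article; the label
# set of the actual fine-tuned model may differ.
CLASS_NAMES = ["Head and Shoulders", "Triangle", "W-Bottom"]


def build_dataset_yaml(root):
    """Return the text of a minimal Ultralytics-style dataset config.

    Assumes images live under <root>/images/train and <root>/images/val,
    with matching YOLO-format label files.
    """
    lines = [
        f"path: {root}",
        "train: images/train",
        "val: images/val",
        f"nc: {len(CLASS_NAMES)}",
        "names:",
    ]
    lines += [f"  {index}: {name}" for index, name in enumerate(CLASS_NAMES)]
    return "\n".join(lines) + "\n"


def fine_tune(data_yaml_path, epochs=50, imgsz=640):
    """Fine-tune a pre-trained YOLOv8 checkpoint and return validation metrics."""
    # Imported here so the config helper above works without ultralytics installed.
    from ultralytics import YOLO

    model = YOLO("yolov8n.pt")  # pre-trained weights as the starting point
    model.train(data=data_yaml_path, epochs=epochs, imgsz=imgsz)
    return model.val()  # evaluate on the validation split


config_text = build_dataset_yaml("datasets/candlesticks")
print(config_text)
```

To run training, one would save `config_text` to a file such as `candlesticks.yaml` and call `fine_tune("candlesticks.yaml")`. With this API the number of classes comes from the dataset config, so no manual change to the model architecture is needed for that step.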

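For a detailed guide on fine-tuning object detection models, refer to this Colab notebook. It provides an example of fine-tuning YOLOS for object detection on a custom dataset. Note that YOLOS is a transformer-based detector rather than a member of the YOLO family, so the notebook is best read as a general illustration of the fine-tuning workflow.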

2. Python Implementation

2.1 Setting Up the Environment

We will use the ultralytics library, which provides state-of-the-art implementations of the YOLO algorithm. The ultralyticsplus library offers additional utilities and enhancements. We also need OpenCV for image and video processing, and requests for handling HTTP requests.

```python
# Install PyTorch, an open-source machine learning library
!pip install torch

# Install the specific version of the ultralytics library for the YOLO model
!pip install ultralytics==8.0.43

# Install the ultralyticsplus library for additional utilities
!pip install ultralyticsplus==0.0.28

# Install OpenCV, a library for computer vision tasks
!pip install opencv-python

# Install requests, a library for making HTTP requests
# (quoted so the shell does not treat ">" as output redirection)
!pip install "requests>=2.23.0"

# Install the headless version of OpenCV for environments without display capabilities
!pip install opencv-python-headless
```
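Because the ultralytics and ultralyticsplus versions are pinned, it can help to confirm what actually got installed before moving on. The snippet below is an optional sketch using only the standard library; the helper name `installed_versions` and the package list are illustrative.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


REQUIRED = ["torch", "ultralytics", "ultralyticsplus", "opencv-python-headless", "requests"]

for name, version in installed_versions(REQUIRED).items():
    print(f"{name}: {version or 'not installed'}")
```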

We then need to import the necessary libraries and functions:

```python
import os
from base64 import b64encode

import cv2
import gdown
import requests
from google.colab.patches import cv2_imshow  # Colab-only helper for displaying images
from IPython.display import HTML, display
from ultralyticsplus import YOLO, render_result
```
