Experience the Future of Intelligent Investing Today

Recent advances in artificial intelligence have paved the way for innovative applications in image editing. One such breakthrough is the use of Multimodal Large Language Models (MLLMs) to guide instruction-based image editing, offering a new level of interactivity and precision.

Furthermore, Multimodal Large Language Models, like the one developed by Apple, are at the forefront of this technological evolution. These models combine textual and visual information to understand and execute complex editing instructions.

The MGIE (MLLM-Guided Image Editing) approach leverages these models to interpret user commands and apply the desired changes to images. This method has shown remarkable improvements across editing tasks, ranging from Photoshop-style alterations to global photo optimization and local object adjustments.

Moreover, the MGIE framework not only enhances the quality of edits but also maintains competitive efficiency. This makes MGIE a practical solution for real-world applications. Its success is evident in its performance across different benchmarks, outperforming existing methods in both automatic metrics and human evaluations.

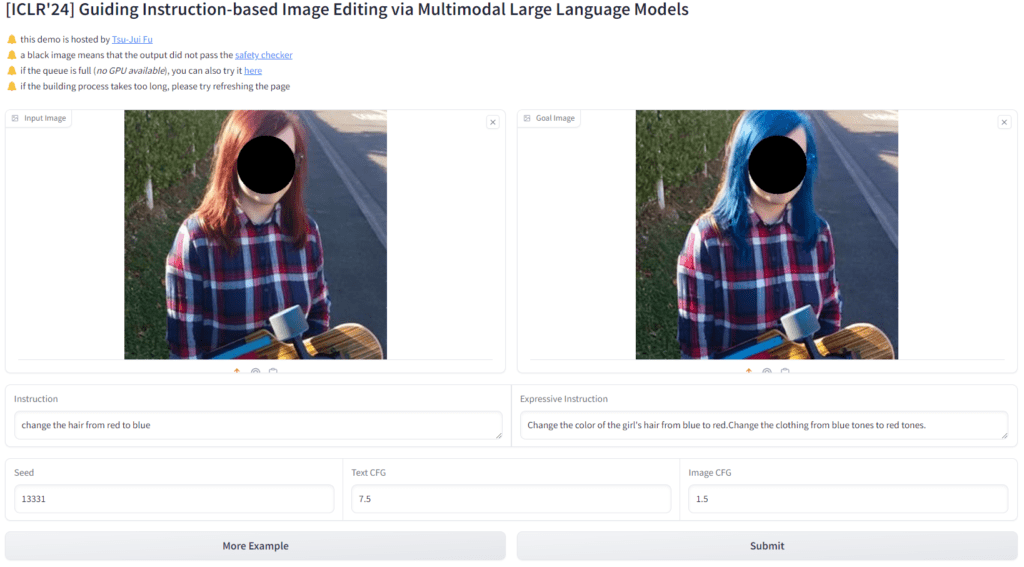

Figure 1: Gradio app interface on a mobile device, showcasing the image upload feature and submission button ready for live user interaction.

Figure 2: A side-by-side evaluation of InsPix2Pix, LGIE, and MGIE. MGIE renders the “lightning” and its reflection, and only MGIE removes the targeted Christmas tree without affecting the surroundings. In photo optimization, MGIE, unlike its counterparts, accurately brightens and sharpens the image. Lastly, MGIE applies glaze only to the donuts, avoiding the overapplication seen in the other models.

The proposed method, MLLM-Guided Image Editing (MGIE), leverages the expressive power of MLLMs to generate detailed instructions for image editing tasks.

MGIE is designed to handle a wide range of editing tasks, including Photoshop-style modifications, global photo optimization, and local object alteration.

If you want to play around with the model, please visit the Hugging Face Space:

Figure 3: A screenshot of the Gradio web interface, a user-friendly platform for submitting image editing instructions and viewing the resulting transformations. Access the Gradio Playground here.

Building on the foundation of MLLMs, MGIE introduces a highly adaptable framework for executing intricate image transformations.

By harnessing the power of MLLMs, MGIE is able to comprehend and implement nuanced editing instructions. This allows for a seamless translation of user commands into visually stunning outcomes.

This approach not only streamlines the editing process but also opens up new possibilities for creative expression in digital imagery.

With MGIE, users can expect a more intuitive and efficient editing experience, revolutionizing the way we interact with and manipulate images in the digital age.

Figure 4: Overview of MLLM-Guided Image Editing (MGIE), which leverages MLLMs to enhance instruction-based image editing. MGIE learns to derive concise expressive instructions and provides explicit visual-related guidance for the intended goal. The diffusion model is trained jointly and performs image editing with the latent imagination through the edit head in an end-to-end manner. Icons in the figure mark whether each module is trainable or frozen.

The model depicted in Figure 4 represents a sophisticated approach to instruction-based image editing using Multimodal Large Language Models (MLLMs).

At its core, the process begins with an input instruction and an image. The MLLM, equipped with summarization capabilities (Summ), distills the verbose instruction into a more focused expressive instruction.

This is then fed into the MLLM along with an adapter and an embedding module, which prepares it for image transformation.

Subsequently, the visual tokens generated from this process are routed through an Edit Head that interfaces with a Diffusion model.

The Diffusion model, responsible for the heavy lifting of pixel manipulation, takes cues from the processed instructions to produce the final edited image that aligns with the user’s initial request.
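The stages described above can be sketched as a chain of plain functions. This is a toy illustration of the data flow only, not the MGIE implementation: every function name here is hypothetical, and each stage is a trivial stand-in (word filtering for the summarizer, word lengths for the visual tokens, averaging for the edit head, a brightness shift for the diffusion model).

```python
from typing import List

# Toy grayscale image: rows of pixel values in [0, 1].
Image = List[List[float]]


def summarize(instruction: str, max_words: int = 5) -> str:
    """Hypothetical stand-in for the summarizer (Summ): drop filler words."""
    filler = {"please", "could", "you", "the", "a", "an", "just", "so", "that"}
    words = [w.strip(".,!?").lower() for w in instruction.split()]
    kept = [w for w in words if w and w not in filler]
    return " ".join(kept[:max_words])


def visual_tokens(expressive: str) -> List[float]:
    """Hypothetical stand-in for the MLLM's visual tokens: one number per word."""
    return [len(word) / 10.0 for word in expressive.split()]


def edit_head(tokens: List[float]) -> float:
    """Hypothetical stand-in for the edit head: pool tokens into one guidance value."""
    return sum(tokens) / len(tokens) if tokens else 0.0


def diffusion_edit(image: Image, guidance: float) -> Image:
    """Hypothetical stand-in for the diffusion model: shift brightness, clamp to [0, 1]."""
    return [[min(1.0, max(0.0, px + guidance)) for px in row] for row in image]


def run_pipeline(instruction: str, image: Image) -> Image:
    """Instruction + image -> expressive instruction -> tokens -> guidance -> edit."""
    expressive = summarize(instruction)
    tokens = visual_tokens(expressive)
    guidance = edit_head(tokens)
    return diffusion_edit(image, guidance)


if __name__ == "__main__":
    edited = run_pipeline("Please make the sky brighter.", [[0.2, 0.9]])
    print(edited)
```

Keeping each stage as a separate function mirrors the modular layout in the figure: in a real system, any one stand-in could be swapped for a trained component without changing how the stages connect.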

This innovative pipeline showcases the potential of leveraging language models to intuitively guide and execute complex image editing tasks, a testament to the evolving intersection of AI and creative digital media.

You can read the entire paper below or by visiting the arXiv publication.

ICLR Paper: The ICLR 2024 conference paper, featuring an abstract and introductory figures that present the guiding principles of instruction-based image editing via MLLMs. You can find the paper here.
