A Step-by-Step Guide to Optimizing LayoutLMv2 for Enhanced Domain-Specific Document Question Answering Efficiency

Document question answering (DQA) plays a key role in many tasks because it lets us retrieve information from documents efficiently. However, traditional DQA models often struggle with complex document structures and visual elements.

To address this challenge, Microsoft’s LayoutLMv2 has emerged as a strong tool, offering improved DQA capabilities by incorporating layout understanding. This article provides a step-by-step guide to fine-tuning LayoutLMv2 for domain-specific DQA tasks.

We’ll delve into the model’s architecture, establish the necessary technical background, and walk through each step, from environment setup to training your customized DQA model.

By leveraging LayoutLMv2’s ability to process both text and layout information, and its OCR-enhanced understanding, we’ll gain the ability to build DQA models that excel at extracting information from specific document types.

This article is structured as follows:

- Understanding LayoutLMv2

- Preparing the Dataset

- Model Fine-tuning

- Inference and Evaluation

1. Understanding LayoutLMv2

LayoutLMv2 is a state-of-the-art pre-trained model designed for visually-rich document understanding tasks. It builds upon its predecessor, LayoutLM, by introducing novel pre-training objectives and architectural enhancements that significantly improve its ability to process both textual content and document layout information.

1.1 Multimodal Architecture:

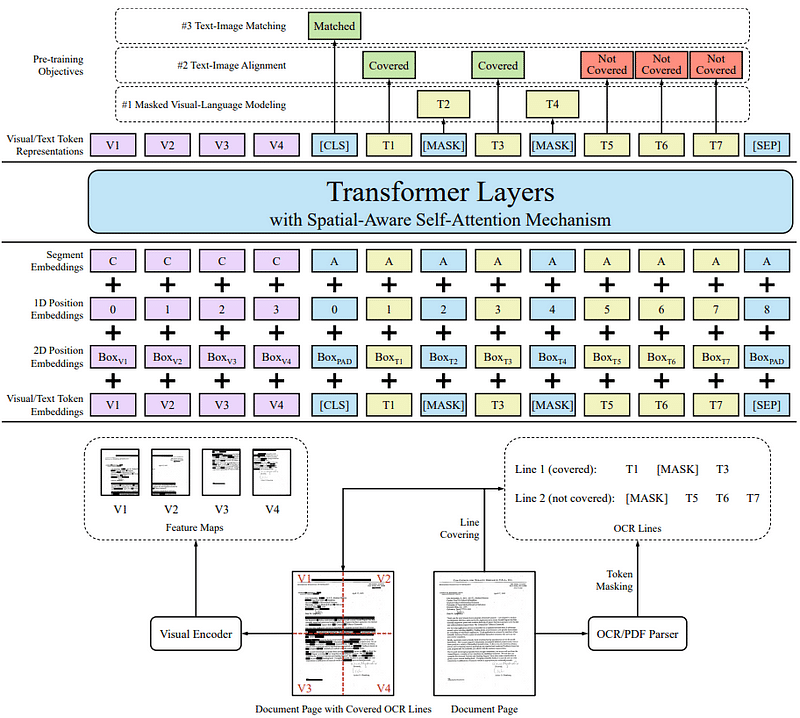

The key advancement in LayoutLMv2 lies in its two-stream multimodal Transformer architecture. This architecture processes textual and visual (image) modalities separately but in parallel, allowing for a deeper cross-modal interaction. The model is pre-trained on tasks designed to enhance the interaction among text, layout, and image features, including:

- Masked Visual-language Modeling (MVLM): A continuation of the masked language modeling (MLM) objective, adapted to handle visual features by masking text and predicting the masked words based on the unmasked context and visual features.

- Text-image Alignment (TIA): A novel pre-training task aiming to align text tokens with their corresponding image regions, enhancing the model’s ability to link visual features with text.

- Text-image Matching (TIM): Ensuring the model learns to match text content with its corresponding document image, reinforcing the association between text and visual modalities.
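
To make the first objective more concrete, here is a toy sketch of MVLM-style masking. It is not the pre-training code from the paper: the helper name `mask_for_mvlm`, the 15% default mask rate, and the sample tokens and boxes are assumptions chosen for illustration. What it shows is that the text of a selected token is hidden while its bounding box stays in place, so layout cues remain available when predicting the missing word.

```python
import random

MASK_TOKEN = "[MASK]"

def mask_for_mvlm(tokens, boxes, mask_rate=0.15, seed=0):
    """Hide a random subset of tokens; bounding boxes are left untouched (toy illustration)."""
    rng = random.Random(seed)
    num_to_mask = max(1, round(len(tokens) * mask_rate))
    masked_positions = set(rng.sample(range(len(tokens)), num_to_mask))

    masked_tokens = [
        MASK_TOKEN if i in masked_positions else token
        for i, token in enumerate(tokens)
    ]
    # Labels hold the original word at masked positions and None elsewhere.
    labels = [
        token if i in masked_positions else None
        for i, token in enumerate(tokens)
    ]
    return masked_tokens, list(boxes), labels

# Hypothetical OCR output for one line of an invoice.
tokens = ["invoice", "number", ":", "4711", "total", "due", ":", "$120"]
boxes = [
    [50, 40, 130, 60], [135, 40, 210, 60], [212, 40, 218, 60], [225, 40, 270, 60],
    [50, 70, 100, 90], [105, 70, 140, 90], [142, 70, 148, 90], [155, 70, 200, 90],
]

masked_tokens, kept_boxes, labels = mask_for_mvlm(tokens, boxes)
print(masked_tokens)
print(kept_boxes == boxes)  # the layout information is unchanged
```

In the actual model the masking happens on sub-word tokens and the prediction uses the image features as well; this sketch only covers the text and layout side.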

1.2 Spatial-Aware Self-Attention:

An innovative aspect of LayoutLMv2 is the introduction of a spatial-aware self-attention mechanism. This mechanism incorporates 2-D relative position embeddings that allow the model to better understand the spatial relationships among text blocks within a document.

Unlike traditional self-attention, which primarily focuses on the sequential nature of text, spatial-aware self-attention leverages the layout information, enriching the model’s contextual understanding with spatial semantics.
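
The idea can be sketched in a few lines of plain Python. The LayoutLMv2 paper describes adding learnable bias terms to each attention score, indexed by the relative 1-D position of two tokens and by the relative x and y offsets of their bounding boxes. The sketch below is a simplification under stated assumptions: the biases are hand-set dictionaries rather than learned parameters, relative distances are clipped instead of bucketed, and the function names are illustrative only.

```python
import math

def softmax(row):
    peak = max(row)
    exps = [math.exp(value - peak) for value in row]
    total = sum(exps)
    return [e / total for e in exps]

def clip(value, limit):
    return max(-limit, min(limit, value))

def spatial_aware_attention(content_scores, boxes, bias_1d, bias_x, bias_y, limit=2):
    """Add relative-position biases to raw attention scores, then normalize each row.

    content_scores[i][j] is the usual query-key score between tokens i and j.
    boxes[i] is the (x, y) top-left corner of token i in coarse grid units.
    """
    n = len(content_scores)
    weights = []
    for i in range(n):
        row = []
        for j in range(n):
            rel_pos = clip(j - i, limit)                   # 1-D order in the sequence
            rel_x = clip(boxes[j][0] - boxes[i][0], limit)  # horizontal offset
            rel_y = clip(boxes[j][1] - boxes[i][1], limit)  # vertical offset
            row.append(content_scores[i][j] + bias_1d[rel_pos] + bias_x[rel_x] + bias_y[rel_y])
        weights.append(softmax(row))
    return weights

# Three tokens: 0 and 1 share a text line, 2 sits on the line below token 0.
boxes = [(0, 0), (1, 0), (0, 1)]
content_scores = [[0.0] * 3 for _ in range(3)]
no_bias = {d: 0.0 for d in range(-2, 3)}
same_line_bias = {d: (1.0 if d == 0 else 0.0) for d in range(-2, 3)}

weights = spatial_aware_attention(content_scores, boxes, no_bias, no_bias, same_line_bias)
print(weights[0])  # token 0 attends more to token 1 (same line) than to token 2
```

With identical content scores, the only thing separating token 1 from token 2 is the vertical bias, which is exactly the kind of signal a purely sequential attention mechanism does not have.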

Figure 1: Diagram of LayoutLMv2 Architecture Illustrating Pre-training Objectives, Input Embeddings, and Transformer Layers with Spatial-Aware Self-Attention Mechanism for Document Understanding and OCR Processing. Source: LayoutLMv2 paper.

1.3 Advantages for VQA

1.3.1 Integration of Visual Information:

While LayoutLM integrates textual and layout information, LayoutLMv2 extends this by directly incorporating visual features from document images into the pre-training phase.

This early integration allows LayoutLMv2 to learn cross-modal representations, improving its understanding of the document as a whole.

1.3.2 Enhanced Pre-training Tasks

The introduction of the TIA and TIM tasks in LayoutLMv2’s pre-training strategy is particularly beneficial for VQA tasks.

By aligning text with image regions and ensuring the model can match document images with their textual content, LayoutLMv2 is better equipped to handle queries that require an understanding of visual elements (e.g., charts, tables, logos) in addition to text.

1.3.3 Improved Spatial Understanding

The spatial-aware self-attention mechanism gives LayoutLMv2 an edge in understanding the spatial layout of documents. This is important for VQA tasks where the answer might depend on the spatial arrangement of text and visual elements (e.g., “What’s the item listed at the top-right corner?”).

2. Prerequisites

Before starting the fine-tuning process, make sure the following software and libraries are installed:

- Transformers Library: The Hugging Face Transformers library provides access to pre-trained models including LayoutLMv2.

- Detectron2: Provides the visual backbone that LayoutLMv2 uses to extract visual features from document images.

- Tesseract-OCR: Tesseract is an OCR engine used for converting images of text into machine-readable text.

In a Google Colab notebook, run the following commands to install them:

!pip install 'git+https://github.com/facebookresearch/detectron2.git'

!pip install torchvision

!sudo apt install tesseract-ocr

!pip install -q pytesseract

!pip install accelerate

!pip install transformers

!pip install huggingface_hub

!pip install datasets
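
After the installs finish, a quick check like the one below can confirm that everything is visible from Python. This is an optional sketch that uses only the standard library; `check_environment` is an illustrative helper, and the module list simply mirrors the packages installed above.

```python
import importlib.util
import shutil

REQUIRED_MODULES = [
    "transformers",
    "datasets",
    "accelerate",
    "detectron2",
    "pytesseract",
    "torchvision",
    "huggingface_hub",
]

def check_environment(modules=REQUIRED_MODULES, binary="tesseract"):
    """Report which Python modules can be found and whether the OCR binary is on PATH."""
    status = {name: importlib.util.find_spec(name) is not None for name in modules}
    status[f"{binary} (binary)"] = shutil.which(binary) is not None
    return status

for name, available in check_environment().items():
    print(f"{name}: {'ok' if available else 'MISSING'}")
```

If any entry is reported as missing, re-run the corresponding install command before continuing.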

3. Preparing the Dataset

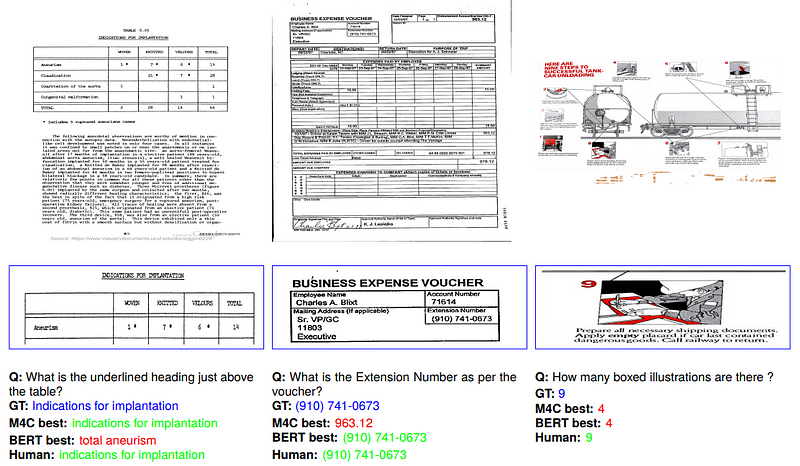

We will load and preprocess the DocVQA dataset for fine-tuning LayoutLMv2. More information can be found in the original publication.

Figure 2: Sample Challenges and Results from the DocVQA Dataset Publication, Showcasing the Comparison of Model Performance (M4C and BERT) Against Ground Truth (GT) and Human Performance in Identifying Text and Numerical Data within Various Document Layouts.

1. Initializing the Processor: Before starting with data preprocessing, initialize the AutoProcessor with your model checkpoint. This processor will be used to process images and encode the data:

from transformers import AutoProcessor

# Assuming you've defined your model checkpoint as the LayoutLMv2 you intend to fine-tune

model_checkpoint = "microsoft/layoutlmv2-base-uncased"

# Initialize the processor

processor = AutoProcessor.from_pretrained(model_checkpoint)

Having the processor initialized at this point is essential because it is used in subsequent steps to:

- Convert document images into model-compatible pixel values

- Extract words and their bounding boxes using OCR capabilities integrated into the processor

- Encode text data along with its spatial layout information for the model
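
On the last point, LayoutLMv2 expects bounding boxes on a 0 to 1000 scale that is independent of the image size. When the processor runs OCR itself, the boxes it returns are already normalized. The sketch below shows the equivalent arithmetic in case you bring your own OCR results; `normalize_box` is an illustrative helper, not a function from the Transformers library, and the page size and box are made-up values.

```python
def normalize_box(box, width, height):
    """Scale a pixel-space box (x0, y0, x1, y1) to the 0-1000 range."""
    x0, y0, x1, y1 = box
    return [
        int(1000 * x0 / width),
        int(1000 * y0 / height),
        int(1000 * x1 / width),
        int(1000 * y1 / height),
    ]

# A word box on a hypothetical 500 x 1000 pixel page.
print(normalize_box((50, 100, 150, 200), width=500, height=1000))  # [100, 100, 300, 200]
```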

2. Load the DocVQA Dataset: Next, load the DocVQA dataset. We use the datasets library for this purpose:

from datasets import load_dataset

# Load the dataset

dataset = load_dataset("nielsr/docvqa_1200_examples")

print(dataset["train"].features)

{'id': Value(dtype='string', id=None),

'image': Image(decode=True, id=None),

'query': {'de': Value(dtype='string', id=None),

'en': Value(dtype='string', id=None),

'es': Value(dtype='string', id=None),

'fr': Value(dtype='string', id=None),

'it': Value(dtype='string', id=None)},

'answers': Sequence(feature=Value(dtype='string', id=None), length=-1, id=None),

'words': Sequence(feature=Value(dtype='string', id=None), length=-1, id=None),

'bounding_boxes': Sequence(feature=Sequence(feature=Value(dtype='float32', id=None), length=4, id=None), length=-1, id=None),

'answer': {'match_score': Value(dtype='float64', id=None),

'matched_text': Value(dtype='string', id=None),

'start': Value(dtype='int64', id=None),

'text': Value(dtype='string', id=None)}}

Visualize a single document image:

import matplotlib.pyplot as plt

import matplotlib.patches as patches

from PIL import Image

document = dataset['train'][0]

# Display the document image

image = document['image'] # This assumes the image is already in a PIL.Image format

plt.figure(figsize=(10, 10))

plt.imshow(image)

plt.axis('off') # Hide axis ticks and labels

# Overlay bounding boxes and text

ax = plt.gca() # Get the current Axes instance

for word, box in zip(document['words'], document['bounding_boxes']):

    # Create a Rectangle patch

    rect = patches.Rectangle((box[0], box[1]), box[2] - box[0], box[3] - box[1], linewidth=1, edgecolor='r', facecolor='none')

    ax.add_patch(rect)

    # Annotate text

    plt.text(box[0], box[1], word, fontsize=8, color='blue', va='top')

plt.show()

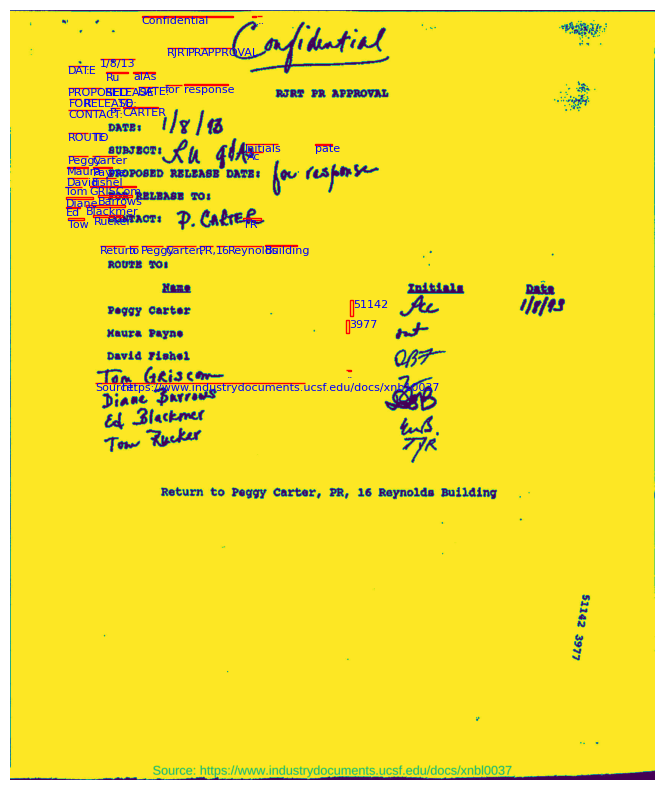

Figure 3: Example Document in the Training Data.

3. Dataset Preprocessing: After loading the dataset, it’s necessary to preprocess it. This step keeps only the English version of each question and simplifies the answer format to a single answer string:

# Filter and refine the dataset

updated_dataset = dataset.map(lambda example: {"question": example["query"]["en"]}, remove_columns=["query"])

updated_dataset = updated_dataset.map(lambda example: {"answer": example["answers"][0]}, remove_columns=["answer", "answers"])

# Remove OCR-related columns

updated_dataset = updated_dataset.remove_columns(["words", "bounding_boxes"])
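
To see what these calls do to a single record, the sketch below applies the same transformations to a plain dictionary. The sample record is made up for illustration and only mirrors the field names printed earlier (the image field is omitted for brevity); `simplify_example` is a hypothetical helper, not part of the datasets API.

```python
DROPPED_FIELDS = {"query", "answers", "answer", "words", "bounding_boxes"}

def simplify_example(example):
    """Mirror the preprocessing above on one record held in a plain dict."""
    simplified = {key: value for key, value in example.items() if key not in DROPPED_FIELDS}
    simplified["question"] = example["query"]["en"]  # keep only the English question
    simplified["answer"] = example["answers"][0]     # keep only the first listed answer
    return simplified

sample = {
    "id": "example-0",
    "query": {"en": "What is the invoice total?", "de": "Wie hoch ist die Rechnungssumme?"},
    "answers": ["120 USD", "120"],
    "answer": {"match_score": 1.0, "matched_text": "120 USD", "start": 3, "text": "120 USD"},
    "words": ["Invoice", "total", ":", "120", "USD"],
    "bounding_boxes": [[0.1, 0.1, 0.2, 0.15]] * 5,
}

print(simplify_example(sample))
```

The result contains just the identifier, the English question, and one answer string, which is the shape the later encoding step works with.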

4. Integrating OCR Data: To utilize both the textual and visual information present in the documents, we apply OCR to the images and extract the words along with their bounding boxes:

from transformers import AutoProcessor

model_checkpoint = "microsoft/layoutlmv2-base-uncased"

processor = AutoProcessor.from_pretrained(model_checkpoint)

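# The image processor resizes each page and, with apply_ocr left at its default of True, runs Tesseract OCR

image_processor = processor.image_processor

def get_ocr_words_and_boxes(examples):

    # Convert every page to RGB before passing the whole batch to the image processor

    images = [image.convert("RGB") for image in examples["image"]]

    encoded_inputs = image_processor(images)

    examples["image"] = encoded_inputs.pixel_values

    examples["words"] = encoded_inputs.words

    examples["boxes"] = encoded_inputs.boxes

    return examples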

# Process the dataset to include OCR data

dataset_with_ocr = updated_dataset.map(get_ocr_words_and_boxes, batched=True, batch_size=2)

5. Encoding the Dataset for Model Input: Next, we encode the dataset to format it correctly for LayoutLMv2. This involves finding the positions of answers within the text and encoding questions, answers, and document images:
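
As a minimal sketch of the first part, locating the answer in the OCR output, the helper below searches the list of words for the answer's words and returns the matching start and end indices. The name `find_answer_span`, the case-insensitive comparison, and the sample words are assumptions made for illustration. These are word-level positions; because each word may be split into several sub-word tokens, they still need to be mapped to token positions before they can serve as training labels.

```python
def find_answer_span(words, answer_words):
    """Return (start, end) word indices of the first match, or None if there is none."""
    if not answer_words:
        return None
    words_lower = [word.lower() for word in words]
    target = [word.lower() for word in answer_words]
    span = len(target)
    for start in range(len(words_lower) - span + 1):
        if words_lower[start:start + span] == target:
            return start, start + span - 1
    return None

# Hypothetical OCR words for one document.
words = ["Invoice", "total", ":", "120", "USD", "due", "in", "30", "days"]

print(find_answer_span(words, "120 USD".split()))  # (3, 4)
print(find_answer_span(words, "not present".split()))  # None
```

Answers that OCR has garbled will not be found this way, so examples where the function returns None need separate handling, for instance by skipping them.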
