Using Llama to analyze Earnings Transcripts, 10-Q Filings and Quarterly Investor Presentations

In this article, we explore the implementation of Meta’s Llama 3.1 for analyzing earnings announcements using Groq’s fast inference capabilities. Llama 3.1, the latest in Meta’s series of large language models, offers enhanced performance and accessibility with models up to 405 billion parameters.

We apply these capabilities to streamline due diligence on earnings call transcripts, 10-Q filings, and quarterly investor presentations, using Tesla's Q2 2024 earnings documents as an example. We'll also use Llama 3.1 to generate and answer due diligence questions alongside a set of standard questions. An end-to-end notebook is provided for immediate implementation.

This article is structured as follows:

- Retrieve earnings transcripts, 10-Q reports, and investor presentations.

- Preprocess and ingest data for vector index storage.

- Query the engine for summaries and key insights.

- Generate due diligence questions using Llama 3.1.

- Perform due diligence on raw data with Llama.

- Produce the final due diligence report.

1. Quarterly Earnings Announcements Reports

Quarterly earnings announcements have a significant impact on market behavior, investor decision-making, and corporate transparency. These reports provide key metrics such as revenue, earnings per share (EPS), profit margins, and growth potential. Earnings reports also serve as a basis for comparing a company's results with analyst expectations: the reported figures can confirm or contradict consensus estimates, leading to adjustments in stock ratings and future growth projections.
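The comparison with analyst expectations is usually expressed as an earnings surprise: the gap between the reported figure and the consensus estimate, relative to the estimate. The sketch below assumes that common definition; the function name and the EPS values are hypothetical and chosen only for illustration, not taken from any real report.

```python
def earnings_surprise_pct(actual: float, consensus: float) -> float:
    """Percentage by which a reported figure beats (+) or misses (-) the consensus estimate."""
    if consensus == 0:
        raise ValueError("consensus estimate must be non-zero")
    # Divide by the absolute estimate so a beat stays positive even when the estimate is negative
    return (actual - consensus) / abs(consensus) * 100.0


# Hypothetical EPS figures, for illustration only (not Tesla's actual numbers)
reported_eps = 0.55
consensus_eps = 0.50
surprise = earnings_surprise_pct(reported_eps, consensus_eps)
print(f"EPS surprise: {surprise:+.1f}%")
```

A positive surprise means the company beat expectations. The same helper works for revenue or any other metric with a consensus estimate.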


2. Meta's Llama 3.1

Llama 3.1 is Meta's latest and most advanced open-source large language model, released in July 2024. It is available in three sizes: 8B, 70B, and 405B parameters. The 405B version makes it the largest openly available foundation model to date.

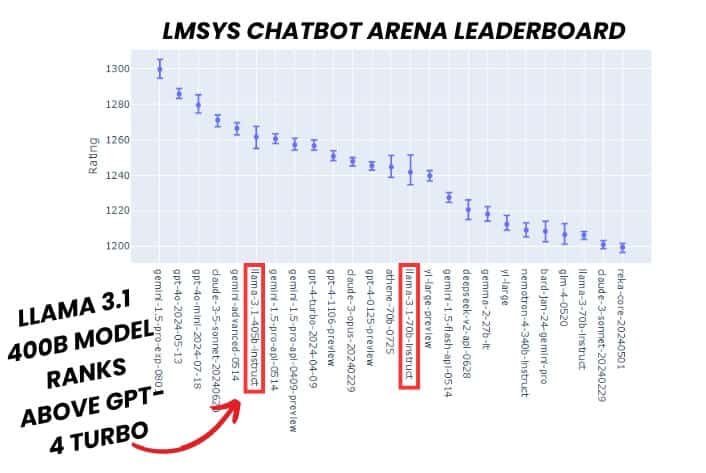
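To give a sense of what these sizes mean in practice, a common rule of thumb is that the weights alone need roughly (number of parameters) × (bytes per parameter) of memory. The sketch below applies that arithmetic; the precision table is an assumption covering typical formats, and the estimate ignores the KV cache, activations, and framework overhead, so real requirements are higher.

```python
# Approximate bytes per parameter for common storage precisions
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for the model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, params in [("8B", 8e9), ("70B", 70e9), ("405B", 405e9)]:
    row = ", ".join(
        f"{precision}: {weight_memory_gb(params, size):,.0f} GB"
        for precision, size in BYTES_PER_PARAM.items()
    )
    print(f"Llama 3.1 {name} -> {row}")
```

At 16-bit precision the 405B model needs about 810 GB just for its weights, which is why it is typically served across many accelerators or accessed through a hosted API such as the one discussed below.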

Key features of Llama 3.1 include an extended context length of 128,000 tokens, multilingual support, and new safety measures. The model performs strongly on tasks such as reasoning and coding, and it already ranks ahead of models like GPT-4 Turbo on the LMSYS Chatbot Arena leaderboard hosted on Hugging Face (sources: Meta, Unite.ai, Hugging Face).
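The 128,000-token context window determines how much of a filing can be passed to the model in one request. The sketch below uses the rough heuristic of about four characters per token for English text; that ratio, the reserved_for_output budget, and the helper names are assumptions, and a real tokenizer will give different counts.

```python
import math

CONTEXT_WINDOW = 128_000  # Llama 3.1 context length in tokens
CHARS_PER_TOKEN = 4.0     # rough rule of thumb for English text; real tokenizer counts differ


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Crude token estimate from the character count."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str, reserved_for_output: int = 4_000,
                    context_window: int = CONTEXT_WINDOW) -> bool:
    """True if the text plus room for the model's answer fits in the context window."""
    return estimate_tokens(text) + reserved_for_output <= context_window


# Synthetic stand-ins for documents of different lengths
short_doc = "Revenue grew this quarter. " * 1_000    # about 27,000 characters
long_doc = "Revenue grew this quarter. " * 30_000    # about 810,000 characters
for name, doc in [("short", short_doc), ("long", long_doc)]:
    print(name, estimate_tokens(doc), "tokens (est.), fits:", fits_in_context(doc))
```

When several long documents together exceed the window, the usual alternative is to split them into chunks and retrieve only the relevant ones, which is what the vector index used later in this article does.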

Figure. 1: LMSYS Chatbot Arena Leaderboard on Hugging Face. LMSYS Chatbot Arena is a crowdsourced open platform for LLM evaluation. It has collected over 1,000,000 human pairwise comparisons, which it uses to rank LLMs with the Bradley-Terry model and display the model ratings on an Elo scale. Source: https://huggingface.co/spaces/lmsys/chatbot-arena-leaderboard

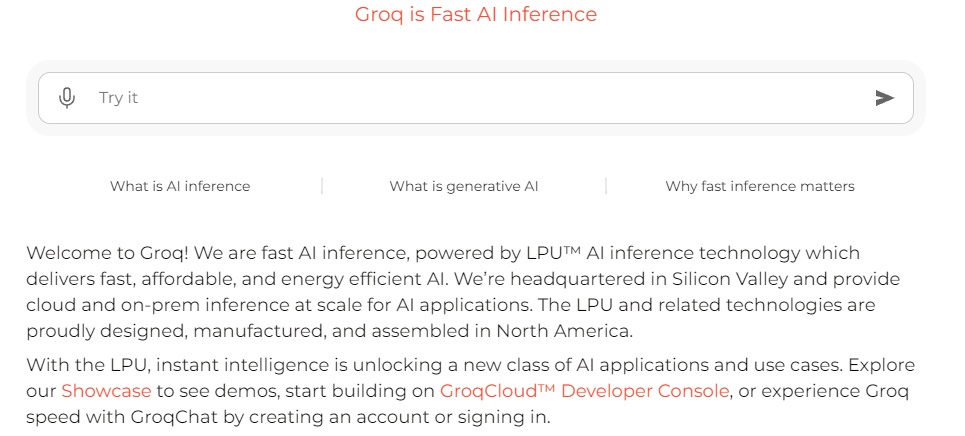
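The Bradley-Terry model mentioned in the caption assigns each model a positive strength p, so that model i beats model j with probability p_i / (p_i + p_j). Writing the strength on an Elo scale, R = base + 400 · log10(p), turns that into the familiar 1 / (1 + 10^((R_j - R_i) / 400)). The sketch below fits the strengths with the classic MM (Zermelo) iteration on hypothetical vote counts; it illustrates the model, not the leaderboard's own implementation, and it assumes every model has at least one win.

```python
import math
from collections import defaultdict


def fit_bradley_terry(wins, iterations=200):
    """Fit Bradley-Terry strengths with the MM (Zermelo) iteration.

    wins[(a, b)] is the number of comparisons in which model a beat model b.
    Assumes every model has at least one win (otherwise its strength goes to zero).
    """
    models = sorted({m for pair in wins for m in pair})
    total_wins = defaultdict(float)
    games = defaultdict(float)  # games[(a, b)]: comparisons between a and b in either order
    for (a, b), n in wins.items():
        total_wins[a] += n
        games[(a, b)] += n
        games[(b, a)] += n

    strength = {m: 1.0 for m in models}
    for _ in range(iterations):
        updated = {}
        for i in models:
            denom = sum(games[(i, j)] / (strength[i] + strength[j]) for j in models if j != i)
            updated[i] = total_wins[i] / denom
        # Strengths are only defined up to a constant factor: fix the geometric mean at 1
        log_mean = sum(math.log(s) for s in updated.values()) / len(updated)
        strength = {m: s / math.exp(log_mean) for m, s in updated.items()}
    return strength


def to_elo(strength, base=1000.0):
    """Map strengths onto an Elo-style scale: a 400-point gap means 10:1 odds."""
    return {m: base + 400.0 * math.log10(s) for m, s in strength.items()}


def win_probability(elo_a, elo_b):
    """Probability that a beats b implied by the two Elo ratings."""
    return 1.0 / (1.0 + 10 ** ((elo_b - elo_a) / 400.0))


# Hypothetical vote counts between three made-up models
votes = {
    ("model_a", "model_b"): 60, ("model_b", "model_a"): 40,
    ("model_b", "model_c"): 55, ("model_c", "model_b"): 45,
    ("model_a", "model_c"): 70, ("model_c", "model_a"): 30,
}
ratings = to_elo(fit_bradley_terry(votes))
for name, rating in sorted(ratings.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {rating:.0f}")
```

Only rating differences carry meaning; the base of 1000 is an arbitrary anchor.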

3. Groq

Groq is a hardware and software platform that delivers exceptionally fast AI inference, particularly for computationally intensive applications with sequential components like LLMs and generative AI.

Key points about Groq:

- It uses a custom chip called the LPU (Language Processing Unit), which Groq designed to overcome the compute density and memory bandwidth bottlenecks that GPUs face with LLMs; Groq reports orders-of-magnitude better performance as a result.

- Groq supports standard ML frameworks like PyTorch and TensorFlow for inference, and offers the GroqWare suite for custom development.

- GroqCloud provides access to popular open-source LLMs such as Meta's Llama 2 70B, which Groq reports runs up to 18x faster than on other providers.
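Why memory bandwidth matters: when generating text one token at a time for a single request, every model weight has to be read from memory for each new token. A simplified, roofline-style upper bound on decode speed is therefore bandwidth ÷ (parameters × bytes per parameter). The sketch below computes that bound with illustrative, hypothetical bandwidth figures; it ignores batching, the KV cache, and compute limits, and it says nothing about how the LPU itself is built.

```python
def decode_tokens_per_second_bound(num_params: float, bytes_per_param: float,
                                   bandwidth_bytes_per_s: float) -> float:
    """Upper bound on single-stream decode speed if each token streams all weights once."""
    bytes_per_token = num_params * bytes_per_param
    return bandwidth_bytes_per_s / bytes_per_token


# Illustrative numbers only: a 70B model with 16-bit weights on hypothetical hardware
for label, bandwidth in [("2 TB/s memory", 2e12), ("8 TB/s memory", 8e12)]:
    bound = decode_tokens_per_second_bound(70e9, 2, bandwidth)
    print(f"{label}: at most {bound:.1f} tokens/s per sequence")
```

Doubling memory bandwidth doubles this ceiling, which is why hardware aimed at LLM inference focuses on moving weights faster rather than only adding compute.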

You can get started with Groq for free and immediately access models such as Llama, Mixtral, and Gemma, either through the API or through Groq's web interface.
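For API access, the groq Python package (installed later in this article) exposes an OpenAI-style chat completions client. The sketch below assumes an API key in the GROQ_API_KEY environment variable; the model ID llama-3.1-70b-versatile is an assumption based on Groq's naming at the time of writing and may have changed, so check Groq's current model list. The helper names build_messages and ask_groq are hypothetical.

```python
import os

# Assumed model ID; verify against Groq's current model list before use
MODEL_ID = "llama-3.1-70b-versatile"


def build_messages(question: str, context: str) -> list:
    """Chat messages in the role/content format used by chat completion APIs."""
    return [
        {"role": "system", "content": "You are a financial analyst. Answer only from the provided context."},
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
    ]


def ask_groq(question: str, context: str, model: str = MODEL_ID) -> str:
    """Send one question to Groq and return the model's reply."""
    from groq import Groq  # imported here so build_messages works without the SDK installed

    client = Groq(api_key=os.environ["GROQ_API_KEY"])
    completion = client.chat.completions.create(
        model=model,
        messages=build_messages(question, context),
        temperature=0.0,  # deterministic-leaning answers suit factual due diligence
    )
    return completion.choices[0].message.content


if __name__ == "__main__" and os.environ.get("GROQ_API_KEY"):
    print(ask_groq("What was total revenue?", "Hypothetical excerpt: total revenue was $1.0B."))
```

In the rest of the article the same service is reached through LlamaIndex's Groq integration rather than the raw SDK.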

Figure. 2: Groq's AI inference platform overview.

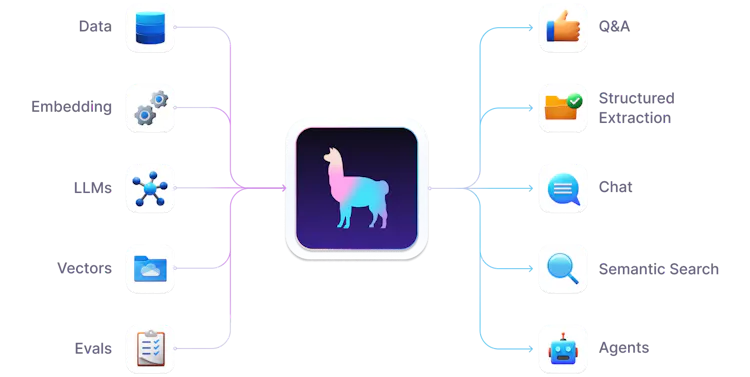

4. LlamaIndex

LlamaIndex is a simple, flexible data framework for connecting custom data sources to LLMs to build powerful Retrieval Augmented Generation (RAG) applications. It provides tools for ingesting data from various sources, indexing it for efficient retrieval, and querying the data in natural language to create applications such as chatbots, question-answering systems, and intelligent agents.

Key features of LlamaIndex include:

- Support for ingesting data from 160+ sources including APIs, databases, documents, etc.

- Indexing data using 40+ vector stores, document stores, graph stores, and SQL databases.

- Querying indexed data using LLMs through prompt chains, RAG, and agents.

- Evaluating LLM performance with retrieval and response quality metrics.

Figure. 3: Diagram of the various components and functionalities of LlamaIndex.
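The ingest, index, and query steps above can be illustrated with a toy retriever. This is not LlamaIndex's API: it swaps the neural embedding model for a simple bag-of-words vector and ranks chunks by cosine similarity, just to show what "indexing" and "retrieval" mean in a RAG pipeline. The class and function names are hypothetical and the chunks are made-up sentences.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': a bag-of-words term-count vector (stand-in for a neural model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorIndex:
    def __init__(self, chunks):
        self.chunks = list(chunks)
        self.vectors = [embed(c) for c in self.chunks]  # indexing: embed every chunk once

    def retrieve(self, query, top_k=2):
        """Return the top_k chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(zip(self.chunks, self.vectors), key=lambda cv: cosine(q, cv[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:top_k]]


def build_prompt(query, index, top_k=2):
    """Augment the question with retrieved context before sending it to an LLM."""
    context = "\n---\n".join(index.retrieve(query, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Made-up document chunks for illustration
index = ToyVectorIndex([
    "Revenue from the automotive segment declined compared with last year.",
    "Energy storage deployments grew to a record level this quarter.",
    "Operating expenses rose due to higher research and development spending.",
])
print(build_prompt("How did energy storage deployments change?", index, top_k=1))
```

A production setup replaces embed with a trained embedding model and the list scan with a vector store, but the flow of embed, store, rank, and prompt stays the same.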

5. Python Implementation

5.1 Install and Load Libraries

To begin, install the required Python libraries. These libraries support various functionalities, from data scraping to document conversion and processing.

- Llama-Index: creates and manages vector indices from documents.

- Llama-Index-LLMs-Groq: connects LlamaIndex to Groq's API, which serves Llama 3.1.

- HuggingFace Embeddings: generates embeddings with pre-trained models from Hugging Face.

- Python-Docx and Pypandoc: handle document creation and conversion.

- PDFKit and Wkhtmltopdf: convert HTML content to PDF, which is particularly useful for saving web-scraped data in a readable format.

```
%pip install llama-index==0.10.18 llama-index-llms-groq==0.1.3 groq==0.4.2 llama-index-embeddings-huggingface==0.2.0
%pip install python-docx pypandoc pdfkit pymupdf

# System tools: pandoc and a LaTeX engine for document conversion, wkhtmltopdf for pdfkit
# (-y answers apt's confirmation prompt, which otherwise aborts in a notebook)
!apt-get install -y pandoc texlive texlive-xetex texlive-latex-extra wkhtmltopdf

# Confirm pandoc is on the PATH
!which pandoc
```
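Because several of these packages are system binaries rather than Python libraries, a quick check that everything is in place can save debugging later. The sketch below uses only the standard library; the module list reflects import names, which differ from pip names (an assumption worth double-checking for your versions): python-docx imports as docx, PyMuPDF as fitz, and BeautifulSoup as bs4. The helper name check_environment is hypothetical.

```python
import importlib.util
import shutil


def check_environment(python_modules, executables):
    """Return (missing Python modules, missing command-line tools)."""
    missing_modules = [m for m in python_modules if importlib.util.find_spec(m) is None]
    missing_tools = [t for t in executables if shutil.which(t) is None]
    return missing_modules, missing_tools


# Import names, not pip package names
modules = ["llama_index", "groq", "docx", "pypandoc", "pdfkit", "fitz", "bs4"]
tools = ["pandoc", "wkhtmltopdf", "xelatex"]

missing_modules, missing_tools = check_environment(modules, tools)
print("Missing Python modules:", missing_modules or "none")
print("Missing executables:", missing_tools or "none")
```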

Next, load the libraries and suppress unnecessary warnings for a cleaner output.

```python
from llama_index.core import (
    VectorStoreIndex,
    SimpleDirectoryReader,
    StorageContext,
    ServiceContext,
    load_index_from_storage,
)
from llama_index.embeddings.huggingface import HuggingFaceEmbedding
from llama_index.core.node_parser import SentenceSplitter
from llama_index.llms.groq import Groq

import warnings
warnings.filterwarnings('ignore')

from pprint import pprint
```

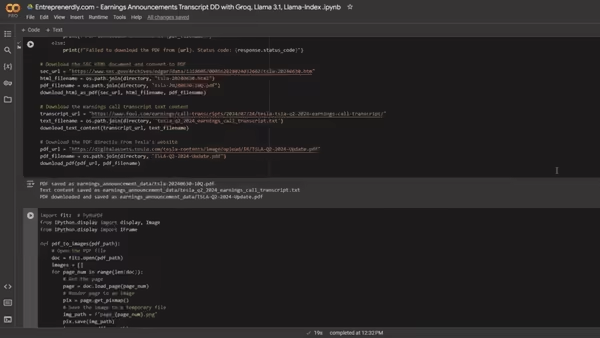

5.2 Getting Earnings Announcements Reports

This section details a Python-based approach to downloading and processing earnings report data. Downloading and parsing data from the SEC can be tricky because of rate limits and complex HTML structures. The download_html_as_pdf function therefore converts the SEC's HTML filing to PDF for easier review and archiving.
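One simple way to respect the SEC's rate limits is to space out requests on the client side. The SEC's fair-access guidance caps automated traffic at 10 requests per second; the sketch below is a minimal, hypothetical throttle that stays under that by enforcing a minimum interval between calls. Calling sec_limiter.wait() before each requests.get to www.sec.gov is the intended usage; the class name and the chosen rate of 5 per second are assumptions.

```python
import time


class RateLimiter:
    """Blocks so that successive wait() calls are at least 1/max_per_second seconds apart."""

    def __init__(self, max_per_second: float):
        self.min_interval = 1.0 / max_per_second
        self._last = None

    def wait(self):
        now = time.monotonic()
        if self._last is not None:
            sleep_for = self.min_interval - (now - self._last)
            if sleep_for > 0:
                time.sleep(sleep_for)
        self._last = time.monotonic()


# Stay comfortably below the SEC's limit of 10 requests per second
sec_limiter = RateLimiter(max_per_second=5)
```

Using time.monotonic rather than wall-clock time keeps the spacing correct even if the system clock is adjusted mid-run.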

Finding transcripts can be challenging because they are often buried in investor relations sections or within news articles. The download_text_content function mimics a browser request to avoid being blocked and extracts the article text into a plain-text file that LlamaIndex can ingest.

Scraping is a sensitive matter, particularly when the content is commercially valuable to its publisher. Users are therefore advised to download the data manually and place it in a folder called "earnings_announcement_data".
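If you download the files manually, a quick check that they are in place (and not empty) before ingestion avoids confusing errors later. The sketch below assumes the same file names that the download code in this section uses; rename the entries if your manual downloads differ. The helper name missing_inputs is hypothetical.

```python
from pathlib import Path

# File names used by the download code in this section; adjust for your own downloads
EXPECTED_FILES = [
    "tsla-20240630-10Q.pdf",
    "tesla_q2_2024_earnings_call_transcript.txt",
    "TSLA-Q2-2024-Update.pdf",
]


def missing_inputs(directory="earnings_announcement_data", expected=EXPECTED_FILES):
    """List expected files that are absent or empty in the data folder."""
    folder = Path(directory)
    return [
        name for name in expected
        if not (folder / name).is_file() or (folder / name).stat().st_size == 0
    ]


missing = missing_inputs()
print("All input files present." if not missing else f"Missing or empty: {missing}")
```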

```python
import os

import pdfkit
import requests
from bs4 import BeautifulSoup

# Create a directory for saving the files
directory = "earnings_announcement_data"
os.makedirs(directory, exist_ok=True)

# Browser-like user agent for sites that reject the default python-requests agent
BROWSER_USER_AGENT = ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36')


# Function to download an SEC HTML filing and save it as PDF
def download_html_as_pdf(url, html_filename, pdf_filename):
    # Set a custom user agent to declare your traffic (replace with your own details)
    headers = {
        'User-Agent': 'YourCompanyName (you@example.com)',
        'Accept-Encoding': 'gzip, deflate',
        'Host': 'www.sec.gov'
    }

    # Download the HTML content and stop early on HTTP errors
    response = requests.get(url, headers=headers, timeout=60)
    response.raise_for_status()
    html_content = response.content.decode('utf-8')

    # Save the HTML content to a file
    with open(html_filename, "w", encoding='utf-8') as file:
        file.write(html_content)

    # Convert the HTML file to PDF (requires the wkhtmltopdf binary)
    pdfkit.from_file(html_filename, pdf_filename)
    print(f"PDF saved as {pdf_filename}")


# Function to download and save the text content of a webpage
def download_text_content(url, text_filename):
    # Set headers to mimic a real browser request
    headers = {'User-Agent': BROWSER_USER_AGENT}
    response = requests.get(url, headers=headers, timeout=60)
    response.raise_for_status()

    # Parse the HTML and find the main content container
    soup = BeautifulSoup(response.content, 'html.parser')
    article_content = soup.find('div', {'class': 'article-body'})

    # Extract and save the text content
    if article_content:
        text_content = article_content.get_text(separator='\n')
        with open(text_filename, 'w', encoding='utf-8') as file:
            file.write(text_content)
        print(f"Text content saved as {text_filename}")
    else:
        print(f"Could not find the article content on the page {url}")


# Function to download a PDF file directly from a URL
def download_pdf(url, pdf_filename):
    response = requests.get(url, headers={'User-Agent': BROWSER_USER_AGENT}, timeout=60)
    if response.status_code == 200:
        with open(pdf_filename, 'wb') as file:
            file.write(response.content)
        print(f"PDF downloaded and saved as {pdf_filename}")
    else:
        print(f"Failed to download the PDF from {url}. Status code: {response.status_code}")


# Download the SEC HTML document and convert it to PDF
sec_url = "https://www.sec.gov/Archives/edgar/data/1318605/000162828024032662/tsla-20240630.htm"
html_filename = os.path.join(directory, "tsla-20240630.html")
pdf_filename = os.path.join(directory, "tsla-20240630-10Q.pdf")
download_html_as_pdf(sec_url, html_filename, pdf_filename)

# Download the earnings call transcript text content
transcript_url = "https://www.fool.com/earnings/call-transcripts/2024/07/24/tesla-tsla-q2-2024-earnings-call-transcript/"
text_filename = os.path.join(directory, 'tesla_q2_2024_earnings_call_transcript.txt')
download_text_content(transcript_url, text_filename)

# Download the investor presentation PDF directly from Tesla's website
pdf_url = "https://digitalassets.tesla.com/tesla-contents/image/upload/IR/TSLA-Q2-2024-Update.pdf"
pdf_filename = os.path.join(directory, "TSLA-Q2-2024-Update.pdf")
download_pdf(pdf_url, pdf_filename)
```
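A 200 status code does not guarantee that the saved file is really a PDF: some sites return an HTML block or error page instead. As a quick heuristic, PDF files normally begin with the bytes %PDF-, so checking that signature catches most bad downloads. The sketch below assumes the folder name used above; the helper names looks_like_pdf and check_downloads are hypothetical.

```python
from pathlib import Path


def looks_like_pdf(path) -> bool:
    """True if the file starts with the '%PDF-' signature that PDF files normally begin with."""
    p = Path(path)
    if not p.is_file():
        return False
    with p.open("rb") as f:
        return f.read(5) == b"%PDF-"


def check_downloads(directory="earnings_announcement_data"):
    """Report which .pdf files in the folder carry a valid PDF signature."""
    for pdf in sorted(Path(directory).glob("*.pdf")):
        status = "ok" if looks_like_pdf(pdf) else "NOT a PDF (possibly an HTML error or block page)"
        print(f"{pdf.name}: {status}")


check_downloads()
```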

